Machine Learning Loss Function Comparison

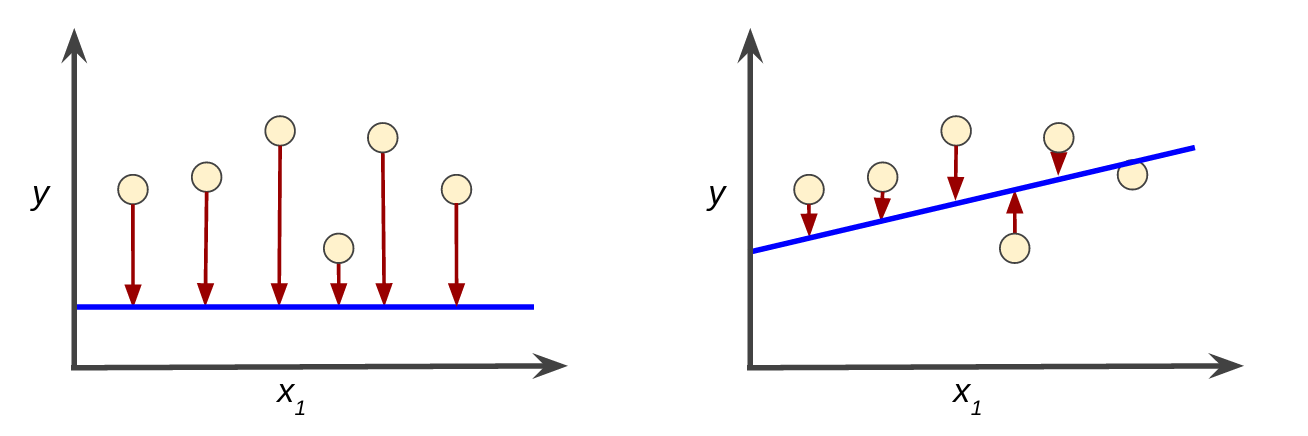

A loss function computes how far a model's prediction is from the true label. Common families include regression losses, binary classification losses, and multiclass classification losses. Each one is designed to give a higher value the more the two labels disagree.
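
To make "a higher value when the labels disagree more" concrete, here is a minimal sketch of binary and multiclass cross-entropy in plain Python. Cross-entropy is one common choice for these two problem types, not the only one. The function names and the `eps` clipping constant are assumptions for illustration, not any particular library's API.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy.

    y_true: labels in {0, 1}; p_pred: predicted probabilities of class 1.
    eps (an assumed constant) keeps log() away from 0.
    """
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to (0, 1)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_cross_entropy(y_true_idx, probs, eps=1e-12):
    """Mean multiclass cross-entropy.

    y_true_idx: index of the true class for each example;
    probs: one row of class probabilities per example (each row sums to 1).
    """
    total = 0.0
    for k, row in zip(y_true_idx, probs):
        total += -math.log(max(row[k], eps))  # penalize low probability on the true class
    return total / len(y_true_idx)

# Agreement gives a small loss; disagreement gives a large one.
print(binary_cross_entropy([1], [0.9]))   # confident and correct
print(binary_cross_entropy([1], [0.1]))   # confident and wrong
print(categorical_cross_entropy([2], [[0.1, 0.1, 0.8]]))  # mass on the true class
print(categorical_cross_entropy([2], [[0.8, 0.1, 0.1]]))  # mass on a wrong class
```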

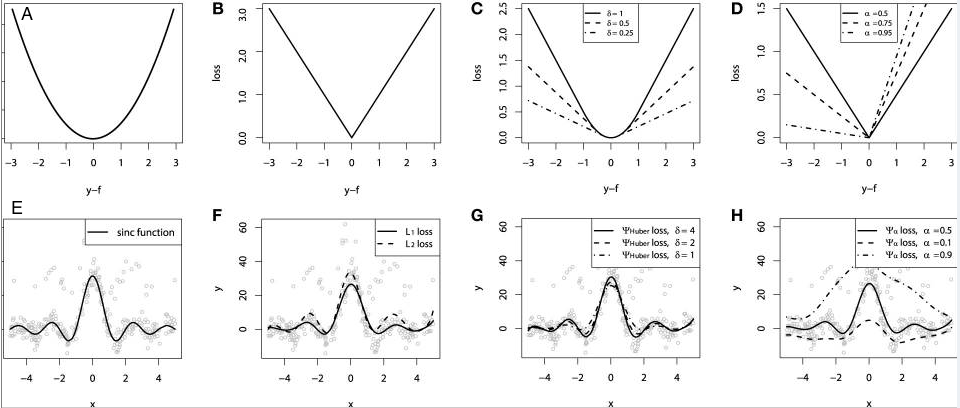

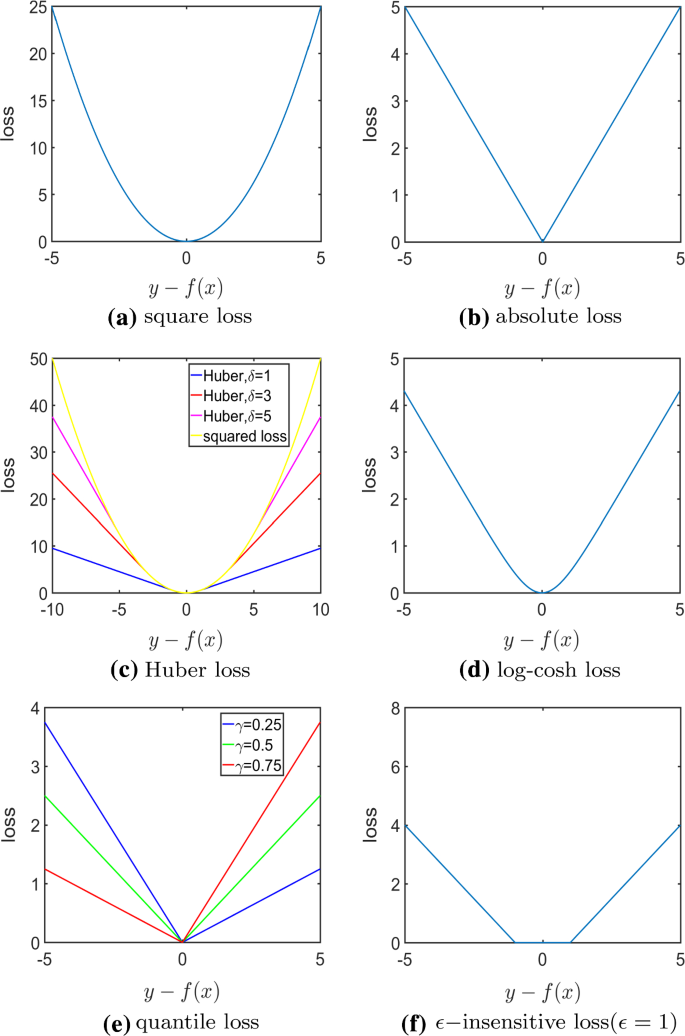

It's the second most commonly used regression loss function.

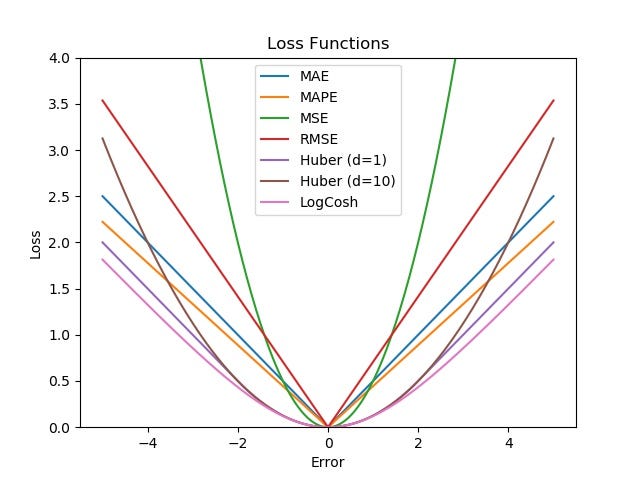

Distance-loss approaches to volatility forecasting have two drawbacks: they introduce errors when estimated volatility is used as the forecasting target, and their models cannot be compared fairly with econometric models. As an error function, the L1-norm loss is also known as least absolute deviations (LAD) or least absolute errors (LAE). The two labels a loss compares are often the label produced by your current model and the true label.

Loss functions are central to the optimization of any machine learning model. For the L1 loss, the value averaged over all examples is the mean of the absolute errors (MAE). Several factors are involved in choosing a loss function for a specific problem, such as the type of machine learning algorithm chosen, the ease of calculating the derivatives, and, to some degree, the percentage of outliers in the data set.
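
A minimal sketch of the L1 loss (least absolute deviations) and its mean, the MAE. The helper names `l1_loss` and `mean_absolute_error` are hypothetical, chosen for this example; the sample values are made up.

```python
def l1_loss(y_true, y_pred):
    """Sum of absolute errors (least absolute deviations)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred))

def mean_absolute_error(y_true, y_pred):
    """Mean of the absolute errors (MAE): the L1 loss divided by the number of examples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return l1_loss(y_true, y_pred) / len(y_true)

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(mean_absolute_error(y_true, y_pred))  # → 0.5
```

The absolute errors here are 0.5, 0.5, 0 and 1, so the L1 loss is 2.0 and the MAE is 2.0 / 4 = 0.5.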

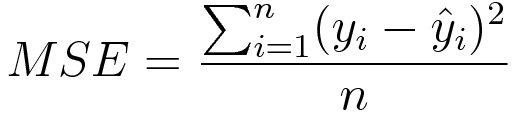

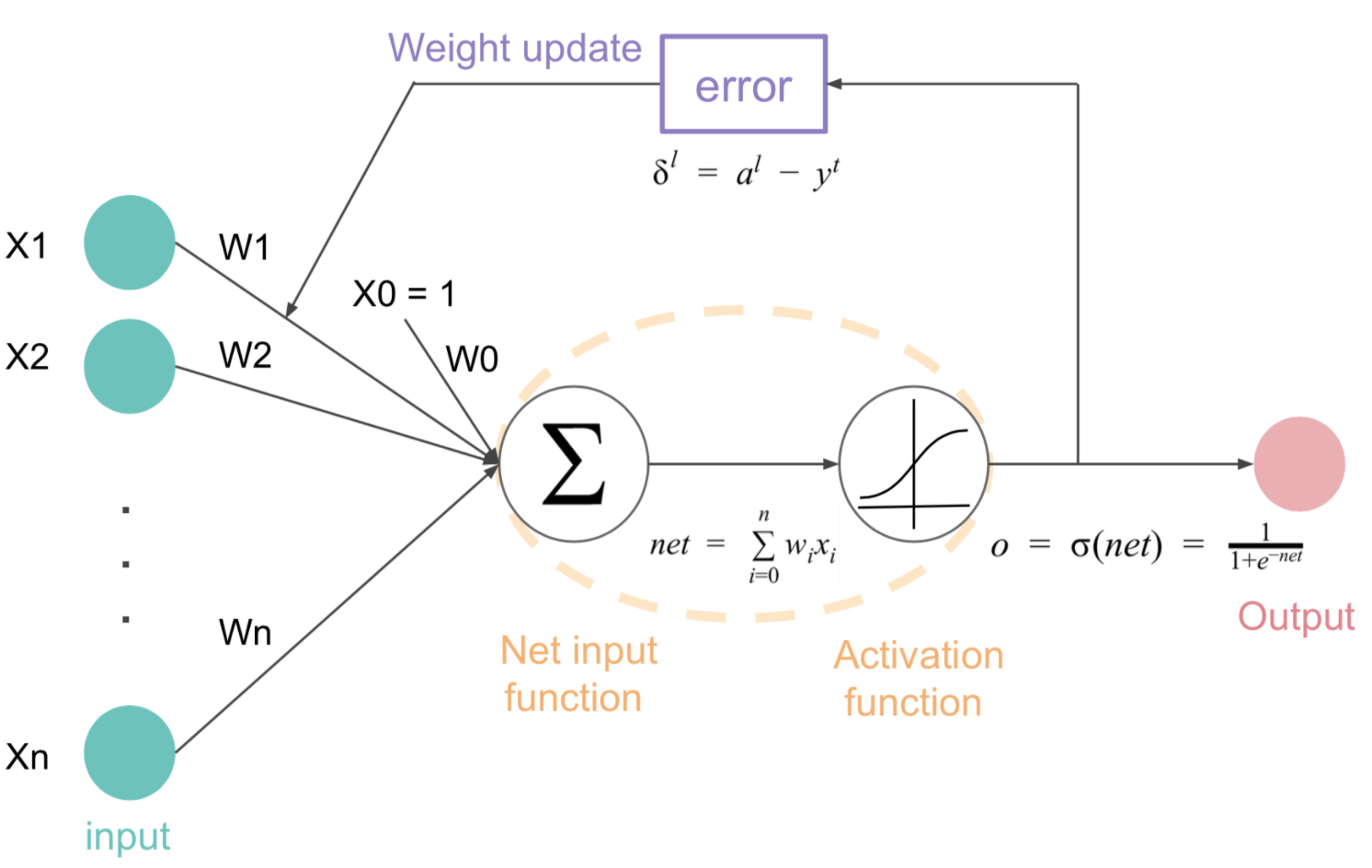

In machine learning, the loss function measures the difference between the actual output and the model's predicted output for a single training example, while the average of the loss over all training examples is termed the cost function.
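
The loss-versus-cost distinction can be sketched in a few lines. Squared error is used as the per-example loss purely as an assumption for illustration; any per-example loss can be passed as `loss_fn`, a hypothetical parameter name.

```python
def squared_error(y, y_hat):
    """Loss for a single training example (squared error, chosen only for illustration)."""
    return (y - y_hat) ** 2

def cost(ys, y_hats, loss_fn=squared_error):
    """Cost: the average of the per-example losses over the whole training set."""
    losses = [loss_fn(y, y_hat) for y, y_hat in zip(ys, y_hats)]
    return sum(losses) / len(losses)

ys = [1.0, 2.0, 3.0]       # actual outputs
y_hats = [1.5, 2.0, 2.0]   # model predictions
print([squared_error(y, h) for y, h in zip(ys, y_hats)])  # → [0.25, 0.0, 1.0]
print(cost(ys, y_hats))                                   # mean of those three losses
```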

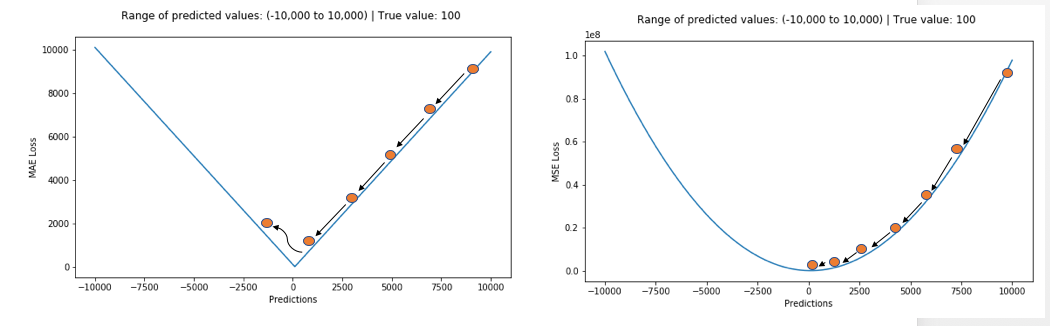

The MAE loss function is more robust to outliers than the MSE loss function. The triplet loss is designed to optimize a neural network that produces embeddings used for comparison; $x_i^a$ denotes an anchor example.
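
The robustness claim is easy to check numerically. This sketch, on made-up data, compares MAE and MSE before and after a single prediction goes badly wrong. Because MSE squares each error, the one large error dominates it far more than it dominates MAE.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [1.0, 2.0, 3.0, 4.0]
clean = [1.1, 1.9, 3.1, 3.9]     # every prediction off by 0.1
outlier = [1.1, 1.9, 3.1, 13.9]  # last prediction off by 9.9

for name, pred in [("clean", clean), ("outlier", outlier)]:
    print(name, "MAE =", round(mae(y_true, pred), 3), "MSE =", round(mse(y_true, pred), 3))
```

With the outlier, MAE rises from about 0.1 to about 2.55, while MSE jumps from about 0.01 to about 24.51.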

The loss function serves to compare two output labels. Two distinct choices go by the name "L1 vs L2": (1) L1-norm vs L2-norm loss function, and (2) L1-regularization vs L2-regularization.
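
For the second choice, the regularization term is added to the cost and acts on the model weights rather than on the prediction errors. A minimal sketch, assuming the common forms `lam * sum(|w|)` for L1 and `lam * sum(w^2)` for L2 (some texts scale the L2 term by 1/2); `lam` and the helper names are hypothetical.

```python
def l1_penalty(weights, lam):
    """L1 regularization term: lam * sum(|w|)."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 regularization term: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)

def regularized_cost(data_cost, weights, lam, kind="l2"):
    """Data cost plus the chosen regularization penalty."""
    if kind == "l1":
        return data_cost + l1_penalty(weights, lam)
    if kind == "l2":
        return data_cost + l2_penalty(weights, lam)
    raise ValueError("kind must be 'l1' or 'l2'")

w = [0.5, -2.0, 0.0]
print(l1_penalty(w, 0.1))  # 0.1 * (0.5 + 2.0 + 0.0)
print(l2_penalty(w, 0.1))  # 0.1 * (0.25 + 4.0 + 0.0)
```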

Most related research studies train their machine learning models with a distance loss function, which brings two disadvantages. The triplet loss operates on triplets, which are three examples from the dataset: an anchor, a positive of the same class, and a negative of a different class. Machine learning is a subset of artificial intelligence in which machines learn by themselves from the available data.

While practicing machine learning, you may have come across the mysterious choice between L1 and L2. In the context of FaceNet, $x_i^a$ is a photograph of a person's face.
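
A minimal sketch of a triplet loss in the FaceNet style: a hinge on squared Euclidean distances that asks the anchor to be closer to the positive than to the negative by at least a margin. The embeddings here are hand-picked 2-D vectors standing in for network outputs, and `margin=0.2` is an assumed default.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(d(a, p) - d(a, n) + margin, 0) on squared distances."""
    return max(squared_distance(anchor, positive) - squared_distance(anchor, negative) + margin, 0.0)

a = [0.0, 0.0]        # anchor embedding
p = [0.1, 0.0]        # same identity, close to the anchor
n_far = [1.0, 1.0]    # different identity, far away
n_near = [0.1, 0.1]   # different identity, too close

print(triplet_loss(a, p, n_far))   # zero: the margin is already satisfied
print(triplet_loss(a, p, n_near))  # positive: this triplet still produces a training signal
```

Triplets that already satisfy the margin contribute nothing, which is why triplet selection (choosing "hard" negatives) matters in practice.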

MAE loss is defined as the average of the absolute differences between the actual and predicted values (MSE, by contrast, averages the squared differences). There's no one-size-fits-all loss function for machine learning algorithms.

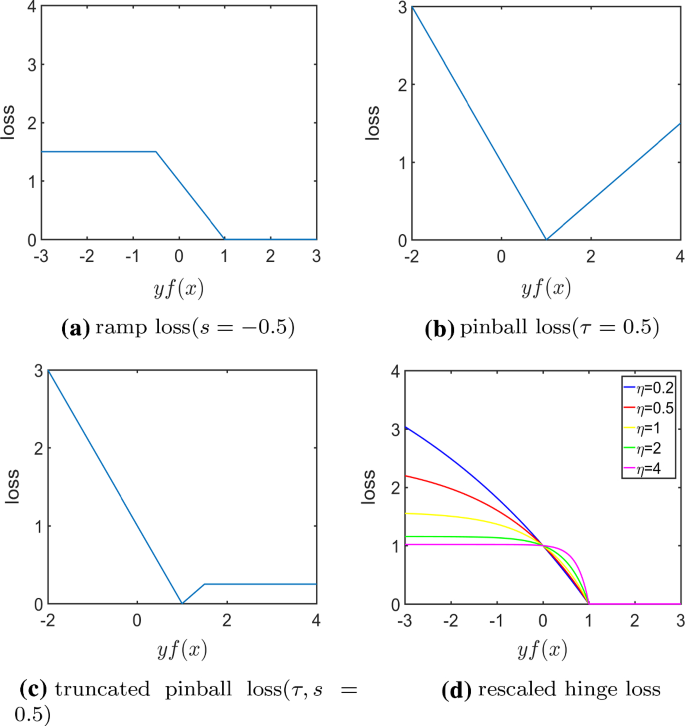

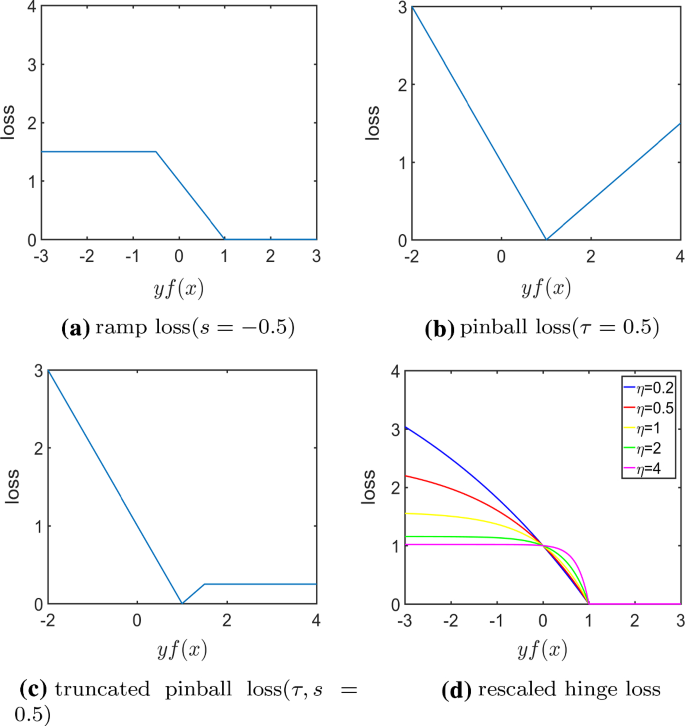

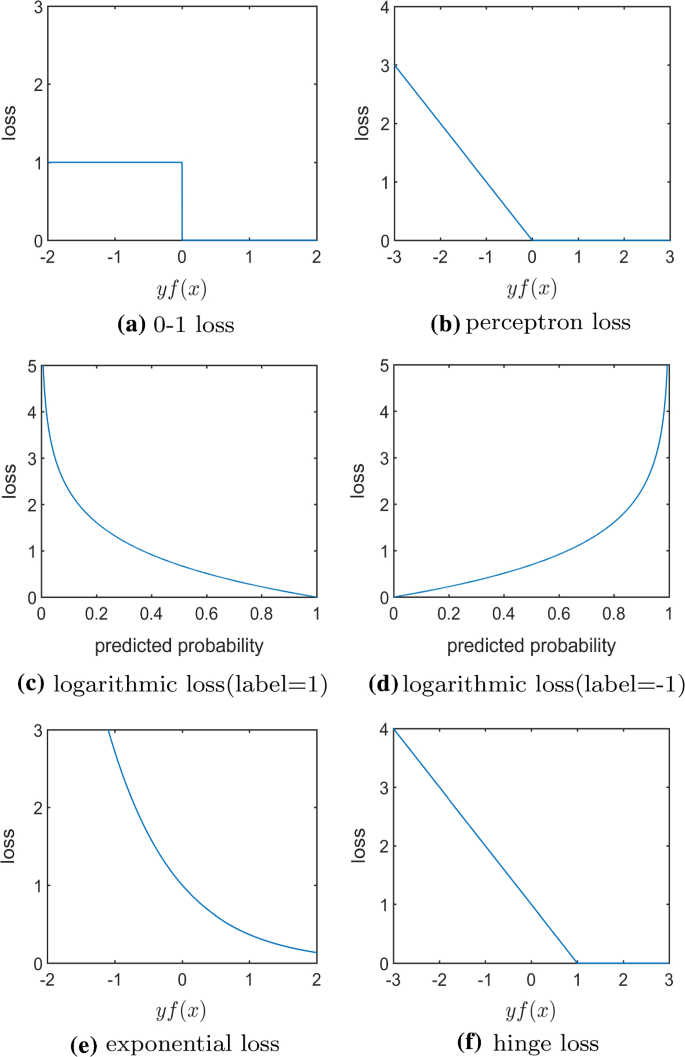