Machine Learning Gradient Descent Octave Code

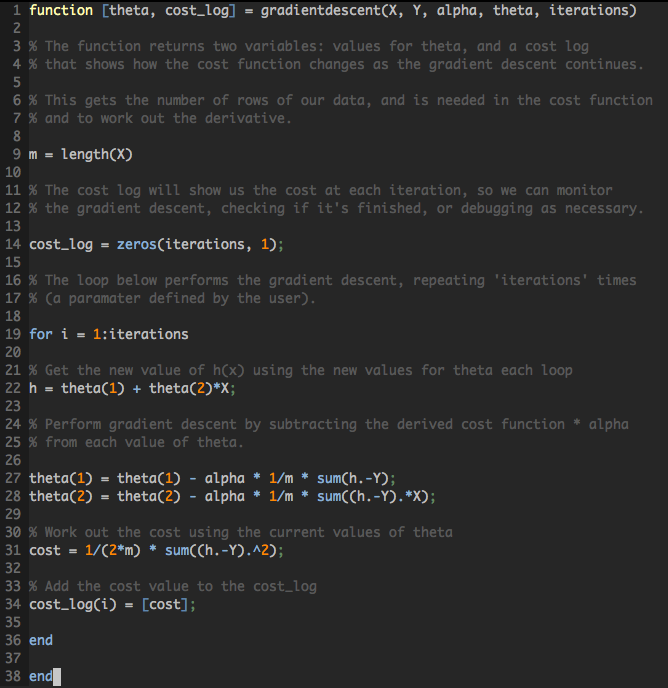

Compute the theta values via batch gradient descent. On each iteration, the algorithm calculates the step (delta) to take for each theta parameter in order to minimize the cost function.

An Easy Guide To Gradient Descent In Machine Learning


Machine learning gradient descent in Octave code. First, initialize some useful values. Before starting gradient descent, we need to add the intercept term to every example, which means adding a column of ones to x.
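As a minimal sketch of the intercept step described above, the snippet below prepends a column of ones to a small feature vector. The data values are made up for illustration, and the name `X` for the augmented matrix is an assumption.

```octave
% Toy data (made up for illustration): one feature, four examples.
x = [1; 2; 3; 4];
y = [2; 4; 6; 8];

m = length(y);          % number of training examples
X = [ones(m, 1), x];    % prepend the intercept column of ones

disp(size(X));          % show the dimensions of the augmented matrix
```

With the column of ones in place, the product `X * theta` includes the intercept term automatically.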

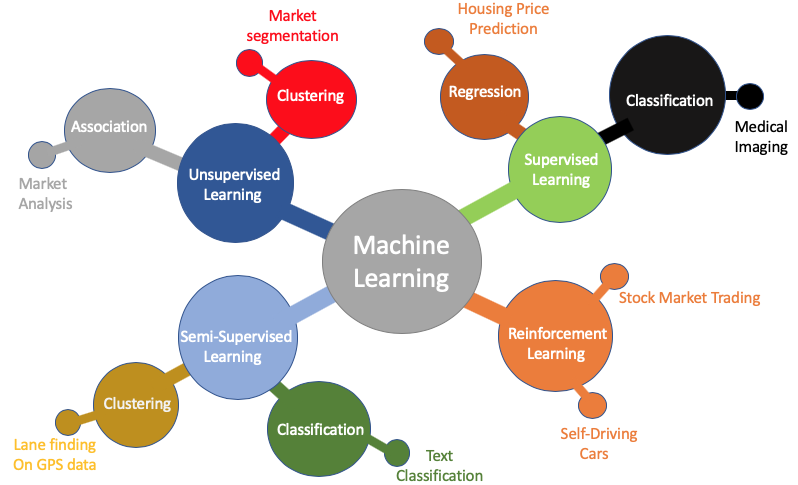

For example, deep learning neural networks are fit using stochastic gradient descent, and many standard optimization algorithms used to fit machine learning models use gradient information. Here $x_j^{(i)}$ denotes feature $j$ of training example $i$, for $j = 1, 2, \dots, n$. A limitation of gradient descent is that it uses the same step size (learning rate) for each input variable.

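One attempted update for the first parameter is `theta_0 = theta_0 - alpha/m * (X * theta_0 - y)`. The cost history is preallocated with `J_history = zeros(num_iters, 1);`. To add the intercept column in MATLAB/Octave, the command is `x = [ones(m, 1), x];`.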

The vectorized loop is `for iter = 1:num_iters, theta = theta - (alpha/m) * X' * (X*theta - y); end`. The third column is scaled with `x(:,3) = (x(:,3) - mu(3)) ./ sigma(3);`.
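The vectorized loop above can be sketched as a complete function. This is one possible implementation under stated assumptions, not the course's reference solution: the cost is recorded after each update, and the toy data, learning rate, and iteration count are chosen only for illustration.

```octave
1;  % script-file marker (Octave idiom) so a function can be defined inline

function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)
  m = length(y);                     % number of training examples
  J_history = zeros(num_iters, 1);   % cost recorded after each update
  for iter = 1:num_iters
    err = X * theta - y;                       % prediction errors, m x 1
    theta = theta - (alpha / m) * (X' * err);  % update all parameters at once
    J_history(iter) = sum((X * theta - y) .^ 2) / (2 * m);
  end
end

% Toy data (made up): y = 1 + 2*x exactly, so the fit should recover [1; 2].
x = [0; 1; 2; 3];
y = 1 + 2 * x;
X = [ones(length(y), 1), x];
[theta, J_history] = gradientDescent(X, y, zeros(2, 1), 0.1, 2000);
```

Plotting `J_history` against the iteration number is a simple way to check that the cost decreases for the chosen `alpha`.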

The number of training examples is stored with `m = length(y);`.

$$J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

`function [J, grad] = lrCostFunction(theta, X, y, lambda)`: LRCOSTFUNCTION computes the cost and gradient for logistic regression with regularization. `J = LRCOSTFUNCTION(theta, X, y, lambda)` computes the cost of using theta as the parameter for regularized logistic regression and the gradient of the cost w.r.t. the parameters.
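The squared-error cost for linear regression can be sketched as follows. The function name `computeCost` appears elsewhere on this page; the body is an illustrative implementation and the sample data are made up.

```octave
1;  % script-file marker (Octave idiom) so a function can be defined inline

function J = computeCost(X, y, theta)
  % Squared-error cost: J = 1/(2m) * sum((X*theta - y).^2)
  m = length(y);
  err = X * theta - y;          % m x 1 vector of residuals
  J = (err' * err) / (2 * m);   % sum of squares via an inner product
end

X = [1 1; 1 2; 1 3];   % first column is the intercept term
y = [1; 2; 3];
J_zero = computeCost(X, y, [0; 0]);   % cost at theta = 0
J_fit  = computeCost(X, y, [0; 1]);   % cost at the exact fit y = x
```

Here `J_zero` works out to 14/6 and `J_fit` to 0, since the second parameter vector reproduces `y` exactly.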

`function [theta, J_history] = gradient_descent(X, y, theta, alpha, lambda, num_iterations)` takes the inputs listed below. This is an example of code vectorization in Octave/MATLAB. We avoid a loop here because we only have two theta parameters.
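The page does not show how `lambda` enters this function. The sketch below assumes it is an L2 regularization strength applied to every parameter except the intercept, which is a common convention but an assumption here; the data and settings are made up.

```octave
1;  % script-file marker (Octave idiom) so a function can be defined inline

function [theta, J_history] = gradient_descent(X, y, theta, alpha, lambda, num_iterations)
  m = length(y);
  J_history = zeros(num_iterations, 1);
  for iter = 1:num_iterations
    err = X * theta - y;
    % Cost before this step, with the penalty on all but the first parameter.
    J_history(iter) = (err' * err) / (2 * m) + (lambda / (2 * m)) * sum(theta(2:end) .^ 2);
    % Assumption: the intercept theta(1) is not penalized.
    grad = (X' * err) / m + (lambda / m) * [0; theta(2:end)];
    theta = theta - alpha * grad;
  end
end

x = [0; 1; 2; 3];
y = 1 + 2 * x;
X = [ones(length(y), 1), x];
theta_plain = gradient_descent(X, y, zeros(2, 1), 0.1, 0, 2000);   % no penalty
theta_reg   = gradient_descent(X, y, zeros(2, 1), 0.1, 10, 2000);  % slope is shrunk
```

With `lambda = 0` the routine reduces to plain batch gradient descent; a positive `lambda` pulls the slope toward zero.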

`function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)` begins with `m = length(y);`. Gradient descent is not limited to linear regression: it is an algorithm that can be applied to many machine learning models, including linear regression and logistic regression, and it is the backbone of deep learning. Gradient Descent Generic Function.

You should see a series of data points similar to the figure below. Perform a single gradient step.

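$$\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

One per-parameter form is `t1 = theta(1) - alpha * (1/m) * sum(X * theta - y);`. The vectorized form is `theta = theta - (alpha/m) * ((X * theta - y)' * X)';` (this is the answer key provided).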
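To see that the per-parameter update and the vectorized update agree, the sketch below performs one step both ways. The temporary names `t1` and `t2`, the data, and the learning rate are illustrative assumptions.

```octave
X = [1 1; 1 2; 1 3];   % first column is the intercept term x_0 = 1
y = [2; 3; 5];
theta = [0; 0];
alpha = 0.1;
m = length(y);

% Per-parameter form: compute both updates from the same old theta,
% then assign them together (a simultaneous update).
err = X * theta - y;
t1 = theta(1) - alpha * (1/m) * sum(err .* X(:, 1));
t2 = theta(2) - alpha * (1/m) * sum(err .* X(:, 2));
theta_loop = [t1; t2];

% Vectorized form of the same step.
theta_vec = theta - (alpha / m) * ((X * theta - y)' * X)';
```

Because `X(:, 1)` is all ones, the first update reduces to a plain sum of the errors, which is why the intercept formula looks simpler than the others.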

Good learning exercise, both to remind me how linear algebra works and to learn the funky vagaries of Octave/MATLAB execution. A prediction is computed with `predict2 = [1, 7] * theta;`. Octave/MATLAB array indices start from one, not zero.
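A short sketch of the prediction step, using hypothetical parameter values chosen only for illustration (they are not fitted results from any dataset):

```octave
theta = [-4; 1.2];   % hypothetical parameters, for illustration only

% The leading 1 multiplies the intercept, which lives in theta(1)
% because Octave/MATLAB indices start from one.
predict1 = [1, 3.5] * theta;
predict2 = [1, 7] * theta;

% Several inputs at once: one row per input, intercept column first.
inputs = [3.5; 7];
predictions = [ones(length(inputs), 1), inputs] * theta;
```

The batched form returns the same two values as `predict1` and `predict2`, stacked in a column.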

The loop header is `for iter = 1:num_iters`. This is the function `gradientDescent` in Octave. The hypothesis assumes $x_0 = 1$.

Ing to calculate the predictions. In MATLAB/Octave, this can be executed with. This is the Octave code to find the delta for gradient descent.


The column means are computed with `mu = mean(x);`. First question: the way I know to solve gradient descent, theta 0 and theta 1 should each use a different approach to get their values, as follows. A prediction is computed with `predict1 = [1, 3.5] * theta;`.

The column standard deviations are computed with `sigma = std(x);`. So the new technique came as gradient descent, which finds the minimum very quickly. It was gratifying to see how much faster the code ran in vector form.

In order to understand what a gradient is, you need to understand what a derivative is from the field of calculus. You should now submit your solutions. `function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)`: GRADIENTDESCENT performs gradient descent to learn theta.
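One way to connect the derivative to the gradient used in the update is to compare the analytic gradient of the squared-error cost with a finite-difference estimate. The sketch below is an illustrative check, not part of the original exercise; the data, the test point, and the name `epsilon` are assumptions.

```octave
X = [1 1; 1 2; 1 3];
y = [1; 2; 2];
m = length(y);

% Squared-error cost as an anonymous function of the parameter vector.
J = @(t) sum((X * t - y) .^ 2) / (2 * m);

theta = [0.5; 0.5];
analytic = (X' * (X * theta - y)) / m;   % gradient from the closed formula

% Central differences: nudge one parameter at a time and measure the slope.
epsilon = 1e-6;
numeric = zeros(2, 1);
for j = 1:2
  step = zeros(2, 1);
  step(j) = epsilon;
  numeric(j) = (J(theta + step) - J(theta - step)) / (2 * epsilon);
end
```

If the two vectors disagree by more than a small tolerance, the gradient code is the first place to look for a bug.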

`y` - a vector of expected output values - m x 1 vector. The second column is scaled with `x(:,2) = (x(:,2) - mu(2)) ./ sigma(2);`. Gradient is a commonly used term in optimization and machine learning.
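The scaling commands on this page can be put together as follows. This is a sketch assuming the first column of `x` is the intercept column of ones, which is left unscaled because its standard deviation is zero; the feature values are made up.

```octave
% Made-up data: intercept column plus two features on very different scales.
x = [ones(4, 1), [1000; 1500; 2000; 2500], [2; 3; 3; 4]];

mu = mean(x);      % row vector of column means
sigma = std(x);    % row vector of column standard deviations

% Scale the feature columns only; column 1 stays as ones.
x(:, 2) = (x(:, 2) - mu(2)) ./ sigma(2);
x(:, 3) = (x(:, 3) - mu(3)) ./ sigma(3);
```

Keep `mu` and `sigma` around: any new input must be scaled with the same values before it is used for prediction.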

`theta` - current model parameters - n. My answer key: `theta_1 = theta_1 - alpha/m * (X * theta_1 -`. Machine learning is the science of getting computers to act without being explicitly programmed.

`function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)`: GRADIENTDESCENT performs gradient descent to learn theta. `theta = GRADIENTDESCENT(X, y, theta, alpha, num_iters)` updates theta by taking `num_iters` gradient steps with learning rate `alpha`. It first initializes some useful values with `m = length(y);`. `X` - training set of features - m x n matrix. Of course, the funny thing about doing gradient descent for linear regression is that there's a closed-form analytic solution.
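For comparison with the iterative method, the least-squares parameters can also be obtained directly from the normal equations. The sketch below uses made-up data and an illustrative learning rate and iteration count; it is meant as a sanity check rather than a recommended workflow.

```octave
x = [0; 1; 2; 3];
y = [1.1; 2.9; 5.2; 6.8];          % made-up, roughly linear data
X = [ones(length(y), 1), x];
m = length(y);

% Direct solution of (X'X) theta = X'y using the backslash operator.
theta_closed = (X' * X) \ (X' * y);

% Batch gradient descent on the same data.
theta = zeros(2, 1);
alpha = 0.1;
for iter = 1:5000
  theta = theta - (alpha / m) * (X' * (X * theta - y));
end
```

The two results should match to within a small tolerance, which makes the direct solution a handy check on a gradient descent implementation.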


This is Programming Assignment 1 from Andrew Ng's Machine Learning course.

Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function.
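The idea of following the negative gradient is not specific to regression. As a minimal sketch, the snippet below minimizes a simple two-variable bowl; the objective, starting point, and step size are all made up for illustration.

```octave
% Objective (made up): f(w) = (w1 - 3)^2 + 2*(w2 + 1)^2, minimized at [3; -1].
f = @(w) (w(1) - 3)^2 + 2 * (w(2) + 1)^2;
grad_f = @(w) [2 * (w(1) - 3); 4 * (w(2) + 1)];

w = [0; 0];      % starting point
alpha = 0.1;     % step size (learning rate)
for iter = 1:200
  w = w - alpha * grad_f(w);   % step against the gradient
end
```

After the loop, `w` sits very close to the minimizer `[3; -1]` and `f(w)` is close to zero.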

Debugging: here are some things to keep in mind as you implement gradient descent.

AdaGrad (short for Adaptive Gradient) is an extension of the gradient descent optimization algorithm that allows the step size in each dimension to be adapted automatically. In your program, scale both types of inputs by their standard deviations and set their means to zero. Store the number of training examples with `m = length(y);` and add the intercept column with `x = [ones(m, 1), x];`.
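As a rough sketch of the AdaGrad idea mentioned above, the loop below keeps a running sum of squared gradients for each parameter and divides each step by its square root. The variable names `G` and `epsilon`, the data, and the settings are assumptions for illustration; this is not code from the original exercise.

```octave
x = [0; 1; 2; 3];
y = 1 + 2 * x;                     % made-up data
X = [ones(length(y), 1), x];
m = length(y);

theta = zeros(2, 1);
alpha = 1.0;                       % base step size
G = zeros(2, 1);                   % running sum of squared gradients
epsilon = 1e-8;                    % guards against division by zero

J_start = sum((X * theta - y) .^ 2) / (2 * m);
for iter = 1:500
  g = (X' * (X * theta - y)) / m;                      % current gradient
  G = G + g .^ 2;                                      % accumulate per parameter
  theta = theta - alpha * g ./ (sqrt(G) + epsilon);    % per-parameter step size
end
J_end = sum((X * theta - y) .^ 2) / (2 * m);
```

Each parameter ends up with its own effective step size, `alpha ./ sqrt(G)`, which shrinks faster for parameters that have seen large gradients.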

In the past decade, machine learning has given us self-driving cars, practical speech recognition, effective web search, and a vastly improved understanding of the human genome.

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)$$

Gradient Descent for Linear Regression. While debugging, it can be useful to print the values of the cost function (`computeCost`) and gradient here.
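For linear regression the partial derivative in the general rule can be worked out explicitly. The derivation below is a sketch assuming the hypothesis $h_\theta(x) = \theta_0 x_0 + \theta_1 x_1$ with $x_0 = 1$ and the squared-error cost $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)})^2$.

$$
\begin{aligned}
\frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)
&= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( h_\theta(x^{(i)}) - y^{(i)} \right) \frac{\partial}{\partial \theta_j} h_\theta(x^{(i)}) \\
&= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}
\end{aligned}
$$

Substituting this into the general rule gives $\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$, and for $j = 0$ the factor $x_0^{(i)} = 1$ drops out.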
