Wiki Boosting Machine Learning

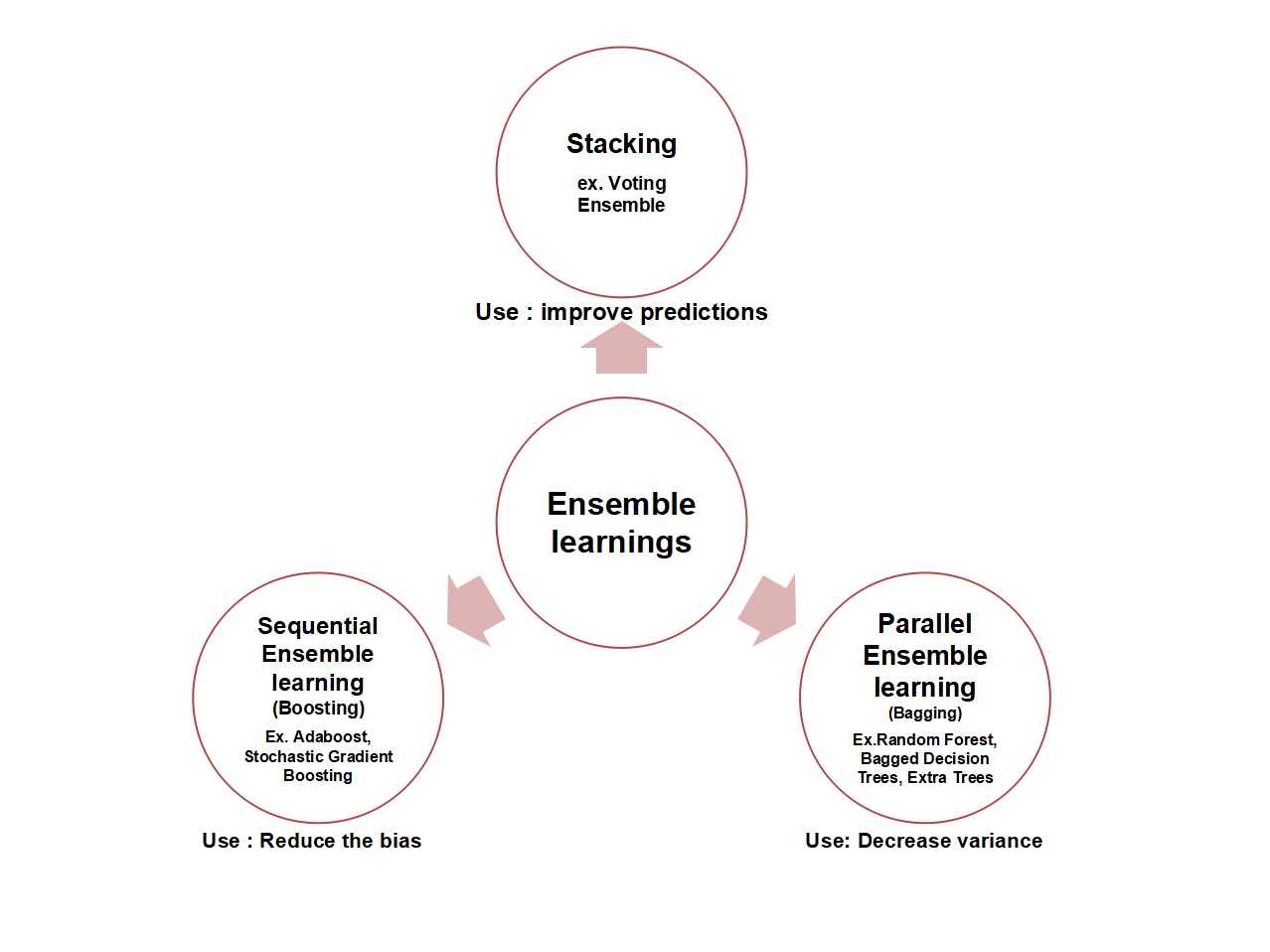

Traditionally, building a Machine Learning application consisted of taking a single learner, such as a Logistic Regressor, a Decision Tree, a Support Vector Machine, or an Artificial Neural Network, feeding it data, and teaching it to perform a certain task through this data. In some cases, boosting has been shown to yield better accuracy than bagging, but it also tends to be more likely to over-fit the training data.

Boosting ML Models To Create Strong Learners

Gradient boosting is one type of boosting used in machine learning.

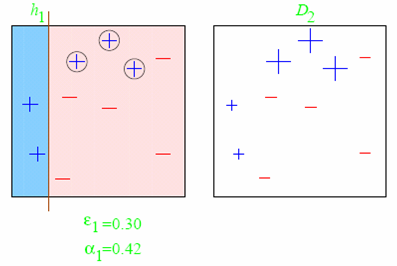

Bootstrap aggregating, also called bagging (a contraction of bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. Boosting, by contrast, involves incrementally building an ensemble by training each new model instance to emphasize the training instances that previous models misclassified. Focusing primarily on the AdaBoost algorithm, this …
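
To make the bagging idea concrete, here is a minimal Python sketch. It assumes 1-D numeric inputs, labels in {-1, +1}, and a one-split decision stump as the base learner. The helper names `fit_stump` and `bagging` are hypothetical and are not a library API. Each model is trained on a bootstrap sample drawn with replacement, and the ensemble predicts by majority vote.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Hypothetical base learner: a 1-D decision stump.

    Tries every threshold t and both polarities (predict +polarity when
    x > t, else -polarity) and keeps the one with the fewest errors.
    """
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            errors = sum(1 for x, y in zip(xs, ys)
                         if (polarity if x > t else -polarity) != y)
            if best is None or errors < best[0]:
                best = (errors, t, polarity)
    _, t, polarity = best
    return lambda x: polarity if x > t else -polarity


def bagging(xs, ys, n_models=25, seed=0):
    """Bootstrap aggregating: train each stump on a resample, then majority-vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn uniformly *with* replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        # With an odd n_models (25 by default) and labels in {-1, +1}, votes cannot tie.
        return Counter(m(x) for m in models).most_common(1)[0][0]

    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [-1, -1, -1, -1, 1, 1, 1, 1]
predict = bagging(xs, ys)
print([predict(x) for x in xs])
```

The bootstrap samples differ, so individual stumps may place their thresholds slightly differently. Averaging them by vote is what smooths out that variance.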

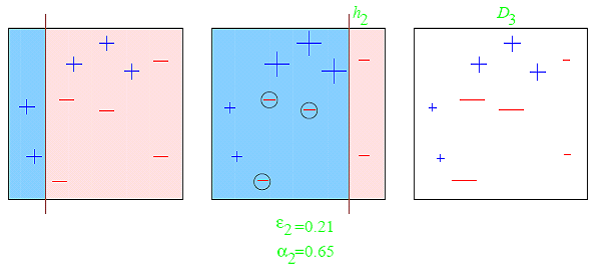

Decision tree learning, or induction of decision trees, is one of the predictive modelling approaches used in statistics, data mining, and machine learning. It uses a decision tree as a predictive model to go from observations about an item (represented in the branches) to conclusions about the item's target value (represented in the leaves). Tree models where the target variable can take a …

Boosting is an ensemble modeling technique that attempts to build a strong classifier from a number of weak classifiers. In machine learning, boosting is an ensemble meta-algorithm for primarily reducing bias, and also variance, in supervised learning, and a family of machine learning algorithms that convert weak learners to strong ones.
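
The branch-and-leaf structure of a decision tree can be sketched as a small Python data structure. The features ("humidity", "wind"), thresholds, and labels below are hypothetical. They only illustrate how an item's observations route it from the root to a leaf. Binary splits on numeric thresholds are an assumption here, since trees can also split on categorical values.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    value: str  # conclusion about the item's target value


@dataclass
class Node:
    feature: str      # which observation about the item to test
    threshold: float  # go left if item[feature] <= threshold, else right
    left: "Tree"
    right: "Tree"


Tree = Union[Leaf, Node]


def predict(tree: Tree, item: dict) -> str:
    """Follow the branches from the root until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.left if item[tree.feature] <= tree.threshold else tree.right
    return tree.value


# Hypothetical tree: feature names and thresholds are made up for illustration.
tree = Node("humidity", 70.0,
            left=Leaf("play"),
            right=Node("wind", 20.0, left=Leaf("play"), right=Leaf("stay home")))

print(predict(tree, {"humidity": 85, "wind": 30}))  # stay home
```

A tree cut off after a single split (a "stump") is a typical weak learner that boosting combines into a strong one.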

Boosting works by building a model from weak models in series. It is a method of combining many weak learners (trees) into a strong classifier, and it relies on the expectation that each new model, when blended with the previous ones, will reduce the prediction error.
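
Building a model from weak models in series can be written as a stagewise additive model. In this sketch, c is a constant starting prediction and h_m is the weak model fitted at step m. The weight ν_m > 0 is how strongly h_m is blended with the earlier models. These symbols are notation chosen here, not taken from the text. AdaBoost, for instance, derives each ν_m from the weak model's weighted error, while gradient boosting commonly uses a small fixed learning rate. For classification, the strong classifier takes the sign of F_M(x).

```latex
\begin{aligned}
F_0(x) &= c, \\
F_m(x) &= F_{m-1}(x) + \nu_m \, h_m(x), \qquad m = 1, \dots, M, \\
F_M(x) &= c + \sum_{m=1}^{M} \nu_m \, h_m(x).
\end{aligned}
```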

Then ensemble methods were born, which combine many learners to enhance accuracy. In boosting, the main idea is to set the target outcomes for the next model so that fitting them minimizes the prediction error. The Boosting Approach to Machine Learning: An Overview, Robert E. …
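
For squared-error loss, "setting target outcomes for the next model" has a concrete form in gradient boosting: each new model is fitted to the residuals of the current ensemble. The sketch below assumes 1-D inputs, depth-1 regression trees as weak learners, 50 rounds, and a fixed learning rate of 0.1. These are illustrative choices. `fit_regression_stump` and `gradient_boost` are hypothetical names, not a library API.

```python
def fit_regression_stump(xs, targets):
    """Depth-1 regression tree: one split, predicting the mean target on each side."""
    best = None
    for t in sorted(set(xs))[:-1]:  # splitting at the largest x would leave the right side empty
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all x identical: fall back to a constant prediction
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared-error loss with regression stumps."""
    f0 = sum(ys) / len(ys)  # initial model: the mean minimizes squared error
    preds = [f0] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # For L = (y - F)^2 / 2 the negative gradient w.r.t. F is the residual y - F,
        # so the residuals are the target outcomes for the next model.
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_regression_stump(xs, residuals)
        stumps.append(h)
        preds = [p + learning_rate * h(x) for p, x in zip(preds, xs)]

    def predict(x):
        return f0 + learning_rate * sum(s(x) for s in stumps)

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]
model = gradient_boost(xs, ys)
print([round(model(x), 2) for x in xs])
```

Because each stump is a least-squares fit to the current residuals, adding a shrunken copy of it lowers the training error at every round. The small learning rate trades more rounds for less over-fitting.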

What is boosting in machine learning? Boosting is a sequential ensemble method in which each consecutive model attempts to correct the errors of the previous model.
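
A minimal AdaBoost sketch shows this sequential correction. After each round, the instances the current stump gets wrong are up-weighted, so the next stump focuses on them. The final prediction is a weighted vote. The sketch assumes 1-D inputs, labels in {-1, +1}, and 10 rounds. The helper names `fit_weighted_stump` and `adaboost` are hypothetical.

```python
import math


def fit_weighted_stump(xs, ys, w):
    """Hypothetical weak learner: the 1-D threshold stump with the smallest weighted error."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            # Predict +polarity when x > t, else -polarity; sum the weights of the mistakes.
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if (polarity if x > t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    err, t, polarity = best
    return err, (lambda x: polarity if x > t else -polarity)


def adaboost(xs, ys, n_rounds=10):
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform instance weights
    ensemble = []      # (alpha, stump) pairs
    for _ in range(n_rounds):
        err, h = fit_weighted_stump(xs, ys, w)
        if err >= 0.5:  # no better than chance: stop
            break
        err = max(err, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, h))
        # y * h(x) is -1 for a mistake, so misclassified weights grow by e^alpha
        # and correctly classified weights shrink by e^-alpha.
        w = [wi * math.exp(-alpha * y * h(x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]

    def predict(x):
        # Final strong classifier: sign of the alpha-weighted vote.
        score = sum(a * stump(x) for a, stump in ensemble)
        return 1 if score >= 0 else -1

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # the negative class sits in the middle
predict = adaboost(xs, ys)
print([predict(x) for x in xs])
```

On this toy data, no single threshold stump classifies all six points: the best one still gets two wrong. A weighted vote of several stumps, each trained on the re-weighted data, can carve out the middle interval.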

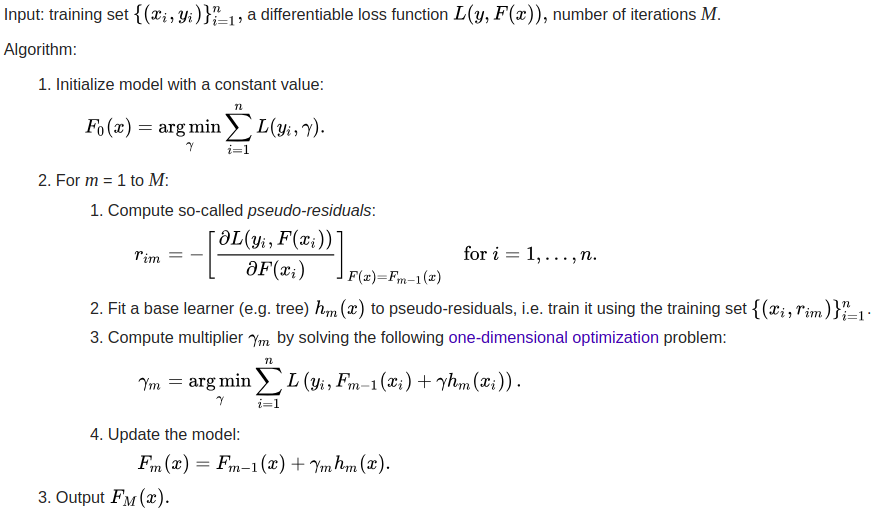

If a training instance is misclassified by one weak model, its weight is increased, so the next base learner is more likely to classify it correctly. Gradient boosting (the term derives from gradient boosting machines) is a popular supervised machine learning technique for regression and classification problems that aggregates an ensemble of weak individual models to obtain a more accurate final model.
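
The weight increase can be written out for AdaBoost, the classic boosting algorithm of this kind. The formulas assume labels y_i in {-1, +1}, instance weights w_i^{(t)} that sum to 1, a weak learner h_t at round t, and T rounds in total. When h_t misclassifies x_i, the product y_i h_t(x_i) equals -1, so the weight is multiplied by e^{α_t}. That factor exceeds 1 whenever ε_t < 1/2. Correctly classified instances are scaled down by e^{-α_t}, and Z_t renormalizes the weights.

```latex
\begin{aligned}
\varepsilon_t &= \sum_{i=1}^{n} w_i^{(t)} \,\mathbf{1}\big[h_t(x_i) \neq y_i\big],
\qquad \alpha_t = \tfrac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}, \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\big(-\alpha_t \, y_i \, h_t(x_i)\big)}{Z_t},
\qquad Z_t = \sum_{j=1}^{n} w_j^{(t)} \exp\big(-\alpha_t \, y_j \, h_t(x_j)\big), \\
H(x) &= \operatorname{sign}\Big(\sum_{t=1}^{T} \alpha_t \, h_t(x)\Big).
\end{aligned}
```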


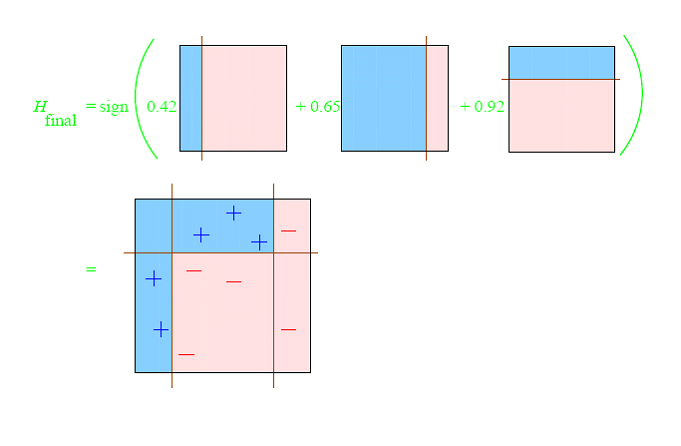

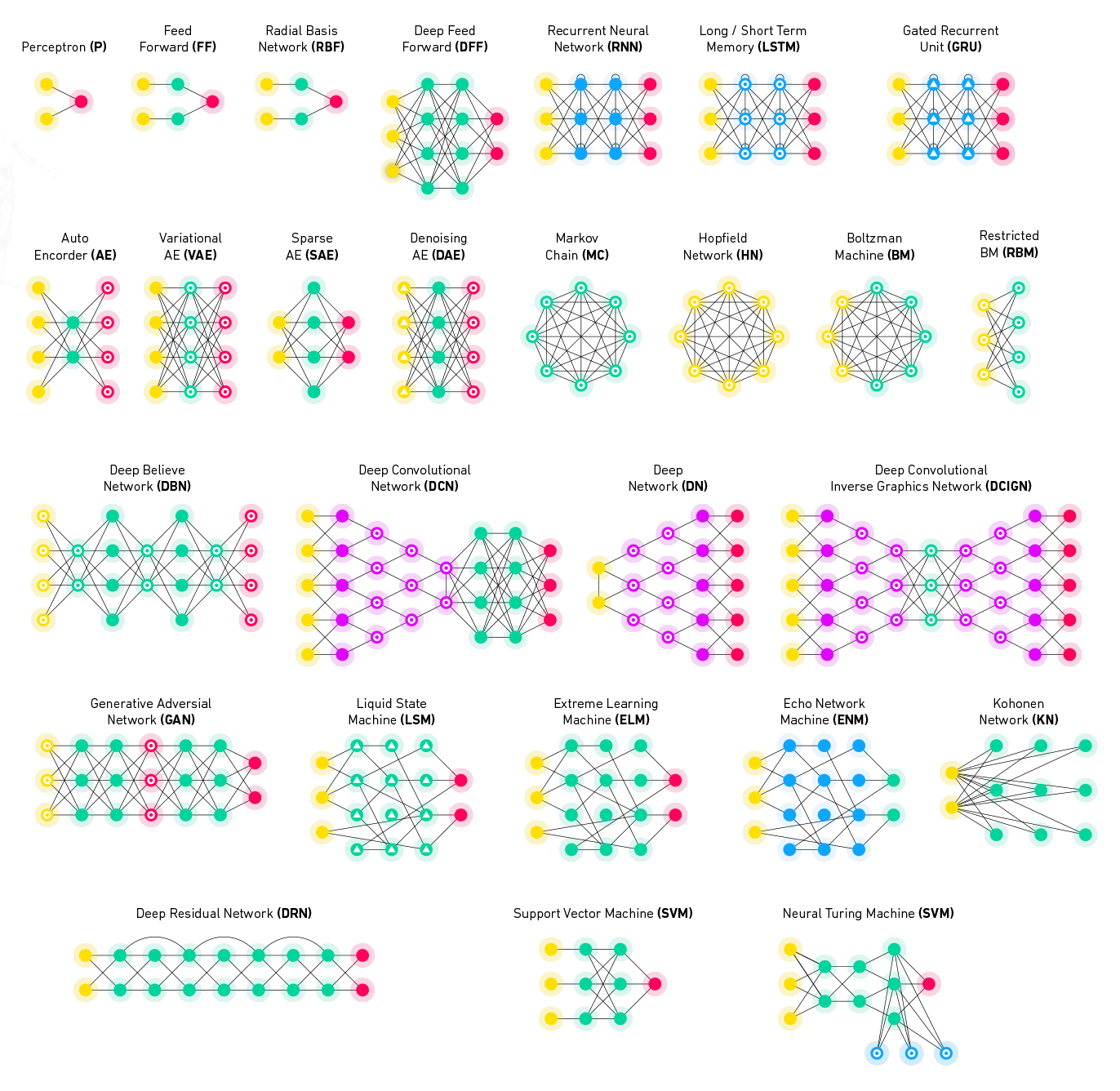

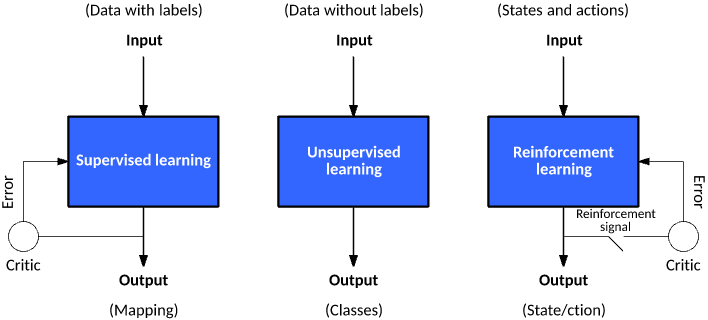
