Variational Autoencoder Machine Learning Mastery

A common way of describing a neural network is as an approximation of some function we wish to model. Autoencoders are an unsupervised learning method, although technically they are trained using supervised learning methods, an approach referred to as self-supervised learning.

We'll then discuss variational autoencoder loss functions, which will provide some intuition as to how variational autoencoders work. Variational autoencoders provide a principled framework for learning deep latent-variable models and corresponding inference models. The encoder compresses the input, and the decoder attempts to recreate the input from the compressed version provided by the encoder.
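For orientation, the loss usually minimized when training a VAE can be written as below. The notation is an assumption borrowed from the standard formulation rather than from this article: $q_\phi(z \mid x)$ is the encoder's distribution over latent codes, $p_\theta(x \mid z)$ is the decoder's distribution over reconstructions, and $p(z)$ is the prior, commonly a standard normal.

$$
\mathcal{L}(\theta, \phi; x) = -\,\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] + D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction of the input; the second keeps the encoded distributions close to the prior, which is what later makes sampling from the latent space meaningful.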

An autoencoder is composed of encoder and decoder sub-models. After training, the encoder model is saved, and the decoder is typically discarded when only the compressed representation is needed. To get an understanding of a VAE, we'll first start from a simple network and add parts step by step.
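As a rough illustration of the encoder/decoder split, the sketch below uses hand-set linear weights rather than a trained network. The names `W_ENC`, `W_DEC`, `encode`, `decode`, and `mse` are hypothetical and chosen only for this example.

```python
# Minimal linear autoencoder sketch with hand-set (not trained) weights.
# All names are illustrative assumptions, not part of any library.

W_ENC = [[0.5, 0.5, 0.0, 0.0],
         [0.0, 0.0, 0.5, 0.5]]   # 4 inputs -> 2 latent values
W_DEC = [[1.0, 0.0],
         [1.0, 0.0],
         [0.0, 1.0],
         [0.0, 1.0]]             # 2 latent values -> 4 outputs


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def encode(x):
    """Compress the input into a lower-dimensional code."""
    return matvec(W_ENC, x)


def decode(code):
    """Attempt to recreate the input from the code."""
    return matvec(W_DEC, code)


def mse(a, b):
    """Mean squared reconstruction error between two vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


x = [1.0, 2.0, 3.0, 4.0]
code = encode(x)                  # 2 numbers instead of 4
reconstruction = decode(code)     # lossy copy of x
error = mse(x, reconstruction)    # what training would try to minimize
```

In a real model the weights would be learned by minimizing `error` over a dataset; here they simply average neighbouring pairs, so the reconstruction is close to the input but not identical.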

With the experiments mentioned in points 4, 5, and 6, we will see that the variational autoencoder is better than the vanilla autoencoder at learning the data distribution and can generate realistic images from a normal distribution. The idea is that, instead of mapping the input to a fixed vector, we map it to a distribution.

Autoencoders are typically trained as part of a broader model that attempts to recreate the input. A VAE can generate samples by first sampling from the latent space.
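The generation step can be sketched as follows, assuming a standard normal prior over a 2-dimensional latent space. The decoder here is a hypothetical stand-in (a fixed linear map followed by a sigmoid), not a trained model, and `LATENT_DIM`, `W_DEC`, and `generate` are names invented for the example.

```python
import math
import random

LATENT_DIM = 2  # assumed latent size for this sketch

# Hypothetical stand-in for a trained decoder: fixed weights mapping
# 2 latent values to 3 outputs squashed into (0, 1), like pixel values.
W_DEC = [[0.8, -0.3],
         [0.1, 0.9],
         [-0.5, 0.4]]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode(z):
    """Map a latent vector to an output vector."""
    return [sigmoid(sum(w * zi for w, zi in zip(row, z))) for row in W_DEC]


def generate(rng):
    """Draw z from the standard normal prior, then decode it."""
    z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
    return decode(z)


rng = random.Random(0)  # seeded so the sketch is reproducible
samples = [generate(rng) for _ in range(5)]
```

No input is needed at generation time: every new draw of `z` yields a new output, which is what distinguishes this use from plain reconstruction.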

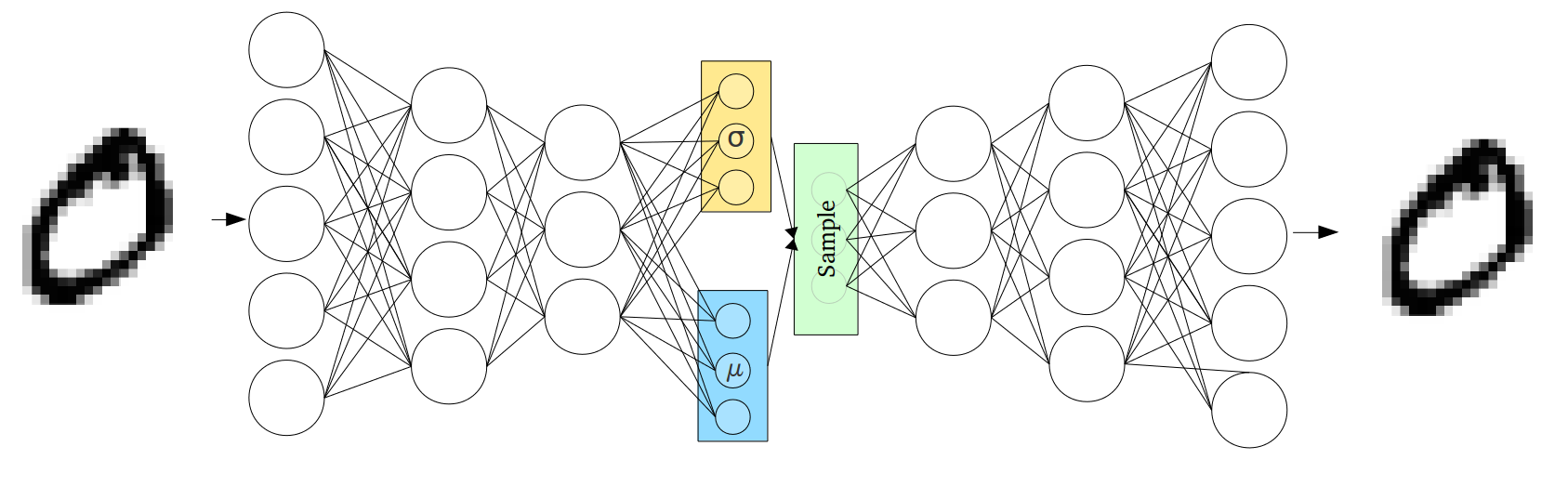

In this work, we provide an introduction to variational autoencoders and some important extensions. In contrast to a normal autoencoder, which learns to encode an input as a point in latent space, variational autoencoders (VAEs) learn to encode multivariate probability distributions into latent space, usually Gaussian ones, depending on their configuration. We will look at some of these shortly.

In other words, the encoder outputs two vectors of the same size: one of means and one of standard deviations (or log-variances). An autoencoder is a type of neural network that can be used to learn a compressed representation of raw data. Variational autoencoders (VAEs) are a group of generative models in the field of deep learning and neural networks.
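A minimal sketch of such a two-output encoder is shown below. It assumes the common convention of predicting the log-variance rather than the standard deviation directly; the weights `W_MEAN` and `W_LOGVAR` are made-up values, not learned ones.

```python
import math

# Hypothetical weights for an encoder with 3 inputs and 2 latent dimensions.
# Each "head" is a separate linear map applied to the same input.
W_MEAN = [[0.2, -0.1, 0.4],
          [0.0, 0.3, -0.2]]
W_LOGVAR = [[-0.3, 0.1, 0.0],
            [0.2, 0.0, -0.1]]


def linear(weights, x):
    """Apply a linear map given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def encode(x):
    """Return the two vectors that parameterize the latent Gaussian."""
    mean = linear(W_MEAN, x)
    log_var = linear(W_LOGVAR, x)
    return mean, log_var


mean, log_var = encode([1.0, 2.0, 3.0])
# Exponentiating half the log-variance always yields a positive std.
std = [math.exp(0.5 * lv) for lv in log_var]
```

Predicting the log-variance is a design choice that keeps the standard deviation positive without constraining the network's raw output.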

What is a variational autoencoder? An autoencoder is a neural network model that seeks to learn a compressed representation of an input. A variational autoencoder can be trained with ordinary backpropagation once the reparameterization trick has been applied, because the trick makes the sampling step differentiable.
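The reparameterization trick can be sketched in a few lines, assuming a diagonal Gaussian latent distribution described by a mean vector and a log-variance vector. The function name `reparameterize` and the example values are illustrative only.

```python
import math
import random


def reparameterize(mean, log_var, rng):
    """Sample z = mean + sigma * eps, with eps ~ N(0, 1).

    The randomness lives entirely in eps, so z is a deterministic,
    differentiable function of mean and log_var.
    """
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]


rng = random.Random(42)
mean = [1.0, -2.0]
log_var = [0.0, math.log(0.25)]  # standard deviations 1.0 and 0.5

# Many draws should average out to the requested means.
draws = [reparameterize(mean, log_var, rng) for _ in range(20000)]
avg = [sum(d[i] for d in draws) / len(draws) for i in range(2)]

# With a vanishing variance the sample collapses onto the mean.
collapsed = reparameterize([3.0], [-1000.0], rng)
```

Sampling `z` directly from the Gaussian would give the same distribution, but gradients could not flow back to `mean` and `log_var`; moving the noise into `eps` is what removes that obstacle.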

The association of VAEs with the autoencoder family derives mainly from their architectural affinity with the basic autoencoder (the final training objective has an encoder and a decoder), but their mathematical formulation differs significantly. A variational autoencoder (VAE) is an autoencoder whose training is regularized to avoid overfitting and to ensure that the latent space has good properties that enable a generative process. Deep learning is a subset of machine learning that has applications in both supervised and unsupervised learning, and it is frequently used to power most of the AI applications that we use on a daily basis.
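One common form of this regularization, assumed here rather than stated in the text, is the Kullback-Leibler divergence between the encoder's diagonal Gaussian and a standard normal prior, which has a closed form. The function name `kl_to_standard_normal` is hypothetical.

```python
import math


def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, diag(exp(log_var))) || N(0, I) ) in closed form.

    Per dimension: 0.5 * (mean^2 + variance - log(variance) - 1).
    """
    return 0.5 * sum(
        m * m + math.exp(lv) - lv - 1.0
        for m, lv in zip(mean, log_var)
    )


# An encoding that already matches the prior incurs no penalty.
kl_prior = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])

# Shifting the means away from zero is penalized.
kl_shifted = kl_to_standard_normal([1.0, -1.0], [0.0, 0.0])
```

Adding this penalty to the reconstruction error pulls all encoded distributions toward the same region of latent space, which is one way to obtain the "good properties" needed for generation.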

A variational autoencoder (VAE) is a type of neural network that learns to reproduce its input and also to map data to a latent space. However, neural networks can also be thought of as a data structure that holds information.

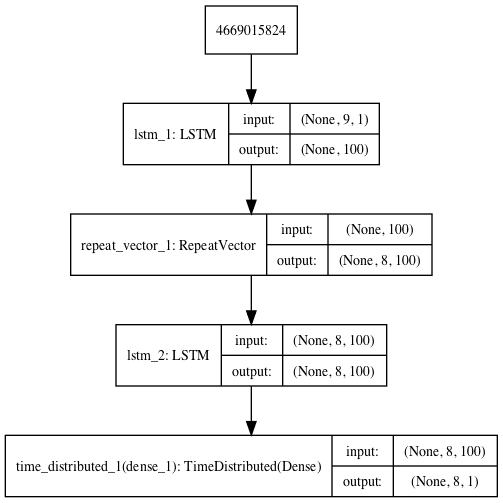

Variational autoencoders (VAEs) are generative models, akin to generative adversarial networks. They are described as a group because there are many types of VAEs.

We will go into much more detail about what that actually means in the remainder of the article.

Autoencoder Ae Encoder Decoder Primo Ai

A Gentle Introduction To Lstm Autoencoders

Intuitively Understanding Variational Autoencoders By Irhum Shafkat Towards Data Science

How To Create A Variational Autoencoder With Keras Machinecurve

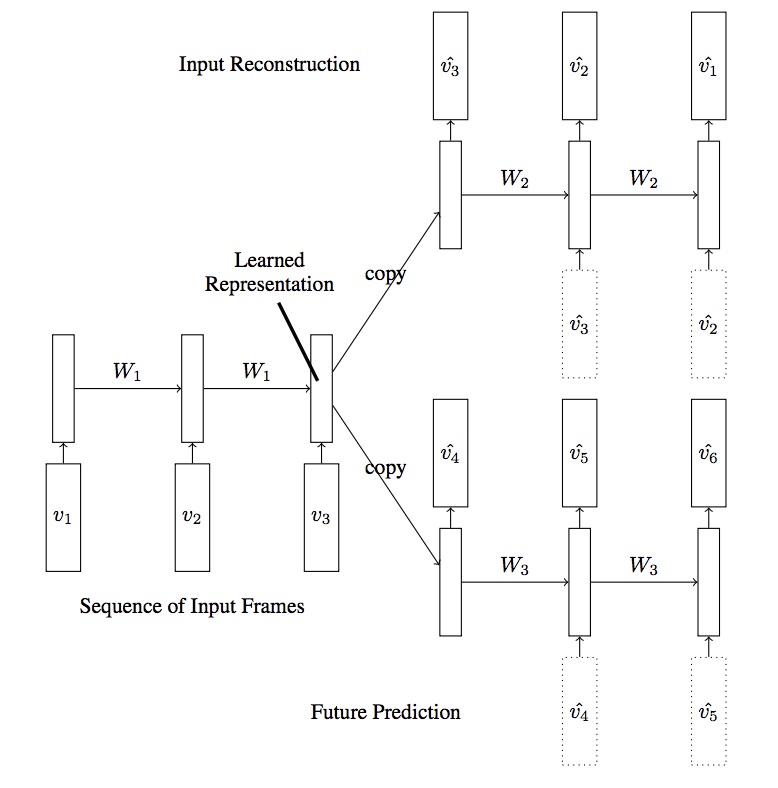

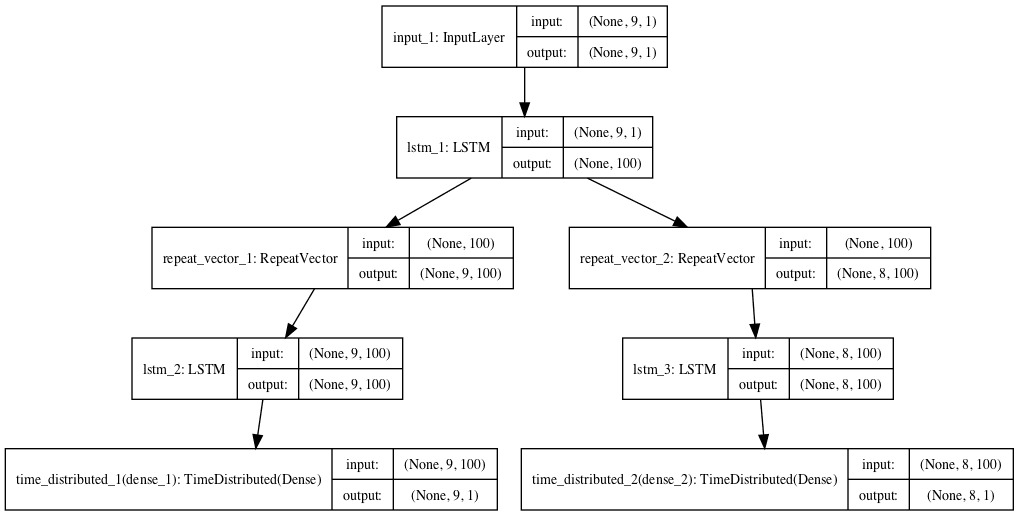

Day 5 Generative Adversarial Networks Autoencoders Recurrent Neural Networks Lstm Gru Sequence Learning Deep Learning With R

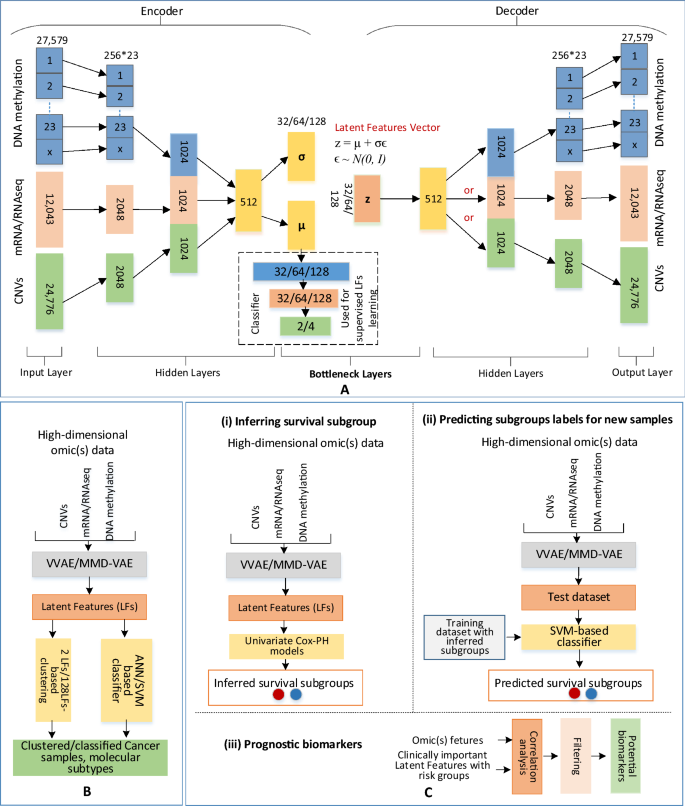

Integrated Multi Omics Analysis Of Ovarian Cancer Using Variational Autoencoders Scientific Reports
