Machine Learning Loss Unit

In machine learning, the term convolution is often shorthand for either the convolutional operation or a convolutional layer. In a neural network, the activation function transforms the summed weighted input to a node into that node's activation, or output, for that input.

A Neural Network Fully Coded In Numpy And Tensorflow Coding Matrix Multiplication Networking

Conveying what I learned in an easy-to-understand fashion is my priority.

An objective function is either a loss function, which is to be minimized, or its negative, in which case it is to be maximized. The rectified linear activation function, or ReLU for short, is a piecewise linear function that outputs the input directly if it is positive and outputs zero otherwise.
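To make the ReLU definition concrete, here is a minimal pure-Python sketch. The function name `relu` and the sample inputs are my own choices for illustration and are not taken from any particular library.

```python
def relu(x: float) -> float:
    """Return the input unchanged if it is positive, otherwise zero."""
    return x if x > 0 else 0.0


# Hypothetical sample inputs covering negative, zero, and positive values.
inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
outputs = [relu(x) for x in inputs]
print(outputs)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

Negative values and zero both map to zero, while positive values pass through untouched, which is exactly the piecewise linear shape described above.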

With some other loss formulation, the loss might be higher than 0.5 or 1. Balraj Ashwath, Jan 5. We met these properties of the activation function by multiplying the exponential linear unit (ELU) [7] by λ > 1 to ensure a slope larger than one for positive net inputs.
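The scaled ELU idea can be sketched in a few lines. The text only says that the scaling factor is larger than one; the two constants below are the values commonly quoted for the scaled exponential linear unit (SELU), and I am assuming them here for illustration.

```python
import math

# Assumed constants (commonly quoted SELU values); the text above only
# requires the scaling factor to be larger than one.
SELU_LAMBDA = 1.0507009873554805
SELU_ALPHA = 1.6732632423543772


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def selu(x: float) -> float:
    """ELU multiplied by a factor larger than one."""
    return SELU_LAMBDA * elu(x, SELU_ALPHA)


# On the positive branch the function is a straight line, so the difference
# between two points one unit apart equals its slope.
positive_slope = selu(2.0) - selu(1.0)
print(positive_slope)
print(selu(-1.0))
```

Because the positive branch of ELU has slope one, multiplying by the scaling factor gives a slope slightly above one, while negative inputs still saturate toward a fixed negative value.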

Machine Learning Glossary: an online glossary of terms for artificial intelligence, machine learning, computer vision, natural language processing, and statistics. Hence, there is no standard unit to measure a loss across all kinds of loss formulations. In mathematical optimization and decision theory, a loss function or cost function is a function that maps an event or values of one or more variables onto a real number intuitively representing some cost associated with the event.
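One way to see why a loss has no standard unit is to compute two common formulations on the same predictions. The sketch below uses made-up lengths, first in meters and then in centimeters; the function names and the data are assumptions for illustration only.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference; carries the same unit as the data."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_squared_error(actual, predicted):
    """Average squared difference; carries the squared unit of the data."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical lengths in meters.
actual_m = [1.0, 2.0, 4.0]
predicted_m = [1.5, 2.0, 3.0]

# The same lengths expressed in centimeters.
actual_cm = [100 * v for v in actual_m]
predicted_cm = [100 * v for v in predicted_m]

mae_m = mean_absolute_error(actual_m, predicted_m)
mse_m = mean_squared_error(actual_m, predicted_m)
mae_cm = mean_absolute_error(actual_cm, predicted_cm)
mse_cm = mean_squared_error(actual_cm, predicted_cm)

print(mae_m, mae_cm)  # the absolute error scales by 100
print(mse_m, mse_cm)  # the squared error scales by 100 * 100
```

The predictions are equally good in both cases, yet the numbers differ by a factor of 100 for one loss and 10,000 for the other, so a raw loss value is only meaningful relative to its formulation and the scale of the data.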

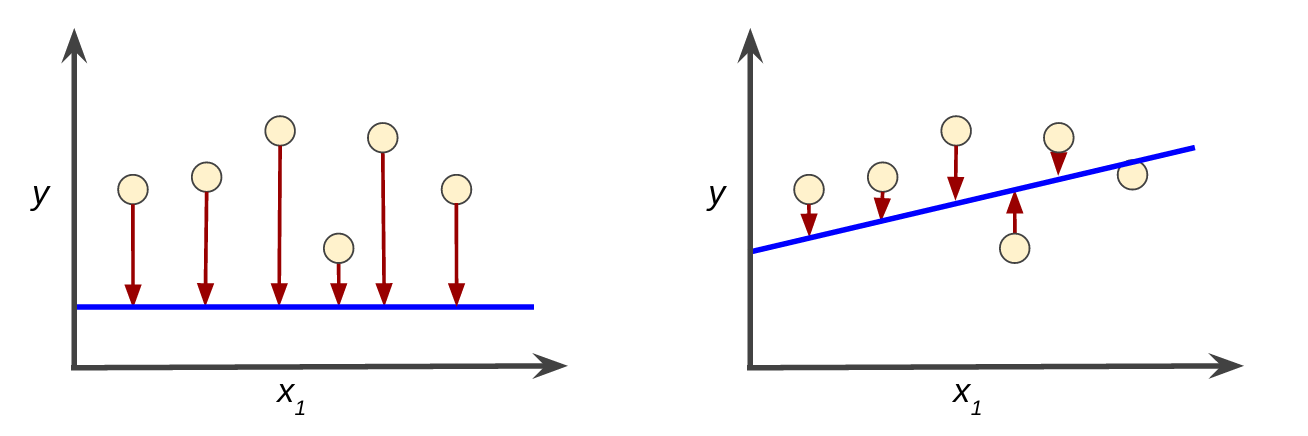

Overview of loss functions in machine learning: the loss function is defined as the difference between the actual output and the model's predicted output for a single training example, while the average of the loss function over all training examples is termed the cost function. In machine learning and mathematical optimization, loss functions for classification are computationally feasible loss functions representing the price paid for inaccuracy of predictions in classification problems. Hello, machine learning fellas! Recently, during this lockdown period, while revisiting the basic concepts of ML, I gained a better intuition and perspective on some very subtle concepts.
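The distinction between a per-example loss and the cost can be shown with a tiny sketch. I am assuming squared error as the measure of "difference" here; the function names and numbers are hypothetical.

```python
def squared_error(actual: float, predicted: float) -> float:
    """Loss for a single training example (squared error is an assumed choice)."""
    return (actual - predicted) ** 2


def cost(actuals, predictions) -> float:
    """Cost: the average of the per-example losses over the whole training set."""
    losses = [squared_error(a, p) for a, p in zip(actuals, predictions)]
    return sum(losses) / len(losses)


actuals = [2.0, 4.0, 6.0]
predictions = [2.5, 3.0, 6.0]

per_example = [squared_error(a, p) for a, p in zip(actuals, predictions)]
print(per_example)  # [0.25, 1.0, 0.0]
print(cost(actuals, predictions))
```

Each entry in `per_example` is a loss for one training example, and the single number returned by `cost` summarizes all of them.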

Machine learning is a pioneering subset of artificial intelligence in which machines learn by themselves using the available dataset. Without convolutions, a machine learning algorithm would have to learn a separate weight for every cell in a large tensor. The loss function (input vs. output) is calculated and backpropagation is performed, where the weights are adjusted to reduce the loss.
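The loop of computing a loss and then adjusting weights can be sketched for the simplest possible case: a model with one weight, `prediction = w * x`, trained on one example with squared error. With a single weight, the backward pass reduces to one derivative. The function name, learning rate, and data are assumptions for illustration.

```python
def train_single_weight(x, y, learning_rate=0.1, steps=20):
    """Fit prediction = w * x to one example with plain gradient descent."""
    w = 0.0
    losses = []
    for _ in range(steps):
        prediction = w * x              # forward pass
        error = prediction - y
        losses.append(error ** 2)       # squared-error loss for this step
        gradient = 2.0 * error * x      # d(loss)/dw via the chain rule
        w -= learning_rate * gradient   # move the weight against the gradient
    return w, losses


# Hypothetical example: the target relationship is y = 3 * x.
w, losses = train_single_weight(x=2.0, y=6.0)
print(w)
print(losses[0], losses[-1])
```

The first recorded loss is large because the weight starts at zero, and each update shrinks it as the weight approaches 3. A real network repeats the same idea for every weight, with backpropagation supplying the gradients layer by layer.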

Make a plot of loss and ... For the optimization of any machine learning ... In machine learning, we prefer the idea of minimizing cost/loss functions, so we often define the cost function as the negative of the average log-likelihood.
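As a rough sketch of a cost defined as the negative of the average log-likelihood, here is the binary case, where each label is 0 or 1 and the model outputs a probability for label 1. The function name, labels, and probabilities below are made up for illustration.

```python
import math


def negative_average_log_likelihood(labels, probabilities):
    """Binary case: labels are 0 or 1, probabilities are the model's P(label = 1)."""
    total = 0.0
    for y, p in zip(labels, probabilities):
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(labels)


# Hypothetical labels and predicted probabilities for five examples.
labels = [1, 0, 1, 1, 0]
confident = [0.9, 0.2, 0.7, 0.6, 0.1]
uninformed = [0.5, 0.5, 0.5, 0.5, 0.5]

cost_confident = negative_average_log_likelihood(labels, confident)
cost_uninformed = negative_average_log_likelihood(labels, uninformed)
print(cost_confident)
print(cost_uninformed)
```

Maximizing the likelihood and minimizing this cost pick out the same model; flipping the sign simply turns the problem into a minimization. The mostly correct, confident predictions get a lower cost than the uninformed ones.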

In a project, if real outcomes deviate from the projections, the loss function will cough up a very large number. This is my machine learning journey from scratch. This process is called empirical risk minimization.

Given $\mathcal{X}$ as the space of all possible inputs and $\mathcal{Y} = \{-1, 1\}$ as the set of labels, a typical goal of classification algorithms is to ... A loss function is a method of determining how well the particular algorithm models the given data. Like traditional machine learning algorithms, here too ...
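For labels drawn from the set {-1, 1}, one simple way to score a classifier is the zero-one loss, which charges 1 for every wrong label and 0 for every correct one. This is only one possible choice and is assumed here for illustration; the scores and helper names are hypothetical.

```python
def predict_label(score: float) -> int:
    """Turn a real-valued score into a label in {-1, 1} (ties go to 1)."""
    return 1 if score >= 0 else -1


def zero_one_loss(label: int, prediction: int) -> int:
    """Charge 1 for a wrong label and 0 for a correct one."""
    return 0 if label == prediction else 1


# Hypothetical true labels and raw classifier scores.
labels = [1, -1, 1, -1]
scores = [0.8, -0.3, -0.1, 0.4]

predictions = [predict_label(s) for s in scores]
losses = [zero_one_loss(y, p) for y, p in zip(labels, predictions)]
error_rate = sum(losses) / len(losses)

print(predictions)  # [1, -1, -1, 1]
print(losses)       # [0, 0, 1, 1]
print(error_rate)   # 0.5
```

The average of the zero-one losses is just the fraction of misclassified examples, which is one direct way of measuring how well an algorithm models the given data.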

Introduction: machines learn by means of a loss function. In statistics, typically a loss ...

ReLU stands for rectified linear unit and is used as the default activation function nowadays, especially in CNNs, where it has shown strong performance. Cost function $= \operatorname{avg}\, l(w_0, w_1) = \frac{1}{5} \sum l(w_0, w_1) = -\frac{1}{5}\left[ y_0 \log p_0 + (1 - y_0) \log(1 - p_0) + \dots \right]$. For example, a machine learning algorithm training on 2K x 2K images would be forced to find 4M separate weights.
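To put numbers on the 2K x 2K example, the quick arithmetic below compares one weight per image cell against a single shared convolutional filter. I am reading "2K" as 2000 and assuming a 3 x 3 filter with no bias term; both are illustrative assumptions.

```python
# "2K" is read as 2000 here (an assumption; it could also mean 2048).
image_height = 2000
image_width = 2000

# Without convolutions: one separate weight for every cell of the image.
weights_without_convolution = image_height * image_width

# With a convolution: one small filter is reused at every position.
kernel_size = 3  # hypothetical 3 x 3 filter, bias ignored
weights_with_convolution = kernel_size * kernel_size

print(weights_without_convolution)  # 4000000
print(weights_with_convolution)     # 9
```

The filter is slid across the whole image, so its handful of weights is shared by every location instead of being learned separately per cell.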

In supervised learning, a machine learning algorithm builds a model by examining many examples and attempting to find a model that minimizes loss. An optimization problem seeks to minimize a loss function.
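A toy version of "finding a model that minimizes loss" is to try a handful of candidate models and keep the one with the smallest loss. In this sketch each candidate is a slope `w` for the model `y = w * x`, the loss is mean squared error, and the data and candidate list are made up for illustration.

```python
# Hypothetical training examples that follow y = 2 * x exactly.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

# Candidate slopes to try (a coarse grid, chosen only for illustration).
candidates = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]


def loss(w: float) -> float:
    """Mean squared error of the model y = w * x on the training examples."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Minimizing the loss and maximizing its negative select the same model.
best_by_minimizing = min(candidates, key=loss)
best_by_maximizing = max(candidates, key=lambda w: -loss(w))

print(best_by_minimizing, loss(best_by_minimizing))
print(best_by_maximizing)
```

Real training replaces the exhaustive list of candidates with an optimizer such as gradient descent, but the goal is the same: the model with the lowest loss on the examples wins.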


Estimating Or Propagating Gradients Through Stochastic Neurons For Conditional Computation Neurons Deep Learning This Or That Questions

Loss Function Loss Function In Machine Learning

How To Interpret Loss And Accuracy For A Machine Learning Model Stack Overflow

Unit Testing Features Of Machine Learning Models Machine Learning Machine Learning Models Data Analytics

Google Introduces Rigl Algorithm For Training Sparse Neural Networks Artificialintelligence Machinelearning Algorithm Artificial Neural Network Deep Learning

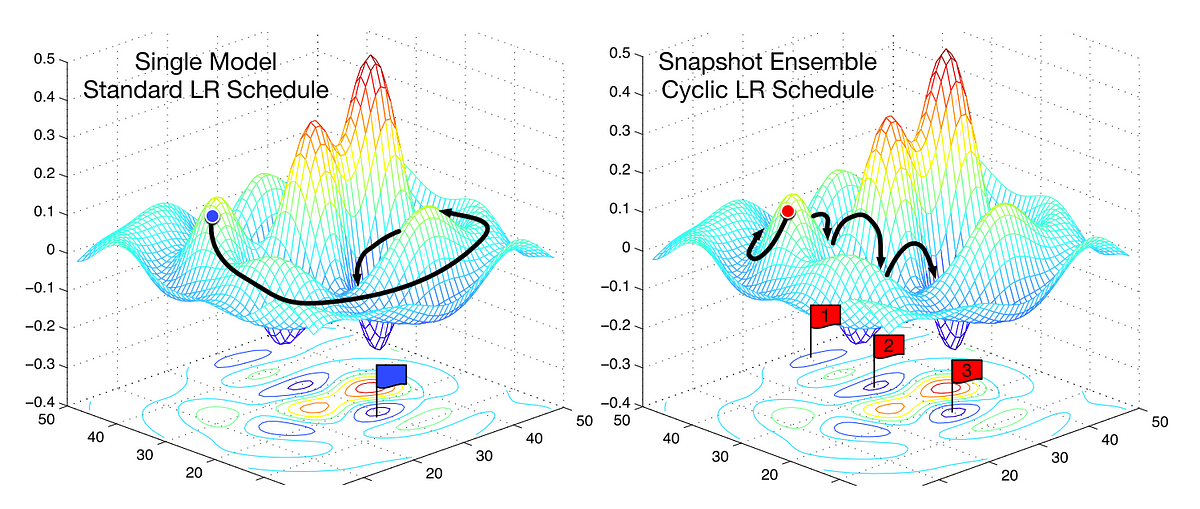

Understanding Learning Rates And How It Improves Performance In Deep Learning By Hafidz Zulkifli Towards Data Science

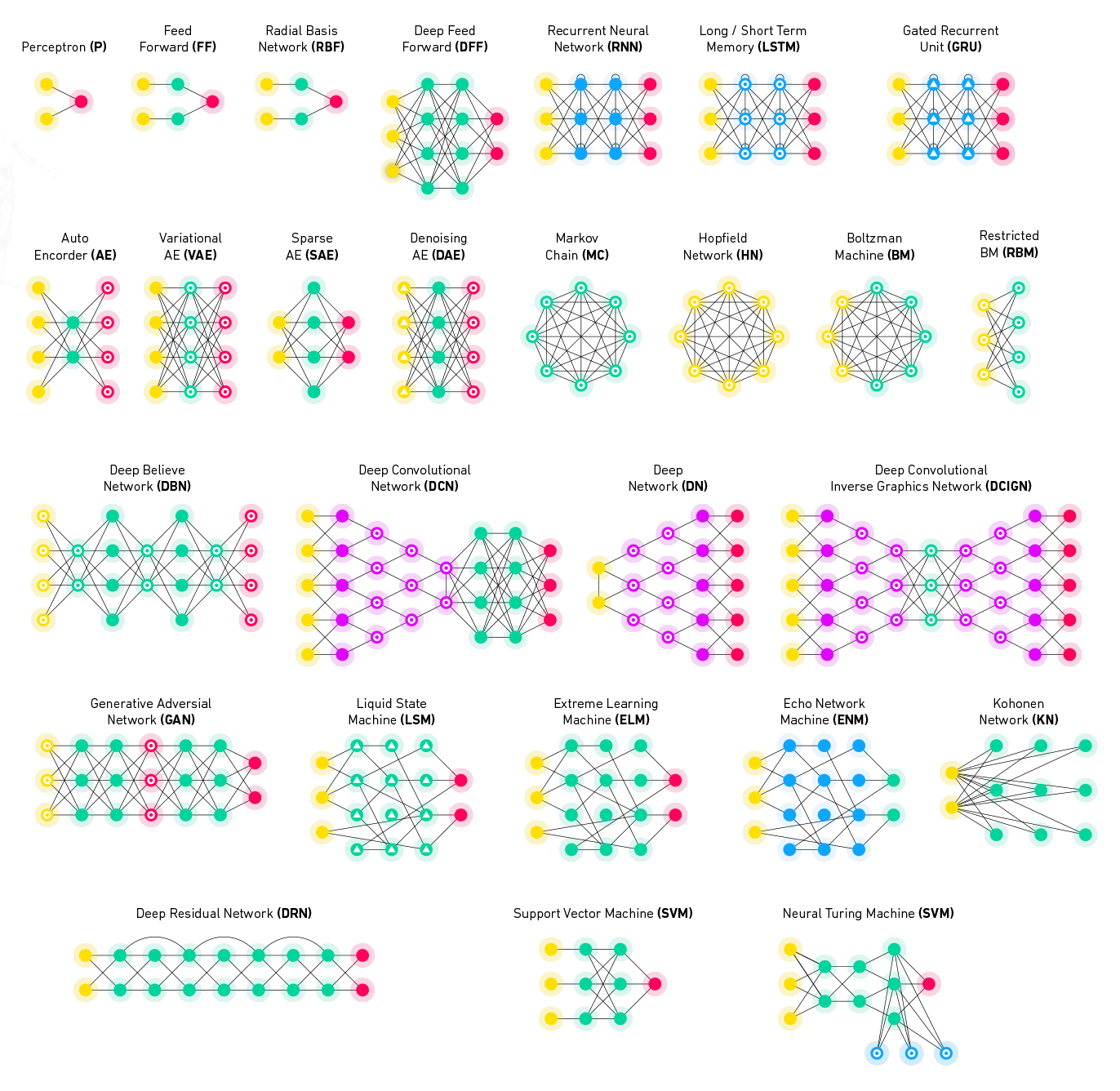

Machine Learning Models Explained Ai Wiki

10 Machine Learning Methods That Every Data Scientist Should Know By Jorge Castanon Towards Data Science

Gradient Descent In Machine Learning Machine Learning Machine Learning Deep Learning Deep Learning

Gabriel Maher Machine Learning Pie Chart Learning

Openai S Microscope Project Visualizes Popular Machine Learning Models At The Neuron Level To Enable Advances Machine Learning Models Machine Learning Neurons

Log Analytics Tools And Automating With Deep Learning Xenonstack

Keras Tuner Lessons Learned From Tuning Hyperparameters Of A Real Life Deep Learning Model Nept In 2021 Deep Learning Machine Learning Deep Learning Lessons Learned

Descending Into Ml Training And Loss Machine Learning Crash Course

Deep Dive Into Google Drive Standolone Sku Data Loss Prevention Stock Keeping Unit Machine Learning

Backpropagation Explained Machine Learning Deep Learning Deep Learning Machine Learning

Loss Function Machine Learning Deep Learning Mobile Application Development
