Entropy Machine Learning Wiki

For a cross-entropy loss, the true probability is the true label, and the given distribution is the predicted value of the current model. Minimize the cross-entropy between this distribution and a.

Information Entropy Video Khan Academy

The parameters are as follows.

Machine learning is one of the most in-demand technologies to learn, and many companies look for highly skilled machine learning engineers. Advances in the field of machine learning (algorithms that adjust themselves when exposed to data) are driving progress more widely in AI. The cross-entropy method approximates the optimal importance sampling estimator by repeating two phases.

Log loss and cross-entropy are slightly different depending on the context, but in machine learning, when calculating error rates between 0 and 1, they resolve to the same thing. As a demonstration, where p and q are the sets $p \in \{y, 1-y\}$ and $q \in \{\hat{y}, 1-\hat{y}\}$, we can rewrite cross-entropy as

$$H(p, q) = -\sum_i p_i \log q_i = -y \log \hat{y} - (1-y) \log(1-\hat{y}).$$

Machine learning is a computer science domain that gives computers the ability to learn without being explicitly programmed.
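The equivalence between log loss and binary cross-entropy described above can be sketched in a few lines of Python. This is a minimal illustration, not a library implementation: the function names and the clipping constant `eps` are assumptions introduced here, and the natural logarithm is used, so results are in nats.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Cross-entropy between p = (y, 1 - y) and q = (y_hat, 1 - y_hat), in nats."""
    # Clip the prediction away from 0 and 1 so log() stays finite
    # (eps is an assumed value chosen for this sketch).
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -(y * math.log(y_hat) + (1.0 - y) * math.log(1.0 - y_hat))

def log_loss(labels, predictions):
    """Mean binary cross-entropy over a batch of (label, prediction) pairs."""
    losses = [binary_cross_entropy(y, p) for y, p in zip(labels, predictions)]
    return sum(losses) / len(losses)

print(binary_cross_entropy(1, 0.9))  # confident and correct: small loss
print(binary_cross_entropy(1, 0.1))  # confident and wrong: large loss
print(log_loss([1, 0, 1], [0.9, 0.2, 0.6]))
```

For a true label of 1 the second term vanishes and the loss reduces to the negative log of the predicted probability, which is why a confident wrong prediction is penalized so heavily.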

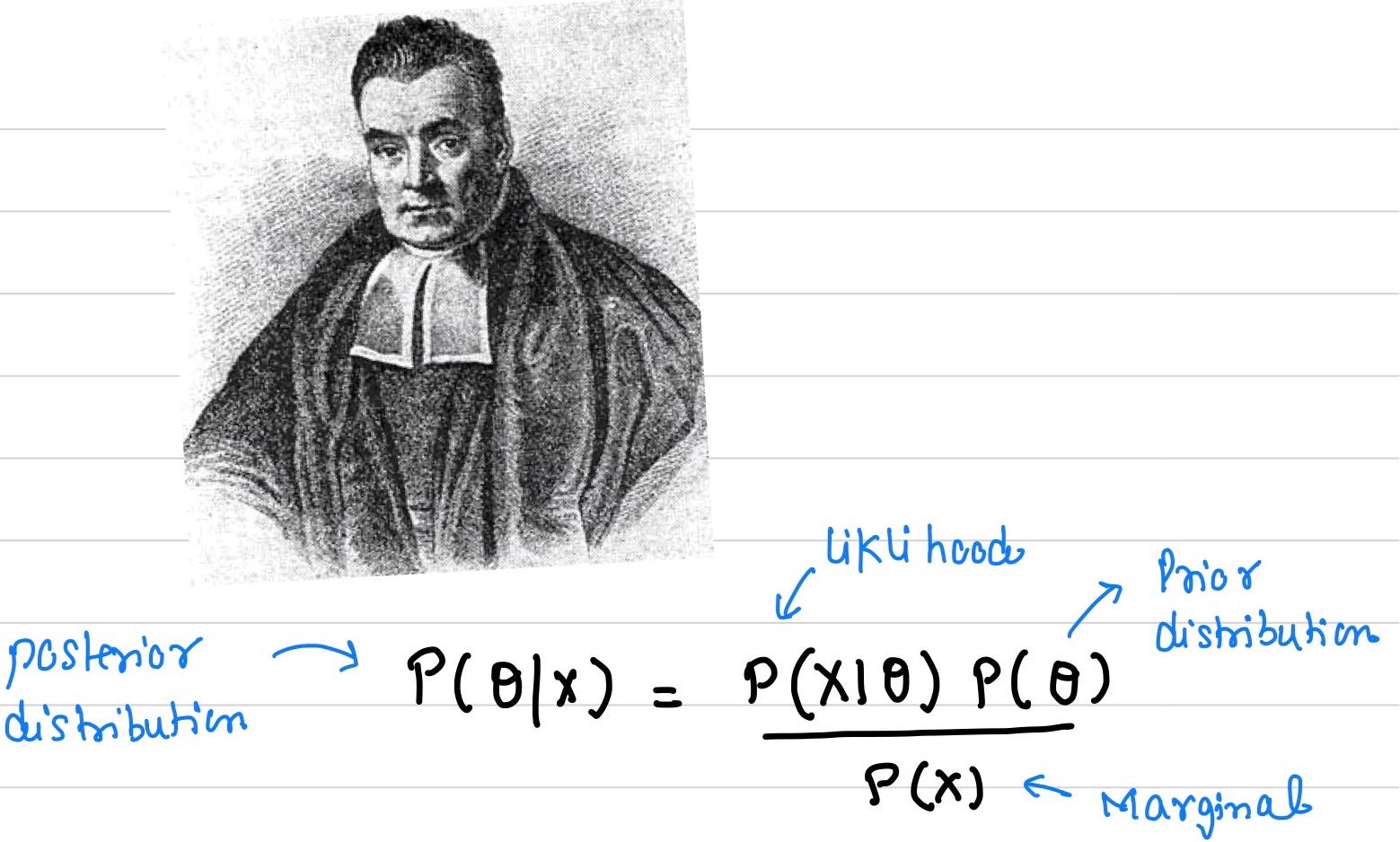

Cross-entropy is commonly used to define a loss function in machine learning and optimization. The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with the largest entropy, in the context of precisely stated prior data (such as a proposition that expresses testable information).
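As a small illustration of the maximum entropy principle, the sketch below compares a few candidate distributions over four outcomes when nothing is known beyond the fact that the probabilities sum to one. The candidate distributions and the function name are hypothetical, chosen only for this example; with real testable information, the set of candidates would be restricted by those constraints.

```python
import math

def shannon_entropy(p):
    """Shannon entropy of a discrete distribution p, in bits."""
    # Terms with zero probability contribute nothing to the sum.
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

# Hypothetical candidates; each one is a valid distribution over four outcomes.
candidates = {
    "uniform": [0.25, 0.25, 0.25, 0.25],
    "skewed": [0.7, 0.1, 0.1, 0.1],
    "certain": [1.0, 0.0, 0.0, 0.0],
}

for name, dist in candidates.items():
    print(name, round(shannon_entropy(dist), 3))

# With no further constraints, the maximum entropy choice is the uniform one.
most_uncertain = max(candidates, key=lambda name: shannon_entropy(candidates[name]))
print(most_uncertain)
```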

The cross-entropy method is applicable to both combinatorial and continuous problems, with either a static or noisy objective. Another way of stating the principle of maximum entropy: take precisely stated prior data or testable information about a probability.

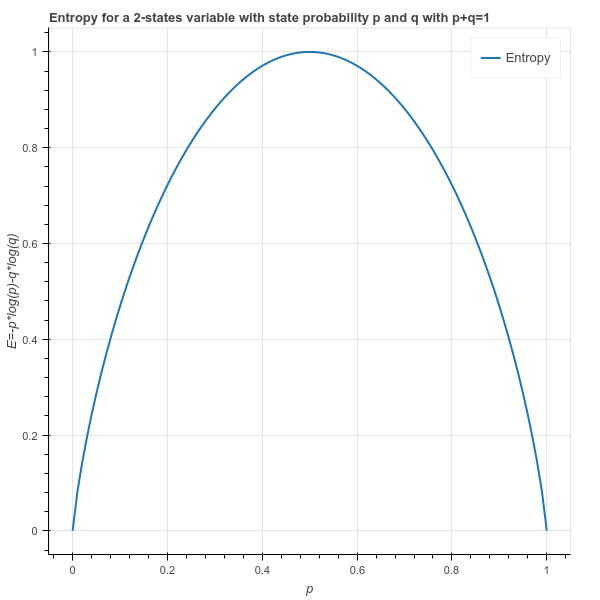

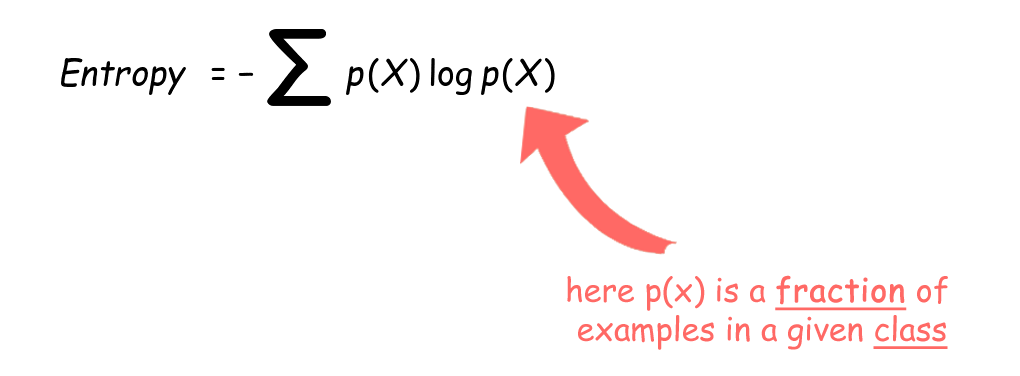

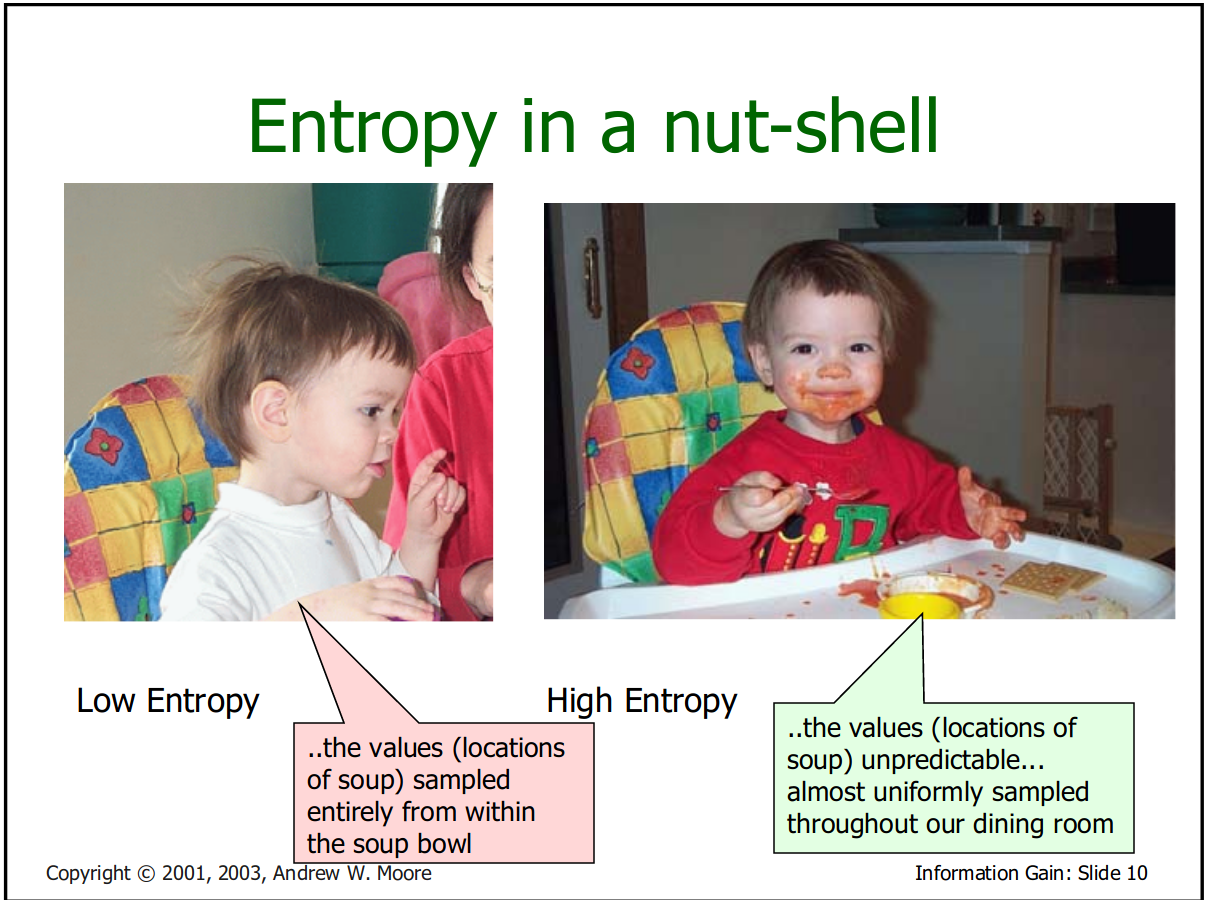

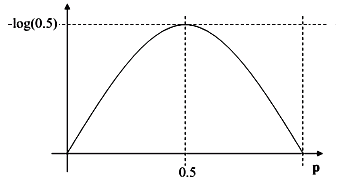

In the context of machine learning, cross-entropy is a measure of error for categorical multi-class classification problems. In short, entropy measures the amount of surprise or uncertainty inherent to a random variable. Pathmind's artificial intelligence wiki is a beginner's guide to important topics in AI, machine learning, and deep learning.
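For the multi-class case, a minimal sketch of the error measure might look like the following. It assumes a one-hot encoded true label and a predicted probability vector; the function name, the example vectors, and the clipping constant `eps` are illustrative assumptions.

```python
import math

def categorical_cross_entropy(one_hot, predicted, eps=1e-12):
    """Cross-entropy (in nats) between a one-hot label and predicted class probabilities."""
    # Only the true class has weight 1, so the sum reduces to -log(q_true).
    # max(q, eps) guards against log(0); eps is an assumed value.
    return -sum(t * math.log(max(q, eps)) for t, q in zip(one_hot, predicted))

label = [0, 1, 0]                 # the true class is index 1
good_guess = [0.1, 0.8, 0.1]      # most mass on the true class
bad_guess = [0.6, 0.2, 0.2]       # most mass on a wrong class

print(categorical_cross_entropy(label, good_guess))
print(categorical_cross_entropy(label, bad_guess))
```

The loss is the "surprise" of seeing the true class under the model's prediction: the less probability the model assigned to what actually happened, the larger the value.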

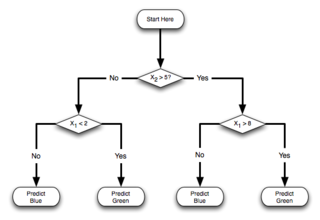

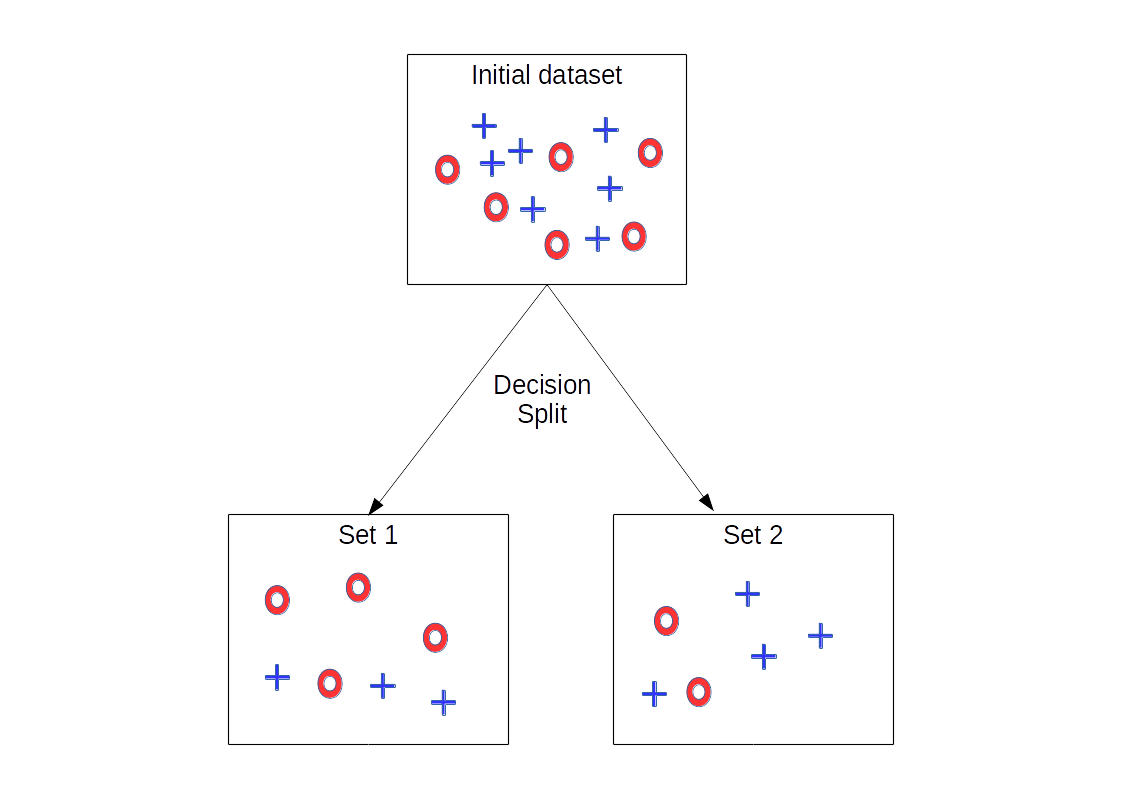

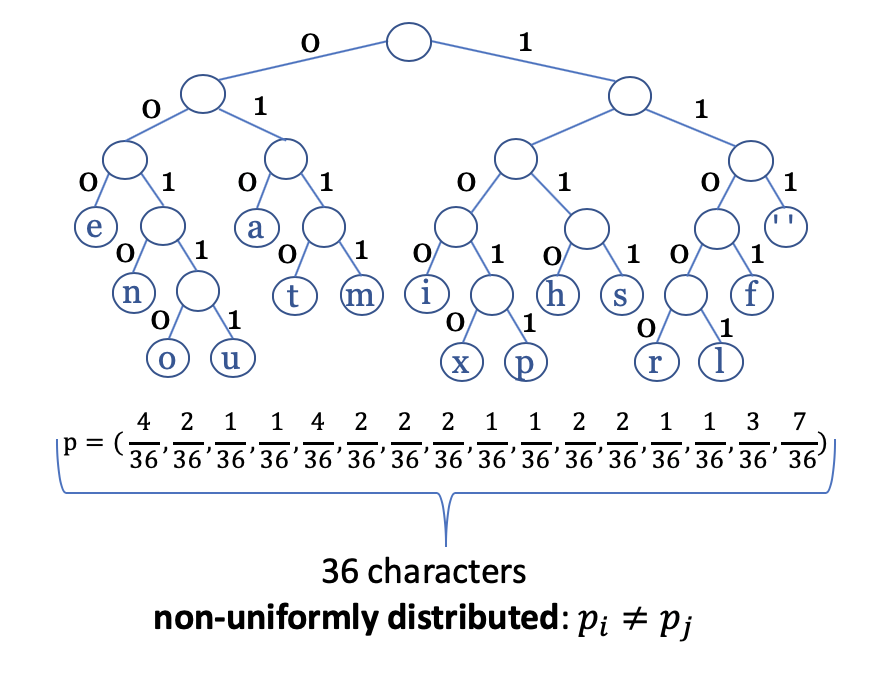

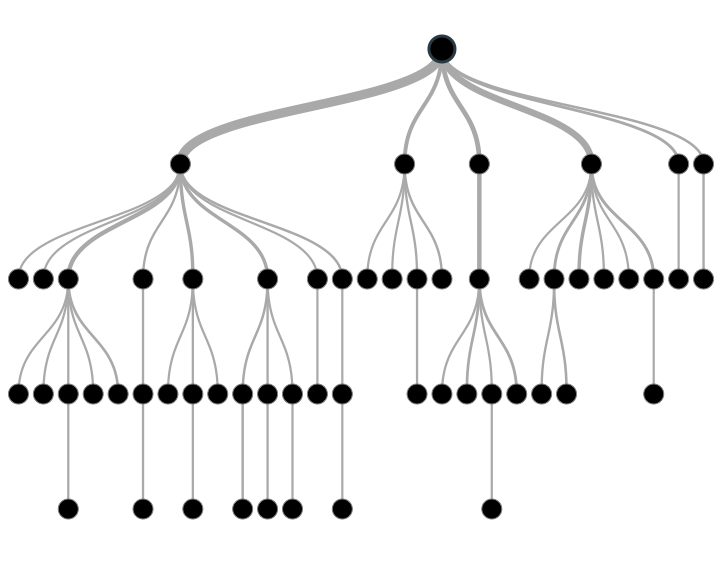

Draw a sample from a probability distribution. Formally, if a random variable X is distributed according to a. From the construction of decision trees to the training of deep neural networks, entropy is an essential measurement in machine learning.
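In decision tree construction, entropy is typically used through information gain: the drop in label entropy produced by a candidate split. The sketch below is a hedged illustration with made-up "yes"/"no" labels; the function names are assumptions for this example and do not come from any particular library.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Entropy in bits of the class labels at a tree node."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Parent entropy minus the size-weighted entropy of the two child nodes."""
    n = len(parent)
    weighted = (len(left) / n) * label_entropy(left) + (len(right) / n) * label_entropy(right)
    return label_entropy(parent) - weighted

# Hypothetical node with a 50/50 class mix.
parent = ["yes"] * 5 + ["no"] * 5

# A split that separates the classes completely removes all uncertainty.
perfect = information_gain(parent, ["yes"] * 5, ["no"] * 5)

# A split whose children keep the same 50/50 mix removes none.
useless = information_gain(parent, ["yes", "yes", "no", "no"], ["yes"] * 3 + ["no"] * 3)

print(perfect, useless)
```

A tree learner would evaluate many candidate splits this way and pick the one with the highest gain.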

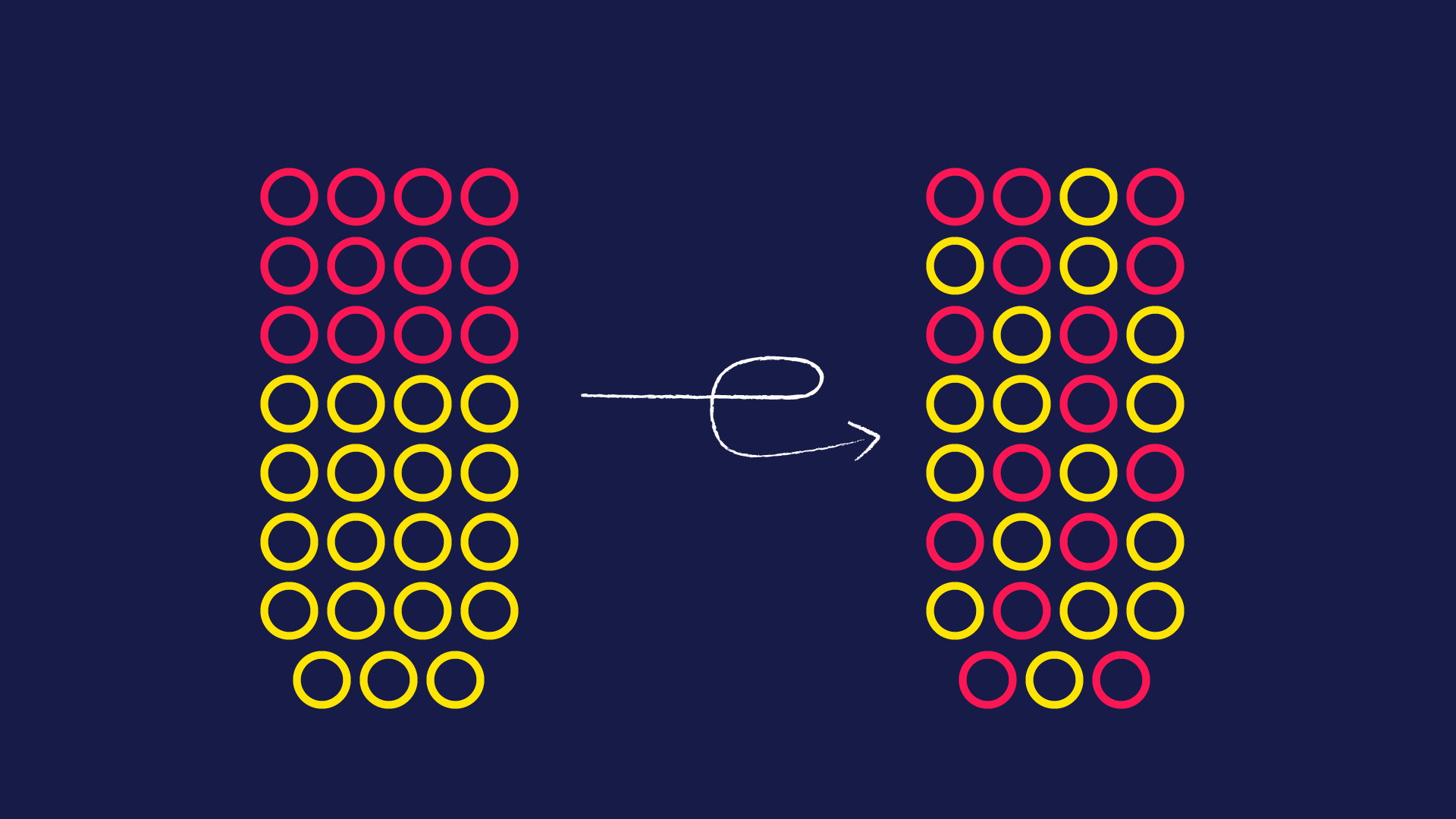

Cross-entropy loss function and logistic regression. The Golden Measurement of Machine Learning: From Decision Trees to Neural Networks. Cross-entropy is a measure from the field of information theory, building upon entropy and generally calculating the difference between two probability distributions.

`def entropy_ml(X, ClassifierClass, n_splits=5, verbose=True, compare=0, compare_method="grassberger", base2=True, eps=1e-8, **kwargs)`, which uses a generic classifier class with a scikit-learn-like API. The goal is to give readers an intuition for how powerful new algorithms work and how they are used, along with code examples where possible. The cross-entropy method is a Monte Carlo method for importance sampling and optimization.
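A simplified sketch of the cross-entropy method used for optimization is shown below. It assumes a one-dimensional Gaussian sampling distribution and a toy objective; the function name, default parameter values, and the small constant added to the standard deviation are all assumptions made for this illustration. For a Gaussian family, the cross-entropy minimization step reduces to refitting the mean and standard deviation to the best-scoring samples.

```python
import random
import statistics

def cross_entropy_method(objective, mean=0.0, std=5.0,
                         n_samples=100, n_elite=10, n_iters=30, seed=0):
    """Maximize `objective` over the reals with a Gaussian sampling distribution."""
    rng = random.Random(seed)
    for _ in range(n_iters):
        # Phase 1: draw a sample from the current distribution.
        samples = [rng.gauss(mean, std) for _ in range(n_samples)]
        # Phase 2: refit the distribution to the elite (best-scoring) samples,
        # which yields a better sample in the next iteration.
        elite = sorted(samples, key=objective, reverse=True)[:n_elite]
        mean = statistics.mean(elite)
        # The small constant keeps the distribution from collapsing to zero width.
        std = statistics.pstdev(elite) + 1e-6
    return mean

# Toy objective with its maximum at x = 2 (chosen only for illustration).
best = cross_entropy_method(lambda x: -(x - 2.0) ** 2)
print(best)
```

The same two-phase loop carries over to combinatorial problems by swapping the Gaussian for a suitable discrete distribution, for example independent Bernoulli variables over bit strings.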

Cross-entropy is commonly used to quantify the difference between two probability distributions. It appears everywhere in machine learning.
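One way to see how cross-entropy builds on entropy is the identity H(p, q) = H(p) + KL(p || q): the cross-entropy equals the entropy of the true distribution plus the Kullback-Leibler divergence from p to q. The sketch below checks this numerically for two made-up distributions; the function names and example values are assumptions, and q is assumed to be strictly positive wherever p is.

```python
import math

def entropy_bits(p):
    """Shannon entropy H(p) in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy_bits(p, q):
    """Cross-entropy H(p, q) in bits; assumes q_i > 0 wherever p_i > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence_bits(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.25, 0.25]   # hypothetical "true" distribution
q = [0.25, 0.25, 0.5]   # hypothetical model distribution

print(cross_entropy_bits(p, q))
print(entropy_bits(p) + kl_divergence_bits(p, q))
```

Because H(p) does not depend on the model, minimizing cross-entropy with respect to q is equivalent to minimizing the KL divergence, and the minimum is reached when q matches p.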

File Entropy Guided Transformation Learning Jpg Wikipedia

Entropy Regularization Explained Papers With Code

Lecture 4 Decision Trees 2 Entropy Information Gain Gain Ratio

Cis520 Machine Learning Lectures Decisiontrees

A Simple Explanation Of Entropy In Decision Trees Benjamin Ricaud Data Networks Learning

Classification Loss Cross Entropy By Eric Ngo Analytics Vidhya Medium

What Is Entropy And Why Information Gain Matter In Decision Trees By Nasir Islam Sujan Coinmonks Medium

Decision Trees For Machine Learning

Decision Tree Classifier Entropy Youtube

The Answer Is 42 With A Pinch Of Salt Information Entropy By Pradeep Medium

But What Is Entropy Gain Intuitive And Mathematical By Kapil Sachdeva Towards Data Science
