Hyperparameter Tuning Methods In Machine Learning

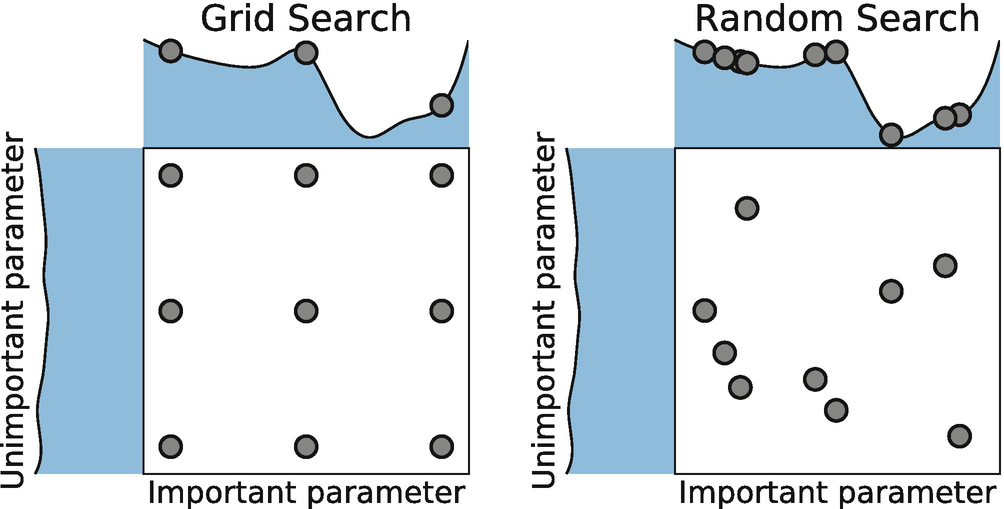

An underfitting model is not powerful enough to capture the underlying complexity of the data distribution.

Underfitting occurs when the machine learning model is unable to reduce the error on either the training set or the test set.

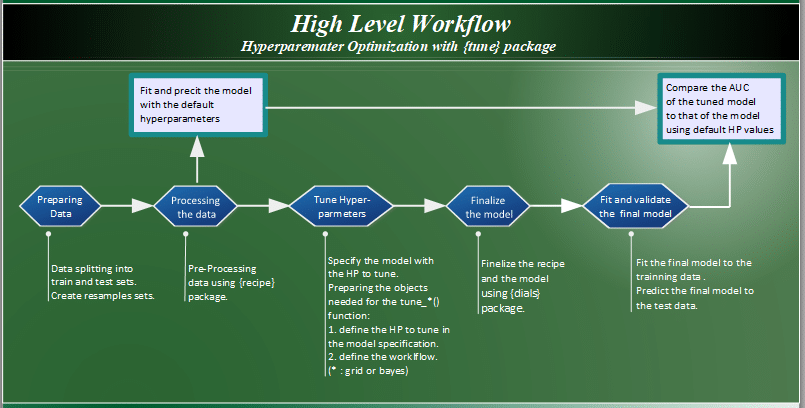

Selecting the right machine learning model and the corresponding set of hyperparameters is essential to training a robust model. The first step is to define the search space: hyperparameters are tuned by exploring the range of values defined for each one.

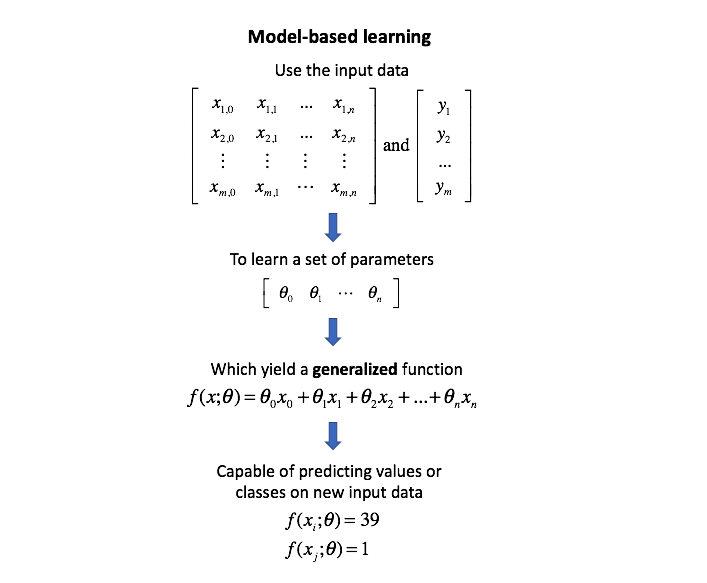

Every machine learning model has its own hyperparameters that can be set. Hyperparameters are different from parameters, which are the internal coefficients or weights of a model found by the learning algorithm.
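The distinction can be made concrete with a minimal sketch. It assumes a one-feature linear model trained by gradient descent; the function name `train_linear_model` and the toy data are illustrative and not taken from any library. The learning rate and the number of epochs are hyperparameters chosen before training, while `w` and `b` are parameters found by the learning algorithm.

```python
def train_linear_model(xs, ys, learning_rate, n_epochs):
    """Fit y ~ w * x + b by batch gradient descent on mean squared error.

    learning_rate and n_epochs are hyperparameters (set by the user).
    w and b are parameters (learned from the data).
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_model(xs, ys, learning_rate=0.05, n_epochs=2000)
print(f"learned parameters: w={w:.3f}, b={b:.3f}")
```

Changing `learning_rate` or `n_epochs` changes how well, and how quickly, the same algorithm recovers `w` and `b`; that is the sense in which hyperparameters control the learning process.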

Examples of hyperparameters in the Random Forest algorithm are the number of estimators (n_estimators), the maximum depth (max_depth), and the split criterion (criterion). The end outcome can be fewer evaluations of the objective function and better generalization performance.
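A search space over the Random Forest hyperparameters named above could be written down as a plain dictionary and expanded into every combination. This is only a sketch: the candidate values are assumptions chosen for illustration, and `enumerate_grid` is a hypothetical helper, not a library function.

```python
from itertools import product

# Candidate values per hyperparameter (illustrative assumptions).
search_space = {
    "n_estimators": [100, 200, 500],
    "max_depth": [5, 10, None],  # None meaning "no depth limit"
    "criterion": ["gini", "entropy"],
}


def enumerate_grid(space):
    """Return one dict per combination of values in the search space."""
    names = list(space)
    value_lists = [space[name] for name in names]
    return [dict(zip(names, values)) for values in product(*value_lists)]


candidates = enumerate_grid(search_space)
print(len(candidates))  # 3 * 3 * 2 combinations
print(candidates[0])
```

The number of combinations is the product of the list lengths, which is why an exhaustive grid becomes expensive as more hyperparameters are added.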

In Azure Machine Learning, Tune Model Hyperparameters can only be connected to built-in machine learning algorithm modules and does not support customized models built in Create Python Model. The performance of a machine learning model can improve with hyperparameter tuning.

Hyperparameter tuning is crucial because hyperparameters control the overall behavior of a machine learning model. While tuning is an important step in modeling, it is by no means the only way to improve performance. Machine learning algorithms have hyperparameters that allow you to tailor the behavior of the algorithm to your specific dataset.

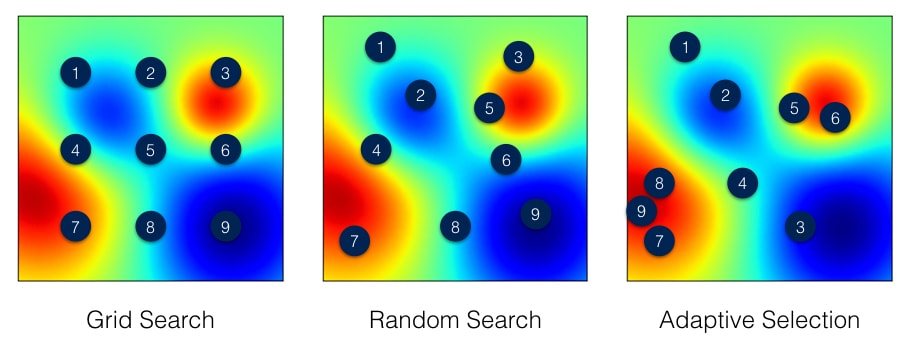

In short, hyperparameters are values that are used to control the learning process and that have a significant effect on the performance of machine learning models. Azure Machine Learning lets you automate hyperparameter tuning and run experiments in parallel to optimize hyperparameters efficiently. This article covers the comparison and implementation of random search, grid search, and Bayesian optimization using the scikit-learn and HyperOpt libraries for hyperparameter tuning of a machine learning model.

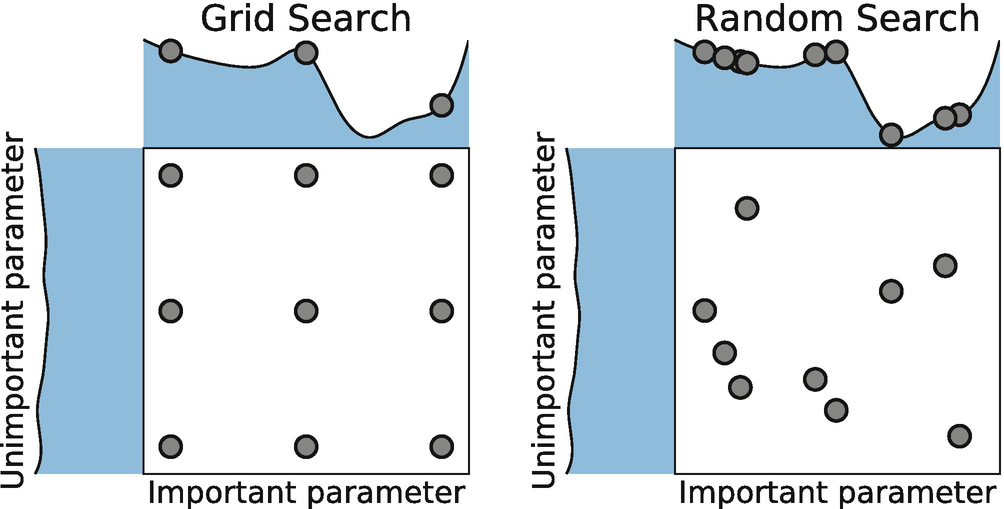

Hyperparameter tuning is an important part of the machine learning pipeline; the most common implementations use a grid search, randomized or not. The outcome of hyperparameter tuning is the best hyperparameter setting, and the outcome of model training is the best model parameter setting.

Hyperparameter tuning is a meta-optimization task. I hope this article helps you use the grid search functionality available in Python's scikit-learn library for hyperparameter tuning. In contrast to random search, Bayesian optimization chooses the next hyperparameters in an informed way, so that more time is spent evaluating promising values.
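Random search, the uninformed baseline in that comparison, can be sketched with the standard library alone. The `objective` function below is a hypothetical stand-in for a validation loss, and the hyperparameter names and ranges are assumptions made for illustration. This is not Bayesian optimization: every trial is sampled independently of the previous ones.

```python
import math
import random


def objective(learning_rate, max_depth):
    """Hypothetical validation loss; lower is better.

    Smallest near learning_rate = 0.1 and max_depth = 6.
    """
    return (math.log10(learning_rate) + 1) ** 2 + 0.05 * (max_depth - 6) ** 2


def random_search(n_trials, seed=0):
    """Sample configurations independently and keep the best one seen."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = {
            # Log-uniform sample between 1e-4 and 1.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            "max_depth": rng.randint(2, 12),
        }
        loss = objective(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


best_config, best_loss = random_search(n_trials=50)
print(best_config, best_loss)
```

An informed method would replace the independent sampling step with a proposal that depends on the losses observed so far, which is where libraries such as HyperOpt come in.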

As Figure 4-1 shows, each trial of a particular hyperparameter setting involves training a model, which is an inner optimization process.
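The nesting of the two optimizations can be sketched as follows. The example assumes a one-feature ridge regression without intercept, whose inner training step has a closed-form solution; the data, the candidate values for `lam`, and the helper names are illustrative assumptions.

```python
def fit_ridge(xs, ys, lam):
    """Inner optimization: minimize sum((w*x - y)^2) + lam * w^2 over w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy training and validation sets (illustrative only).
train_x, train_y = [1.0, 2.0, 3.0, 4.0], [2.5, 4.4, 6.5, 8.6]
val_x, val_y = [5.0, 6.0], [10.1, 11.9]

results = []
for lam in [0.0, 0.1, 1.0, 10.0, 100.0]:  # outer loop: one trial per setting
    w = fit_ridge(train_x, train_y, lam)   # inner loop: train the model
    results.append((mse(w, val_x, val_y), lam, w))

# Outcome of tuning: best hyperparameter. Outcome of training: best parameter.
best_val_mse, best_lam, best_w = min(results)
print(f"best lam={best_lam}, learned w={best_w:.4f}, val mse={best_val_mse:.4f}")
```

The outer loop never touches `w` directly; it only chooses `lam` and judges the result on held-out data, while the inner step finds the best `w` for that choice.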

The hyperparameter tuning process is a tightrope walk to achieve a balance between underfitting and overfitting. Hyperparameters are the parameters that the model cannot learn and that need to be provided before training. Add the dataset that you want to use for training and connect it to the middle input of Tune Model Hyperparameters.
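The balance between underfitting and overfitting can be illustrated with a small k-nearest-neighbours classifier written from scratch, where `k` is the hyperparameter. This is a sketch under stated assumptions: the synthetic data, the 20% label noise, and the helper names are all invented for illustration.

```python
import random


def knn_predict(train, x, k):
    """Majority vote among the k training points nearest to x (1-D inputs)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0


def error_rate(train, data, k):
    """Fraction of points in data that the classifier gets wrong."""
    return sum(knn_predict(train, x, k) != y for x, y in data) / len(data)


def make_data(n, rng):
    """Label is 1 when x > 0.5, with 20% of labels flipped as noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < 0.2:
            label = 1 - label
        data.append((x, label))
    return data


rng = random.Random(0)
train, val = make_data(200, rng), make_data(200, rng)

# k=1 memorizes the training set; k=len(train) ignores the input entirely.
for k in [1, 15, len(train)]:
    print(k, error_rate(train, train, k), error_rate(train, val, k))
```

With `k=1` every training point is its own nearest neighbour, so the training error is zero even though the labels are noisy, which is the overfitting end of the scale. With `k` equal to the training set size, every prediction is the same majority class, which is the underfitting end. Tuning looks for a value in between that does well on the validation set.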

Automated hyperparameter tuning of machine learning models can be accomplished using Bayesian optimization. It can also be used for more complex scenarios, such as clustering with predefined cluster sizes or varying the epsilon value for optimizations.


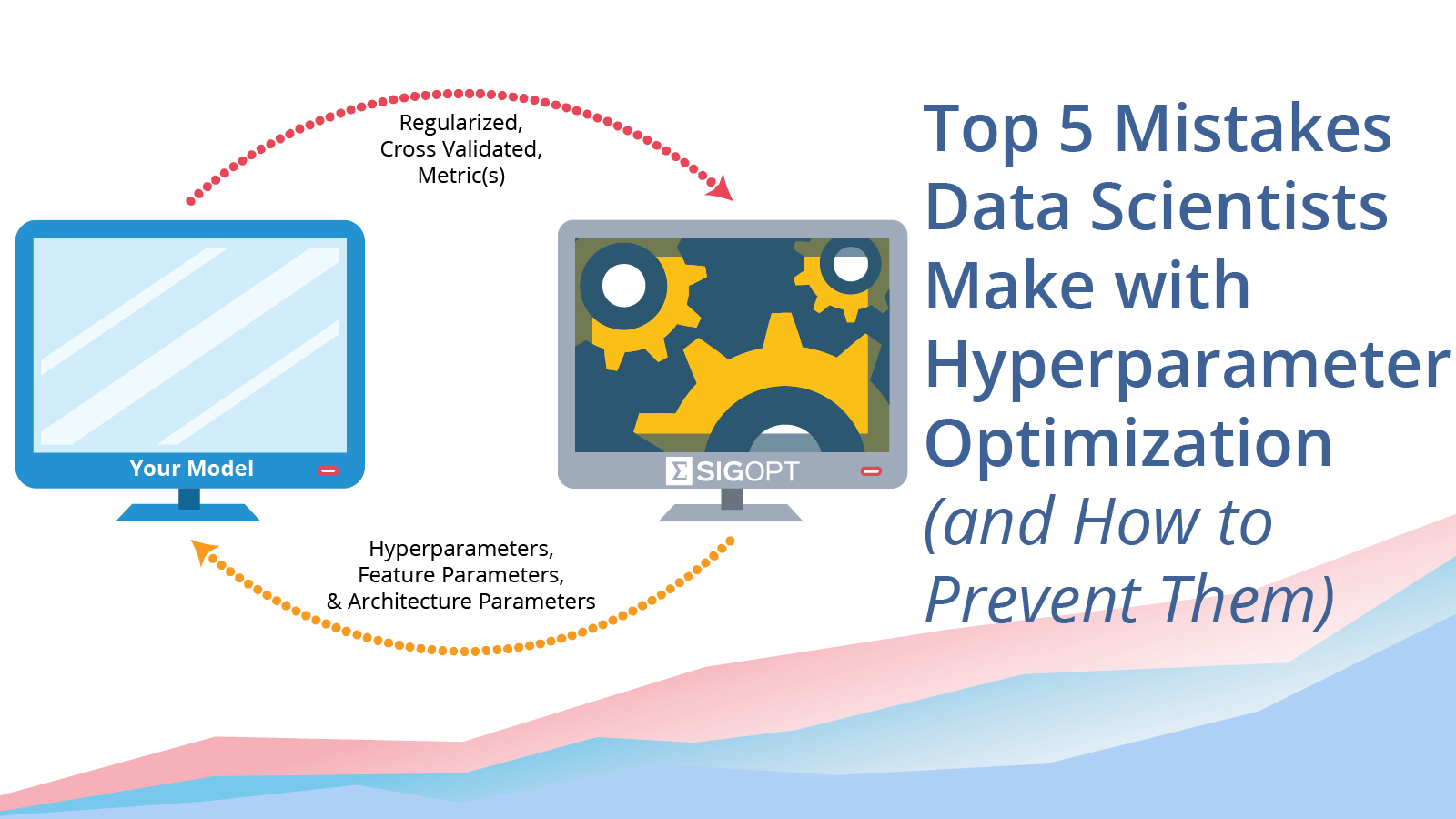
