Machine Learning Loss Not Converging

If we don't scale the data, the level curves (contours) of the loss are narrower and taller, which means gradient descent takes longer to converge (see figure 3). Delve into the data science behind logistic regression.
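The effect of scaling on convergence can be illustrated with a small sketch. Everything here is an assumption made for illustration: the synthetic data, the helper names `standardize` and `steps_to_fit`, and the two learning rates, each picked so that plain batch gradient descent stays stable on its version of the data.

```python
import statistics

def standardize(column):
    """Rescale a list of numbers to zero mean and unit variance."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    return [(v - mean) / std for v in column]

def steps_to_fit(x1, x2, y, lr, loss_target=1e-6, max_steps=5000):
    """Batch gradient descent on mean squared error for y ~ w1*x1 + w2*x2 + b.

    Returns the number of steps taken before the loss drops below
    loss_target, or max_steps if it never does.
    """
    w1 = w2 = b = 0.0
    n = len(y)
    for step in range(max_steps):
        errors = [w1 * a + w2 * c + b - t for a, c, t in zip(x1, x2, y)]
        loss = sum(e * e for e in errors) / n
        if loss < loss_target:
            return step
        # Gradient of the mean squared error with respect to each parameter.
        w1 -= lr * 2.0 * sum(e * a for e, a in zip(errors, x1)) / n
        w2 -= lr * 2.0 * sum(e * c for e, c in zip(errors, x2)) / n
        b -= lr * 2.0 * sum(errors) / n
    return max_steps

# Hypothetical data: x1 lies in [0, 1), x2 in [0, 980], so the scales differ widely.
x1 = [(i % 7) / 7 for i in range(50)]
x2 = [float((i * 37) % 50) * 20 for i in range(50)]
y = [3.0 * a + 0.002 * c + 1.0 for a, c in zip(x1, x2)]

# The raw data forces a tiny learning rate; larger values diverge along x2.
raw_steps = steps_to_fit(x1, x2, y, lr=1e-6)
scaled_steps = steps_to_fit(standardize(x1), standardize(x2), y, lr=0.1)
print("raw:", raw_steps, "scaled:", scaled_steps)
```

On the unscaled data the large feature dictates a very small step size, so the other parameters barely move within the step budget. After standardization a much larger step is stable and the loss target is reached well before the budget runs out.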

Uber AI Labs Proposes Loss Change Allocation (LCA), a New Method That Provides a Rich Window Into the Neural Network Training Process

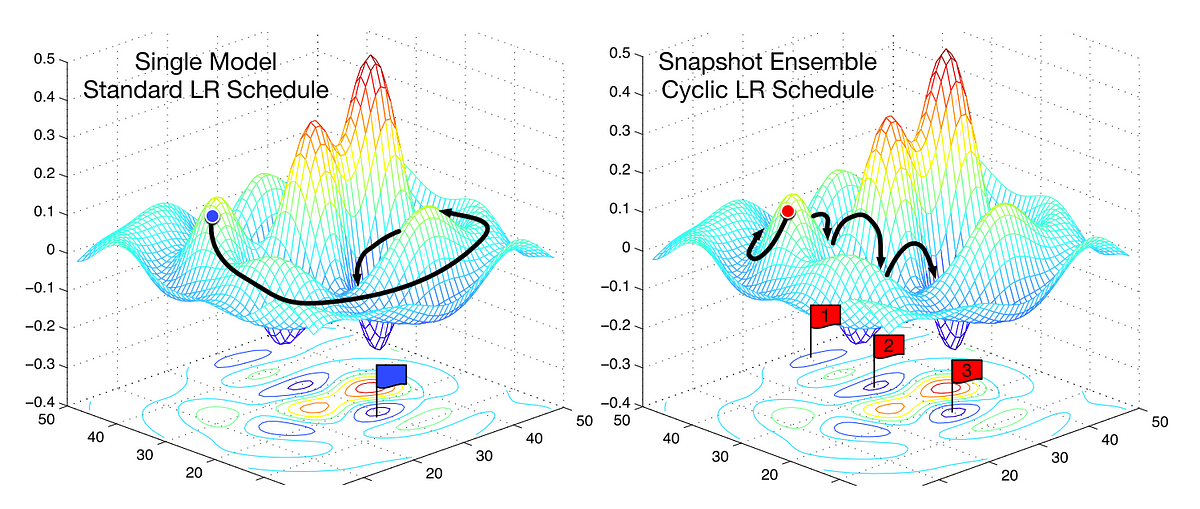

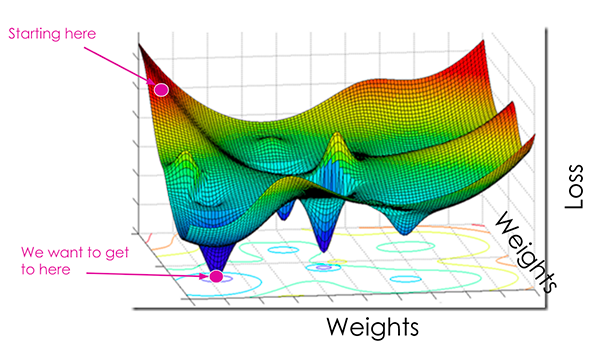

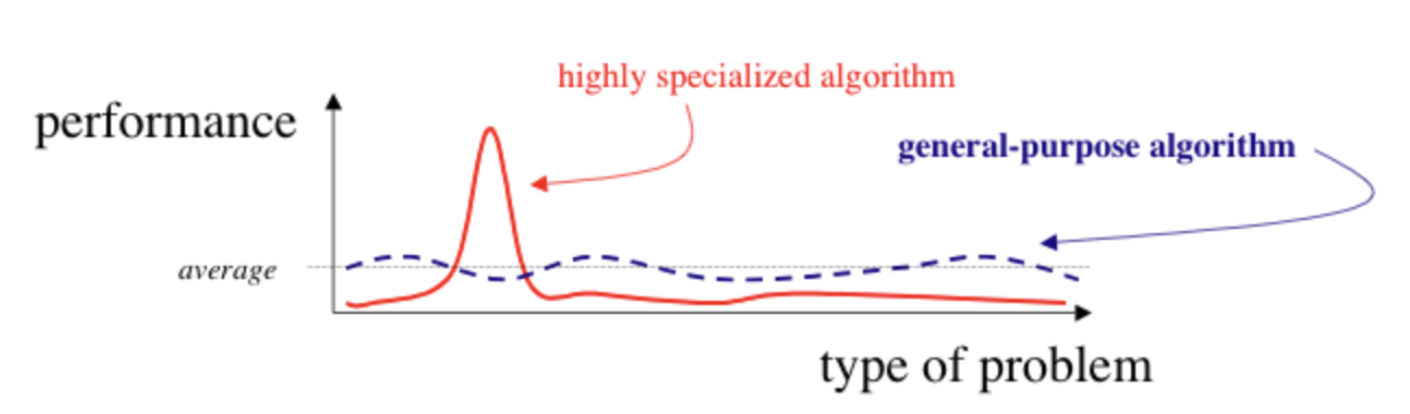

Knowing the kind of loss surface we are dealing with can tremendously help us design and train our models.

To converge in machine learning is to reach an error very close to a local or global minimum; equivalently, you can see it as reaching a performance very close to a local or global optimum. You need to tone down some of the numbers that might be causing such a large initial loss, and maybe also make the weight updates smaller. Any help would be much appreciated.

When my network doesn't learn, I turn off all regularization and verify that the non-regularized network works correctly. Reduce the learning rate. In other words, a model converges when additional training will not improve the model.

A machine learning model reaches convergence when it achieves a state during training in which the loss settles to within an error range around the final value. How to identify a convergence failure by reviewing learning curves of generator and discriminator loss over time. In many machine learning papers and talks, I have seen people refer to loss convergence.
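One way to turn "the loss settles to within an error range" into a check is to look at the spread of the most recent loss values. The sketch below is only one possible criterion; the function name `has_converged`, the window size, and the tolerance are illustrative assumptions, not a standard definition.

```python
def has_converged(losses, window=5, tol=1e-3):
    """Return True if the last `window` losses all lie within `tol` of each other."""
    if len(losses) < window:
        return False  # not enough history to judge
    recent = losses[-window:]
    return max(recent) - min(recent) < tol

# A loss curve that halves every epoch flattens out quickly.
flattening = [0.5 ** k for k in range(30)]
# A loss curve that is still dropping steeply.
still_dropping = [1.0, 0.8, 0.6, 0.4, 0.2]

print(has_converged(flattening))      # True
print(has_converged(still_dropping))  # False
```

A check like this only says that the loss has stopped moving; it does not say whether the value it settled at is a good one.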

3 and it's not converging to 0. When the model converges, there is usually no significant error decrease or performance increase anymore. I am super new to deep learning and I don't know much about it.

A model is said to converge when the series $s(n) = \mathrm{loss}_{w_n}(\hat{y}, y)$, where $w_n$ is the set of weights after the $n$-th iteration of back-propagation and $s(n)$ is the $n$-th term of the series, is a converging series. The label for this dataset has two possible values. If one uses naive backpropagation, then these parameters are the learning rate and momentum.
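Spelled out, and reading "series" as the sequence of loss values indexed by training iteration (an interpretation, since the text does not state it), the convergence condition can be written as follows. The limit value $s^{*}$ is a symbol introduced here for illustration.

$$
s(n) = \mathrm{loss}_{w_n}(\hat{y}, y), \qquad
\lim_{n \to \infty} s(n) = s^{*}
\iff
\forall \varepsilon > 0 \;\; \exists N \;\; \text{such that} \;\; n \ge N \implies \lvert s(n) - s^{*} \rvert < \varepsilon .
$$

In words: past some iteration $N$, every later loss value stays within $\varepsilon$ of $s^{*}$, however small $\varepsilon$ is chosen.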

The autoencoder is trying to match the input, and if the numbers are large here, this. Playground is a program developed especially for this course to teach machine learning principles. Here we present a comprehensive analysis of logistic regression, which can be used as a guide for beginners and advanced.

Make sure to scale the data if it's on very different scales. However, at the time that your network is struggling to decrease the loss on the training data (when the network is not learning), regularization can obscure what the problem is. This is the first of several Playground exercises.

The most commonly used rates are 0.001, 0.003, 0.01, 0.03, 0.1, and 0.3. Loss not Converging for CNN Model.
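A simple way to use a list of candidate learning rates is to try each one for a fixed number of steps and compare the resulting loss. The sketch below assumes the rates above read as 0.001, 0.003, 0.01, 0.03, 0.1, and 0.3, and uses a toy one-parameter objective `(w - target) ** 2` chosen purely for illustration; `final_loss` is a hypothetical helper.

```python
def final_loss(lr, steps=50, start=0.0, target=3.0):
    """Run gradient descent on (w - target)**2 and return the loss after `steps` updates."""
    w = start
    for _ in range(steps):
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= lr * grad
    return (w - target) ** 2

# Assumed reading of the candidate rates.
rates = [0.001, 0.003, 0.01, 0.03, 0.1, 0.3]
results = {lr: final_loss(lr) for lr in rates}
best = min(results, key=results.get)

for lr, loss in results.items():
    print(f"lr={lr}: loss after 50 steps = {loss:.3e}")
print("best rate on this toy problem:", best)
```

On this toy objective every listed rate is stable, so the largest one wins. On a real model a rate that is too large can make the loss oscillate or diverge, which is why a sweep over several values is worth running.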

Learning Rate and Convergence. Each Playground exercise generates a dataset. The series is, of course, an infinite series only if you assume that a loss of 0 is never actually achieved and that the learning rate keeps getting smaller.

Download the entire modeling process with this Jupyter Notebook. Normalise your input data. Understanding the loss surface of your objective function is one of the most essential and fundamental concepts in machine learning.

Gradient descent with different learning rates. This is because the ability to train a model is precisely the ability to minimize a loss function. I assume they refer to loss on the development set, but what I am not sure about is whether they mean that the loss drops at each epoch of the training procedure when they run the training code, or that the training code always ends up at the same loss value when they run it several times.

Logistic regression, alongside linear regression, is one of the most widely used machine learning algorithms in real production settings. If the problem is only convergence (not the actual well-trained network, which is way too broad a problem for SO), then the only thing that can be the problem, once the code is OK, is the training method parameters.


Loss Function Loss Function In Machine Learning

Will Big Data Influence Artificial Intelligence As A Major Disruption Machine Learning Deep Learning Big Data Artificial Intelligence

Training Loss Goes Down And Up Again What Is Happening Cross Validated

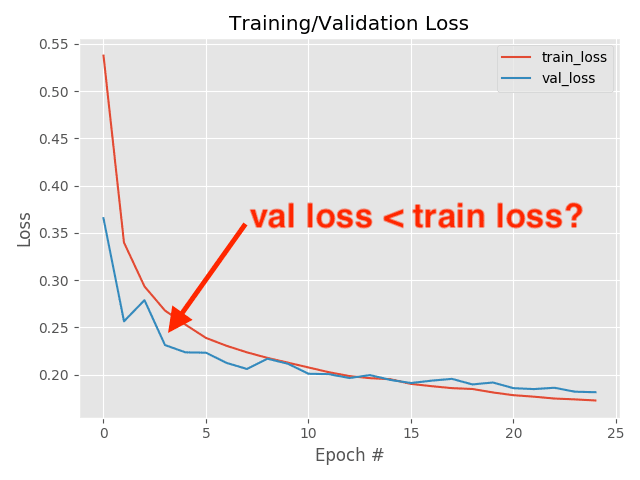

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

Foundations Of Data Science Book By Avrim Blum John Hopcroft And Ravindran Kannan Free Download Data Science Science Books Science

Training Loss Increases With Time Cross Validated

Understanding Learning Rates And How It Improves Performance In Deep Learning By Hafidz Zulkifli Towards Data Science

Gradient Descent In Practice Ii Learning Rate Coursera Machine Learning Learning Online Learning

Noisy Training Loss Stack Overflow

Reinforcement Learning Decreasing Loss Without Increasing Reward Data Science Stack Exchange

Reference To Learn How To Interpret Learning Curves Of Deep Convolutional Neural Networks Cross Validated

Interpreting Loss Curves Testing And Debugging In Machine Learning

Loss Accuracy Are These Reasonable Learning Curves Stack Overflow

When Can Validation Accuracy Be Greater Than Training Accuracy For Deep Learning Models

Understanding And Reducing Bias In Machine Learning By Jaspreet Towards Data Science
