Machine Learning Epoch Meaning

Datasets are usually grouped into batches, especially when the amount of data is very large. In neural network terminology, one epoch is one forward pass and one backward pass of all the training examples.


In the paper you mention, they seem to be more flexible regarding the meaning of epoch, as they just define one epoch as a certain number of weight updates.

Since one epoch is too big to feed to the computer at once, we divide it into several smaller batches. An epoch elapses when the entire dataset is passed forward and backward through the neural network exactly one time. (Epoch (machine learning), Dr Daniel J Bell and Assoc Prof Frank Gaillard et al.)

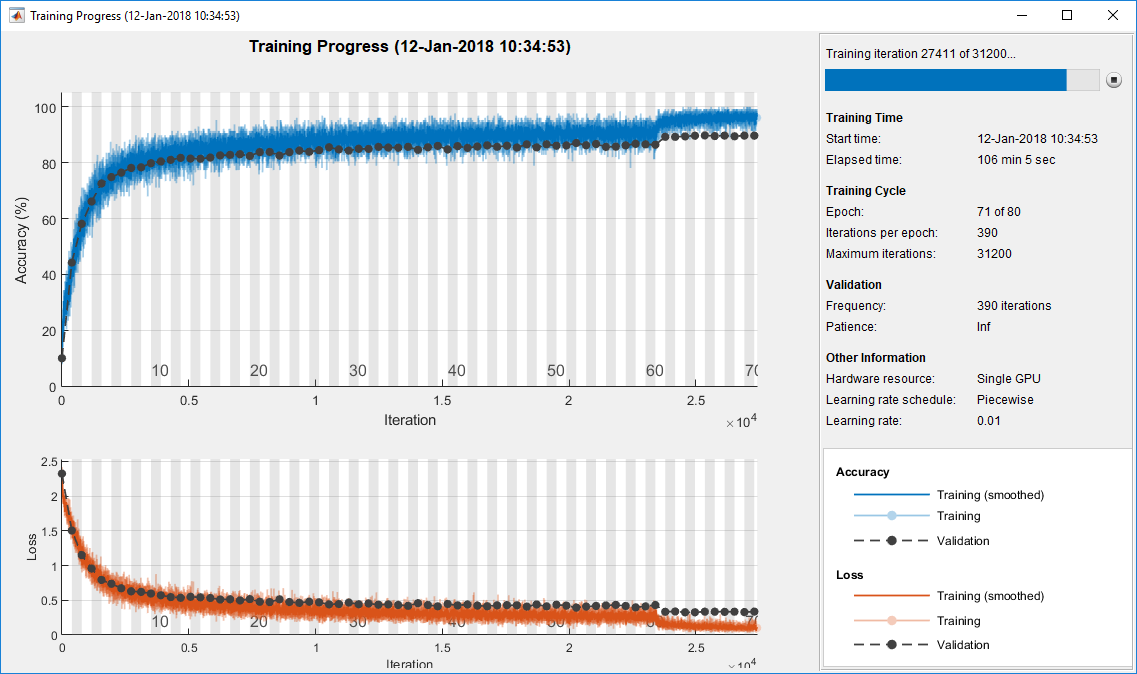

It is typical to train a deep neural network for multiple epochs. The training data can be split into batches for more efficient computation. A loss is a number indicating how bad the model's prediction was on a single example.

If the entire dataset cannot be passed into the algorithm at once, it must be divided into mini-batches. In this example we will use batch gradient descent, meaning that the batch size will be set to the size of the training dataset. In the context of machine learning, an epoch is one complete pass through the training data.
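
As a rough illustration of how a dataset is cut into mini-batches, the sketch below counts how many batches make up one epoch and yields the index range of each batch. The helper names `batches_per_epoch` and `iter_batches` and the sample counts are assumptions made for this example, not part of any library.

```python
import math


def batches_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of batches (iterations) needed to see every sample once."""
    return math.ceil(n_samples / batch_size)


def iter_batches(n_samples: int, batch_size: int):
    """Yield (start, stop) index pairs that together cover one epoch."""
    for start in range(0, n_samples, batch_size):
        # The last batch may be smaller when batch_size does not divide n_samples.
        yield start, min(start + batch_size, n_samples)


n_samples = 1000  # hypothetical training set size

# Mini-batch setting: several batches per epoch.
mini_batches = list(iter_batches(n_samples, 100))

# Batch gradient descent: the batch size equals the training set size,
# so one epoch consists of a single batch.
full_batch = list(iter_batches(n_samples, n_samples))
```

With these assumed numbers, an epoch is 10 iterations in the mini-batch case and a single iteration in the batch gradient descent case.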

Some people use the term iteration loosely and refer to putting one. If the model's prediction is not perfect, the loss is greater than zero. The goal of training a model is to find a set of weights.

As far as I understand it, an epoch, as runDOSrun is saying, is a run through all of the TrainingSet, not the DataSet. One epoch means that each sample in the training dataset has had an opportunity to update the internal model parameters. One epoch is when an ENTIRE dataset is passed forward and backward through the neural network only ONCE.

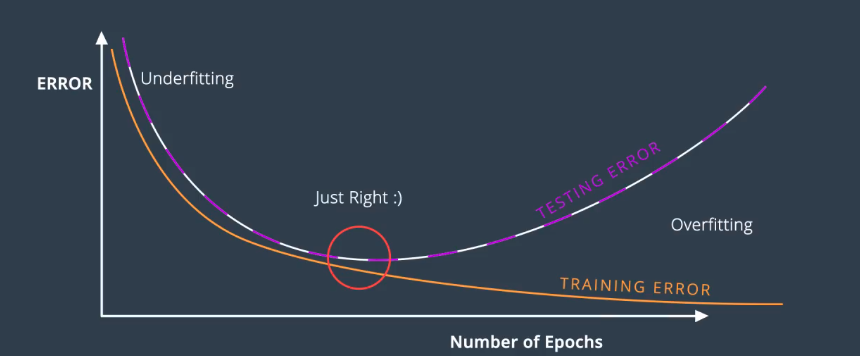

Learning machines such as feed-forward neural nets that use iterative algorithms often need many epochs during their learning phase. Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms one's preexisting beliefs or hypotheses. An epoch is loosely considered an iteration if the batch size is equal to the size of the entire training dataset.

It is also common to randomly shuffle the training data between epochs. Normally, at the beginning of training, you want the gradient steps to update the weights quickly. Because DataSet = TrainingSet + ValidationSet.
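
A minimal sketch of shuffling between epochs, assuming samples are addressed by integer index. The generator name `epoch_orders` and the fixed seed are choices made for this illustration only.

```python
import random


def epoch_orders(n_samples: int, n_epochs: int, seed: int = 0):
    """Yield a freshly shuffled visiting order of sample indices for each epoch."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    indices = list(range(n_samples))
    for _ in range(n_epochs):
        rng.shuffle(indices)  # reshuffle before every epoch
        yield list(indices)   # copy, so later shuffles do not alter earlier results


orders = list(epoch_orders(n_samples=8, n_epochs=3))
for epoch, order in enumerate(orders, start=1):
    print(f"epoch {epoch}: {order}")
```

Every epoch still visits each sample exactly once; only the order changes.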

Once all images have been processed one time each, forward and backward through the network, that is one epoch. The input data can be broken down into batches if it is large. Let's look at a loss function that is commonly used in practice, called the mean squared error (MSE).

Why do we use more than one epoch? The learning rate is a parameter that you set at the beginning of the training. Machine learning developers may inadvertently collect or label data in ways that influence an outcome supporting their.

Mean squared error (MSE): for a single sample, with MSE we first calculate the difference (the error) between the provided output prediction and the label. For each epoch, the error is accumulated across all the individual outputs. One epoch is counted when (number of iterations) × (batch size) = total number of images in the training set.
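
The MSE calculation described here can be sketched in a few lines of plain Python. The function names and the sample values are illustrative assumptions.

```python
def squared_error(prediction: float, label: float) -> float:
    """Squared difference between one prediction and its label."""
    return (prediction - label) ** 2


def mean_squared_error(predictions, labels) -> float:
    """Accumulate the squared errors over all outputs and average them."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    total = sum(squared_error(p, y) for p, y in zip(predictions, labels))
    return total / len(predictions)


# Hypothetical predictions and labels: errors are 2 and 0, so MSE = (4 + 0) / 2.
loss = mean_squared_error([3.0, 5.0], [1.0, 5.0])
```

When every prediction matches its label, the accumulated error and therefore the MSE is zero.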

An epoch is a term used in machine learning that indicates the number of passes over the entire training dataset the machine learning algorithm has completed. Training a model simply means learning (determining) good values for all the weights and the bias from labeled examples. Then, after a certain number of steps, you should decrease the learning rate.
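
One common way to decrease the learning rate after a certain number of steps is step decay. The sketch below assumes a halving factor and a decay interval of 100 steps; both values, and the function name `step_decay`, are hypothetical choices rather than recommendations from the text.

```python
def step_decay(initial_lr: float, step: int, decay_every: int, factor: float = 0.5) -> float:
    """Multiply the learning rate by `factor` once every `decay_every` steps."""
    return initial_lr * factor ** (step // decay_every)


# Learning rate at a few points in training, starting from an assumed 0.1.
for step in (0, 99, 100, 250):
    print(step, step_decay(initial_lr=0.1, step=step, decay_every=100))
```

The rate stays at its initial value for the first 100 steps, then is halved at step 100 and again at step 200.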

This can be in random order. You can also batch your epoch so that you only pass through a portion at a time. I like to make sure my definition of epoch is correct.

Epoch is a machine learning term that indicates the number of iterations or passes an algorithm has completed over the training dataset. To conclude, an epoch is the complete cycle of an entire training dataset being learned by a neural model. Hopefully this would make the network converge faster.

The learning rate scales how much your model should update its weights and biases after every step. In mini-batch training you can subdivide the TrainingSet into small sets and update the weights inside an epoch. An epoch is one pass through an entire dataset.
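
To make the relationship between learning rate, mini-batches, and epochs concrete, here is a small sketch of mini-batch gradient descent on a one-variable linear model with an MSE loss. The toy data (points on the line y = 2x + 1), the hyperparameters, and the function name `train` are all assumptions chosen for illustration.

```python
def train(xs, ys, epochs: int, batch_size: int, lr: float):
    """Fit y = w * x + b with mini-batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    updates = 0
    n = len(xs)
    for _ in range(epochs):                    # one epoch = one pass over all samples
        for start in range(0, n, batch_size):  # one iteration = one mini-batch
            xb = xs[start:start + batch_size]
            yb = ys[start:start + batch_size]
            errors = [(w * x + b) - y for x, y in zip(xb, yb)]
            # Gradients of the batch MSE with respect to w and b.
            grad_w = 2 * sum(e * x for e, x in zip(errors, xb)) / len(xb)
            grad_b = 2 * sum(errors) / len(xb)
            # The learning rate scales the size of each update.
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b, updates = train(xs, ys, epochs=2000, batch_size=2, lr=0.05)
```

With four samples and a batch size of two, each epoch performs two weight updates, so the weights change several times within a single epoch.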

Loss is the result of a bad prediction. An epoch is one complete presentation of the data set to be learned to a learning machine. Given 1000 datasets it.

If you have 100 images in your train set then one full pass through your training model on. If the model's prediction is perfect, the loss is zero. Batch size is the total number of training samples present in a single mini-batch.


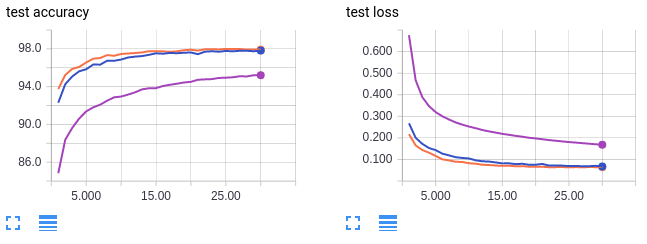

