Loss Functions in Machine Learning

If the real outcomes deviate strongly from the predictions, the loss function returns a very large value. A ReLU function applied to two loss terms, $L_1$ and $L_2$, can be used to add such a penalty.


Gradually, with the help of an optimization algorithm, the model's parameters are adjusted so that the loss, and with it the prediction error, decreases.

To encourage the inequality $L_2 \le L_1$ during training, one can use the objective

$$\min \; L_1 + L_2 + \lambda \, \mathrm{ReLU}(L_2 - L_1)$$

The hyperparameter $\lambda > 0$ controls how steep the penalty is for violating the inequality.
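A minimal sketch of the penalized objective $L_1 + L_2 + \lambda\,\mathrm{ReLU}(L_2 - L_1)$, assuming $L_1$ and $L_2$ are two scalar loss values already computed for the current batch. The names `relu` and `penalized_loss` and the example values are illustrative assumptions, not taken from the original.

```python
def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def penalized_loss(l1: float, l2: float, lam: float = 1.0) -> float:
    """Return L1 + L2 + lam * ReLU(L2 - L1).

    The ReLU term is zero while L2 <= L1 holds and grows linearly with the
    size of the violation when L2 > L1; lam > 0 sets that slope.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    return l1 + l2 + lam * relu(l2 - l1)


# Inequality satisfied (L2 <= L1): no penalty is added.
print(penalized_loss(2.0, 1.0, lam=10.0))  # 3.0
# Inequality violated by 1.0: a penalty of lam * 1.0 is added.
print(penalized_loss(1.0, 2.0, lam=10.0))  # 13.0
```

A larger `lam` makes violations more expensive, but because the penalty is soft, the optimizer can still trade a small violation for a lower $L_1 + L_2$.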

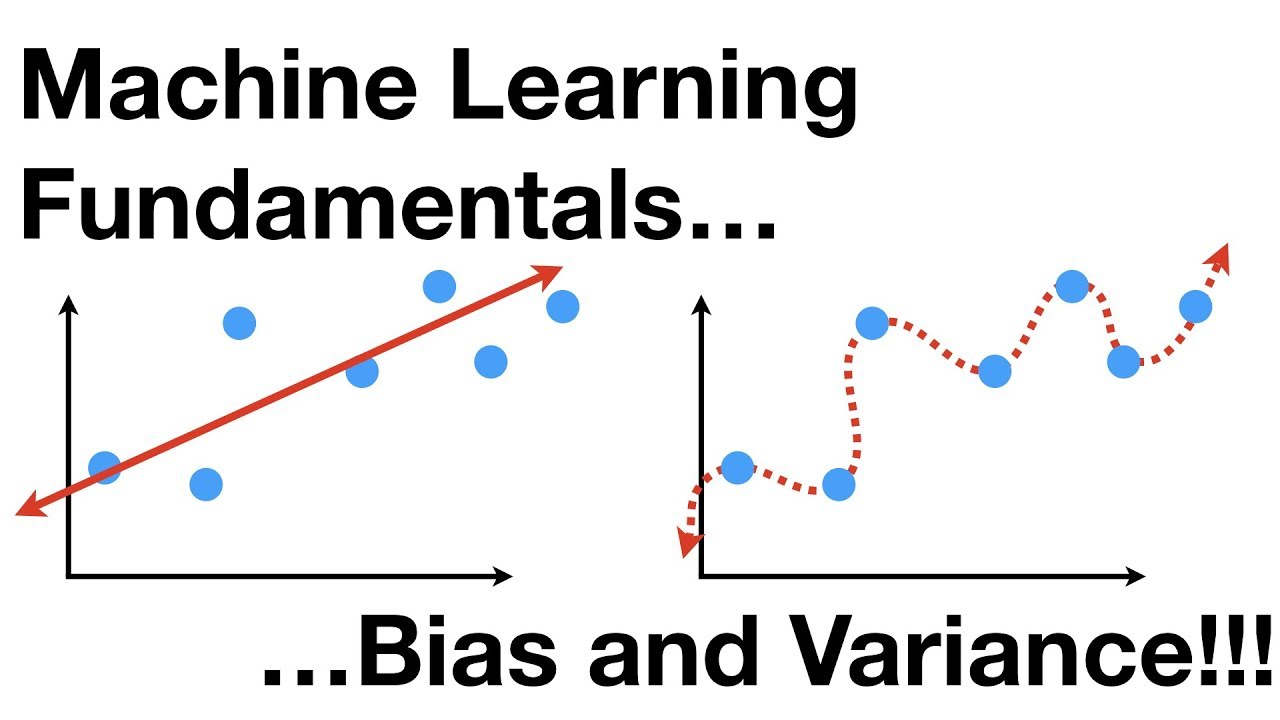

This loss doesn't guarantee that the inequality is satisfied, but it is an improvement over minimizing $L_1 + L_2$ alone.

In supervised learning, a machine learning algorithm builds a model by examining many examples and attempting to find a model that minimizes loss. An iterative approach is one widely used method for reducing loss, and it is as easy and efficient as walking down a hill.
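A minimal sketch of the iterative approach: plain gradient descent fitting a one-feature linear model $y \approx w x + b$ by minimizing the mean squared error. The toy data, learning rate, and number of steps are assumptions chosen for illustration.

```python
import numpy as np


def fit_linear_gd(x, y, learning_rate=0.1, steps=1000):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(steps):
        error = (w * x + b) - y                 # prediction minus target
        grad_w = (2.0 / n) * np.dot(error, x)   # d(MSE)/dw
        grad_b = (2.0 / n) * np.sum(error)      # d(MSE)/db
        # Step "downhill": move each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Noise-free toy data generated from y = 3x + 1.
x = np.linspace(0.0, 1.0, 20)
y = 3.0 * x + 1.0
w, b = fit_linear_gd(x, y)
print(round(w, 3), round(b, 3))
```

Each step evaluates the loss gradient at the current weight and bias and moves a small distance in the direction that lowers the loss; after enough steps the parameters approach the values that generated the data.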

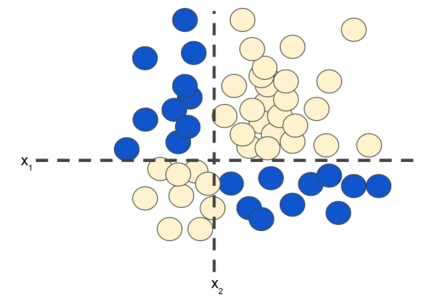

A loss function characterizes how well the model performs on the training data. In typical machine learning problems, we seek to minimize the error between the predicted value and the actual value. To train a model, we need a good way to reduce the model's loss.
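As a concrete example of measuring the error between predicted and actual values, here is a sketch of the mean squared error (MSE), one common regression loss. The choice of MSE and the sample numbers are assumptions for illustration; the per-example function is the loss for a single example, and the average is the value minimized over the whole set.

```python
import numpy as np


def squared_errors(y_pred, y_true):
    """Per-example loss: squared difference between prediction and target."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    return (y_pred - y_true) ** 2


def mean_squared_error(y_pred, y_true):
    """Average of the per-example losses over the whole set."""
    return float(np.mean(squared_errors(y_pred, y_true)))


y_true = [3.0, 5.0, 2.0]
close = [2.5, 5.0, 2.5]  # small deviations from the targets
far = [0.0, 9.0, 2.0]    # large deviations from the targets
print(mean_squared_error(close, y_true))  # about 0.167
print(mean_squared_error(far, y_true))    # about 8.33
```

Because the errors are squared, predictions that deviate strongly from the actual values produce a disproportionately large loss.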

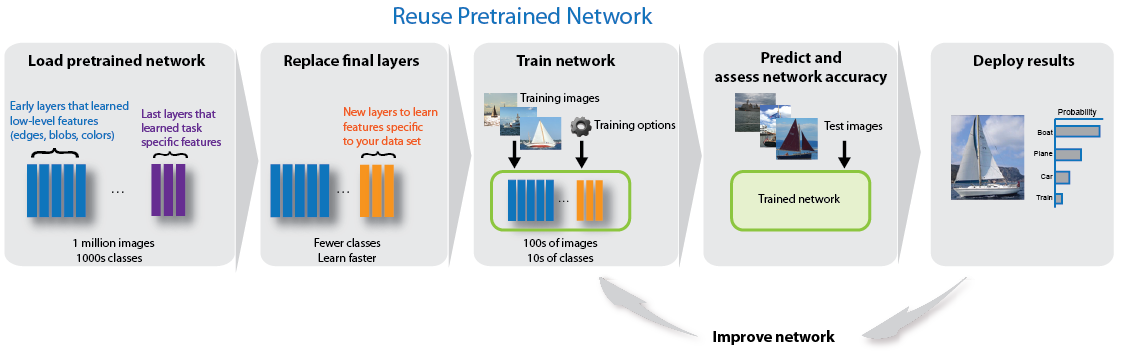

This process is called empirical risk minimization.

Perceptual loss functions are used to compare two images that look similar, such as the same photo shifted by one pixel. Rather than matching individual pixels, they compare high-level differences, such as content and style discrepancies, between the images.

A perceptual loss function is very similar to the per-pixel loss function, as both are used to train feed-forward neural networks on images.
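A toy sketch of why a feature-space (perceptual) comparison can tolerate a one-pixel shift that a per-pixel loss punishes heavily. Real perceptual losses compare activations of a pretrained convolutional network; here `toy_features` is a hypothetical stand-in that only measures overall brightness and average horizontal edge strength, assumed purely for illustration.

```python
import numpy as np


def per_pixel_loss(a, b):
    """Mean squared difference computed pixel by pixel."""
    return float(np.mean((a - b) ** 2))


def toy_features(img):
    """Hypothetical stand-in for a pretrained network's features:
    overall brightness and average horizontal edge strength."""
    brightness = img.mean()
    edge_strength = np.abs(np.diff(img, axis=1)).mean()
    return np.array([brightness, edge_strength])


def toy_perceptual_loss(a, b):
    """Mean squared difference between feature vectors instead of pixels."""
    return float(np.mean((toy_features(a) - toy_features(b)) ** 2))


# 8x8 image of vertical stripes, then the same image shifted right by one pixel.
image = np.zeros((8, 8))
image[:, ::2] = 1.0
shifted = np.roll(image, shift=1, axis=1)

print(per_pixel_loss(image, shifted))       # every pixel differs: 1.0
print(toy_perceptual_loss(image, shifted))  # same stripe statistics: 0.0
```

The per-pixel loss reaches its maximum even though the two images look alike, while the feature comparison sees no difference. Real network features are not fully shift-invariant; the toy only illustrates the idea of comparing in feature space.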

In the loss-function teaching framework, a teacher model outputs loss functions that the student model (i.e., the everyday machine learning model that solves the task) must minimize. Machines learn by means of a loss function: it is a method of evaluating how well a particular algorithm models the given data.

When the loss is calculated for a single training example, the function is called the loss (or error) function; the average over the whole training set is usually called the cost function. The term "loss" or "error" represents the penalty for failing to achieve the expected output. To optimize any machine learning model, a suitable loss function must be chosen.

This loss is just a composition of functions readily available in modern neural network libraries. The values output by the loss function evaluate the performance of the current machine learning model and set the optimization direction for the model parameters.

The loss function for a quantile $q$, given the set of predictions `y_p` and the actual values `y`, is:

```python
import tensorflow as tf

def quantile_loss(q, y_p, y):
    e = y - y_p  # actual minus predicted: under-prediction weighted by q, over-prediction by 1 - q
    return tf.reduce_mean(tf.maximum(q * e, (q - 1) * e))
```

Keras calls a loss as `loss(y_true, y_pred)`, so the function can be passed as `lambda y_true, y_pred: quantile_loss(q, y_pred, y_true)`. Our example Keras model has three fully connected hidden layers, each with one hundred neurons.

Training a model simply means learning (determining) good values for all the weights and the bias from labeled examples. Loss is the penalty for a bad prediction.

Cost function, loss function, or error: in machine learning, the cost function tells you whether your learning model is good or not; in other words, it is used to estimate how badly the model performs.
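To inspect values without TensorFlow, the same pinball (quantile) loss can be mirrored in NumPy, with the residual taken as actual minus predicted, $e = y - y_p$, so that the loss targets the $q$-th quantile. The name `quantile_loss_np` and the toy data are illustrative assumptions.

```python
import numpy as np


def quantile_loss_np(q, y_p, y):
    """NumPy pinball loss: mean of max(q*e, (q-1)*e) with e = y - y_p."""
    e = np.asarray(y, dtype=float) - np.asarray(y_p, dtype=float)
    return float(np.mean(np.maximum(q * e, (q - 1) * e)))


q = 0.9
# Under-predicting by 1 costs q; over-predicting by 1 costs (1 - q).
print(quantile_loss_np(q, [1.0], [2.0]))  # 0.9
print(quantile_loss_np(q, [3.0], [2.0]))  # about 0.1

# The constant prediction with the lowest loss sits near the q-th quantile.
y = np.arange(1.0, 101.0)
candidates = np.arange(1.0, 101.0)
losses = [quantile_loss_np(q, np.full_like(y, c), y) for c in candidates]
print(candidates[int(np.argmin(losses))])  # close to the 90th percentile
```

The asymmetric weights are what push the minimizer away from the median: with $q = 0.9$, predicting too low is nine times as costly as predicting too high, so the best constant lands where about 90% of the values lie below it.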
