Machine Learning Compiler Optimization

Trained on representative bodies of code, this approach can predict specific optimization settings that outperform the standard -O3 or -Oz levels without the huge cost of exhaustively searching the space of settings.
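Here is a rough illustration of "trained on representative bodies of code". The sketch uses a k-nearest-neighbour rule: a new program gets the flag setting that worked best for the most similar training programs. The feature vectors, the training pairs, and the choice of k-NN are all assumptions made for illustration. They are not the method or data of any specific system mentioned here.

```python
import math

# Hypothetical training data: each entry pairs a program's feature vector
# (e.g. normalized counts of loops, branches, memory ops) with the flag
# setting that performed best on it when measured offline.
TRAINING_SET = [
    ((0.9, 0.1, 0.3), "-O3"),
    ((0.2, 0.8, 0.1), "-Oz"),
    ((0.8, 0.2, 0.4), "-O3"),
    ((0.1, 0.7, 0.2), "-Oz"),
]


def euclidean(a, b):
    """Distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def predict_setting(features, training_set=TRAINING_SET, k=3):
    """Predict a setting by majority vote among the k nearest training programs."""
    neighbours = sorted(training_set, key=lambda item: euclidean(features, item[0]))[:k]
    votes = {}
    for _, setting in neighbours:
        votes[setting] = votes.get(setting, 0) + 1
    # Dicts keep insertion order and max() returns the first maximum,
    # so ties go to the setting of the closest neighbour.
    return max(votes, key=votes.get)
```

For example, `predict_setting((0.85, 0.15, 0.35))` returns `"-O3"` because two of its three nearest neighbours preferred `-O3`. No compilation happens at prediction time, which is where the savings over search come from.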


Meta Optimization is a methodology for automatically fine-tuning compiler heuristics.

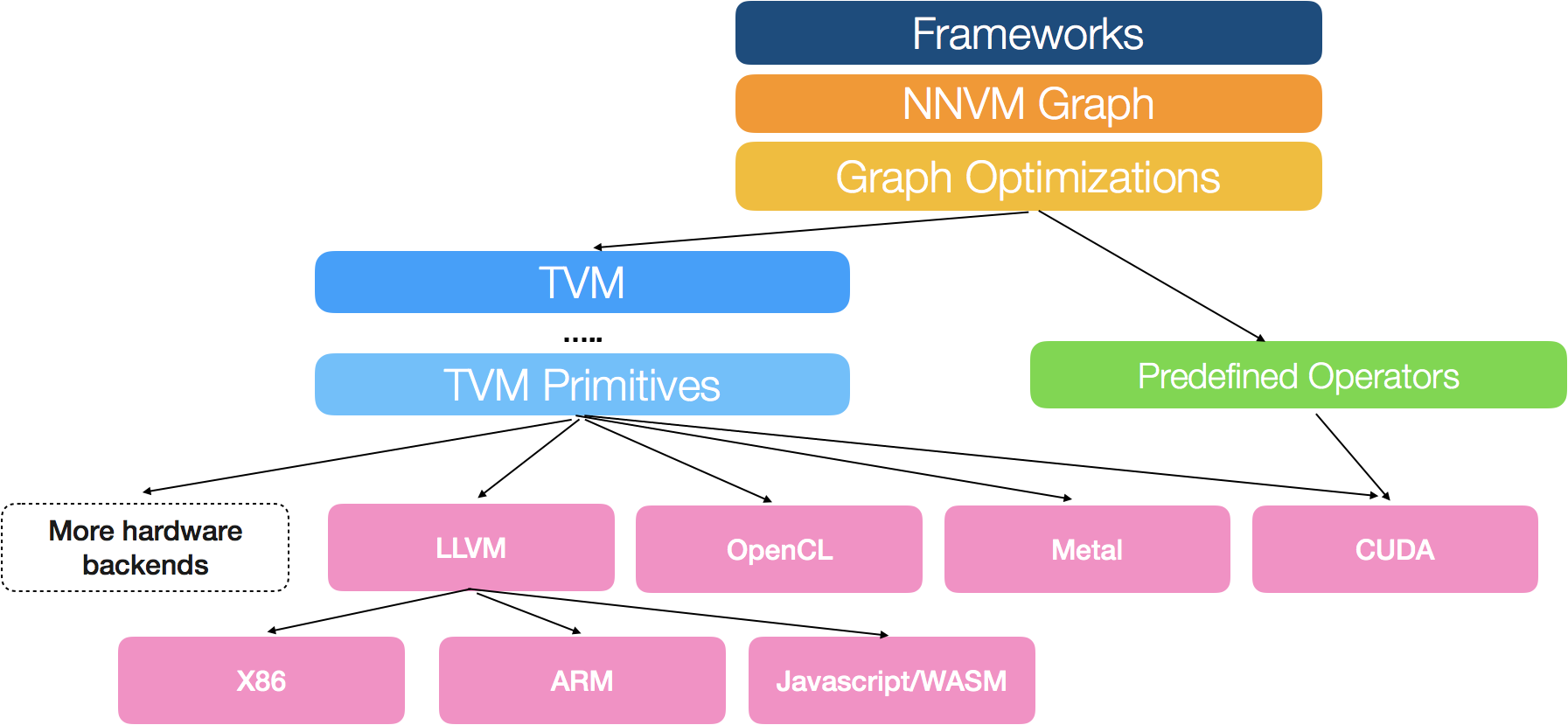

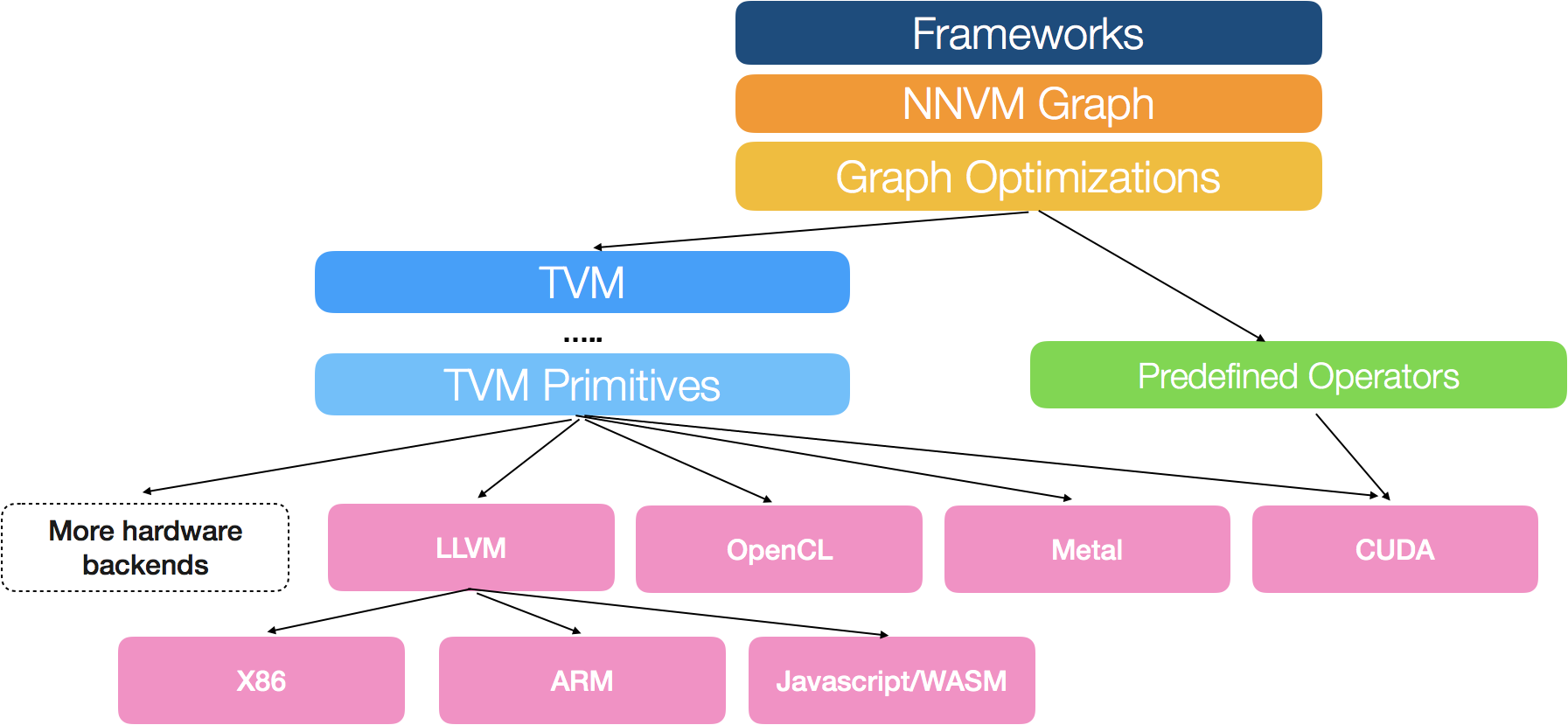

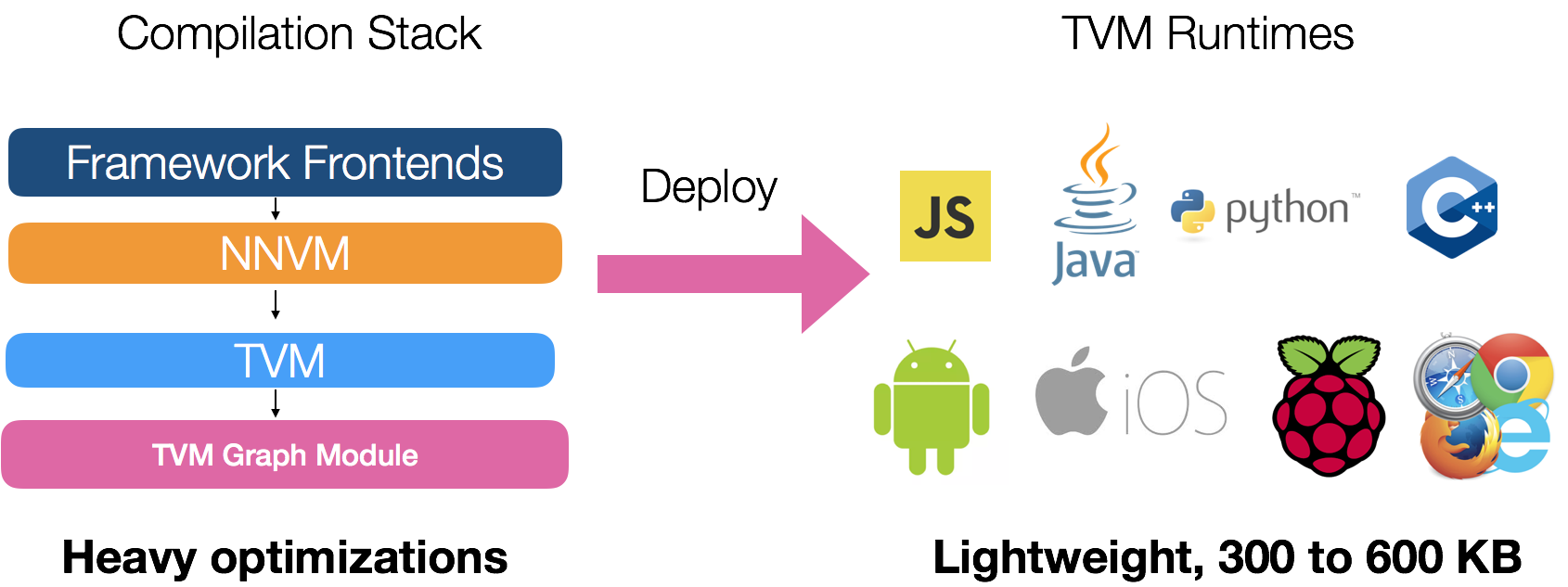

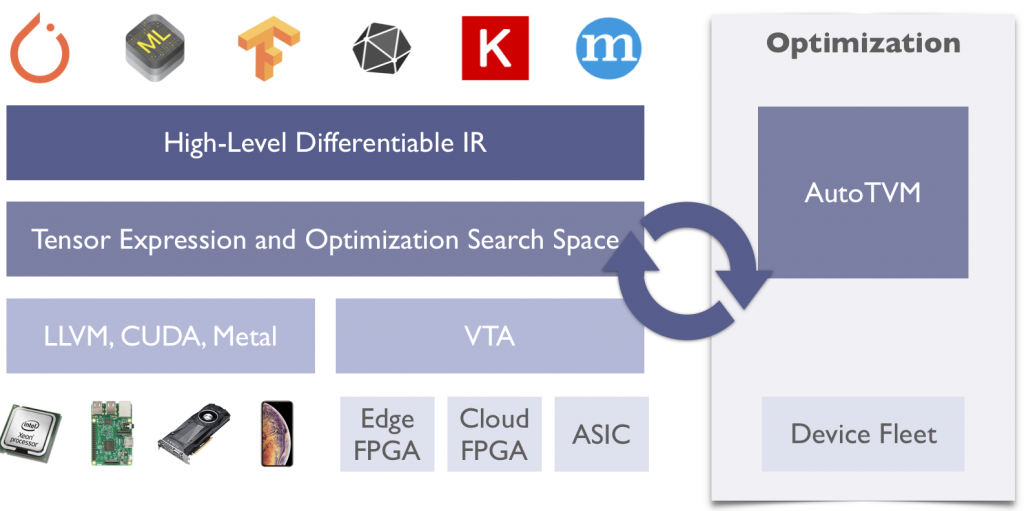

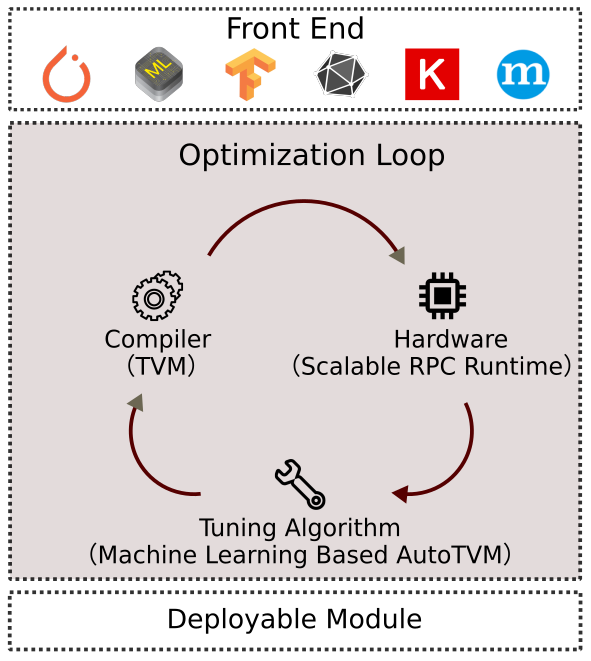

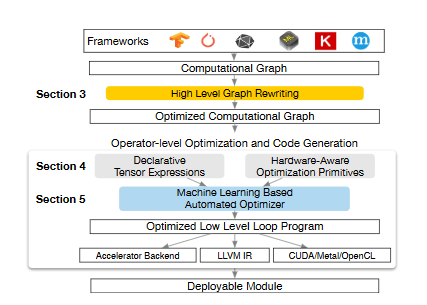

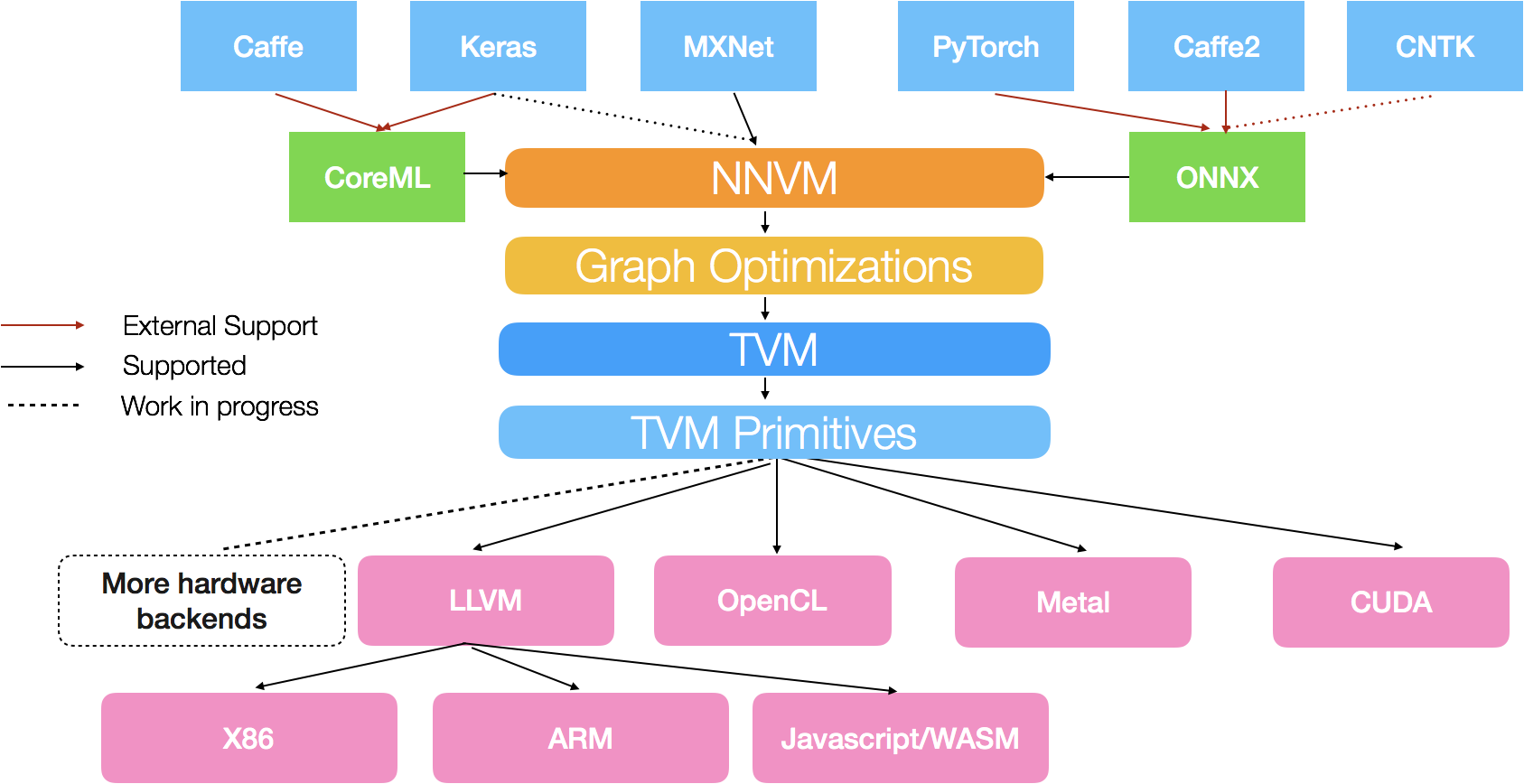

Compiler performance-flag selection is a natural target for machine learning. Compilers offer a maximum optimization level (e.g. -O3 in GCC or -fast in PGI), and self-tuning or adaptive methods can be used to optimize beyond such fixed levels. Supported by Innovate UK, and working with the University of Bristol and the Science and Technology Facilities Council (STFC) Hartree Centre, Embecosm has developed MAGEEC, a commercially robust machine learning infrastructure for compiler tool chains.

TVM is a compiler that exposes graph-level and operator-level optimizations to provide performance portability to deep learning workloads across diverse hardware back-ends. It solves optimization challenges specific to deep learning, such as high-level operator fusion, mapping to arbitrary hardware primitives, and memory latency hiding.
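Operator fusion can be shown without any compiler at all. The plain-Python sketch below is not TVM's API. It computes `relu(x * w + b)` twice. The first version runs three separate elementwise "kernels", and each one allocates an intermediate list. The second version runs a single fused loop. Both give identical results, but the fused version makes one pass over the data and allocates no intermediates. That reduction in memory traffic is what fusion targets.

```python
def unfused(x, w, b):
    """Three separate 'kernels', each materializing a full intermediate list."""
    scaled = [xi * w for xi in x]          # kernel 1: multiply
    shifted = [si + b for si in scaled]    # kernel 2: add bias
    return [max(0.0, v) for v in shifted]  # kernel 3: ReLU


def fused(x, w, b):
    """One fused 'kernel': a single pass with no intermediate buffers."""
    return [max(0.0, xi * w + b) for xi in x]
```

Both `unfused([1.0, -2.0, 3.0], 2.0, 0.5)` and `fused([1.0, -2.0, 3.0], 2.0, 0.5)` return `[2.5, 0.0, 6.5]`.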

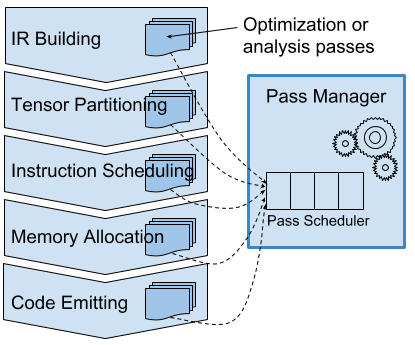

My EECS PhD dissertation talk at UC Berkeley, after two years of attendance. One of the key contributors to recent machine learning (ML) advancements is the development of custom accelerators. A compiler's optimization plan can be divided into three logical parts: optimization selection, optimization ordering, and optimization tuning.

In this thesis, deep reinforcement learning (RL) and other machine learning methods are applied to solve complex compiler optimization tasks. In the last decade, machine-learning-based compilation has moved from an obscure research niche to a mainstream activity, as the survey "Machine Learning in Compiler Optimization" points out.

The goal is to generate a good combination of compiler flags for a given program. The machine-learning-based approach matched the results of the brute-force algorithm in a fraction of the time. We present our research and specific solutions for improving all three of these steps in optimization planning.
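To see why brute force is expensive, here is a minimal sketch of exhaustive flag search. The flag names are hypothetical, and `measure` is a made-up cost function standing in for "compile with these flags and time the binary". With n on/off flags, the search needs 2**n compile-and-measure runs. A learned model tries to skip those runs.

```python
from itertools import product

# Hypothetical on/off optimization flags; real compilers expose many more.
FLAGS = ("unroll", "vectorize", "inline")


def measure(config):
    """Stand-in for compiling and timing; a made-up cost model.

    Each flag helps a little, but unrolling and vectorizing together
    are modelled as interacting badly (e.g. code-size blow-up).
    """
    cost = 100.0
    cost -= 10 * config["unroll"] + 15 * config["vectorize"] + 5 * config["inline"]
    if config["unroll"] and config["vectorize"]:
        cost += 20
    return cost


def brute_force(flags=FLAGS):
    """Evaluate all 2**len(flags) combinations and keep the cheapest."""
    best_config, best_cost, evaluations = None, float("inf"), 0
    for bits in product((False, True), repeat=len(flags)):
        config = dict(zip(flags, bits))
        cost = measure(config)
        evaluations += 1
        if cost < best_cost:
            best_config, best_cost = config, cost
    return best_config, best_cost, evaluations
```

Here the best setting is vectorize plus inline without unroll, at cost 80.0, found after 8 evaluations. Turning every flag on would cost 90.0. Each added flag doubles the number of evaluations.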

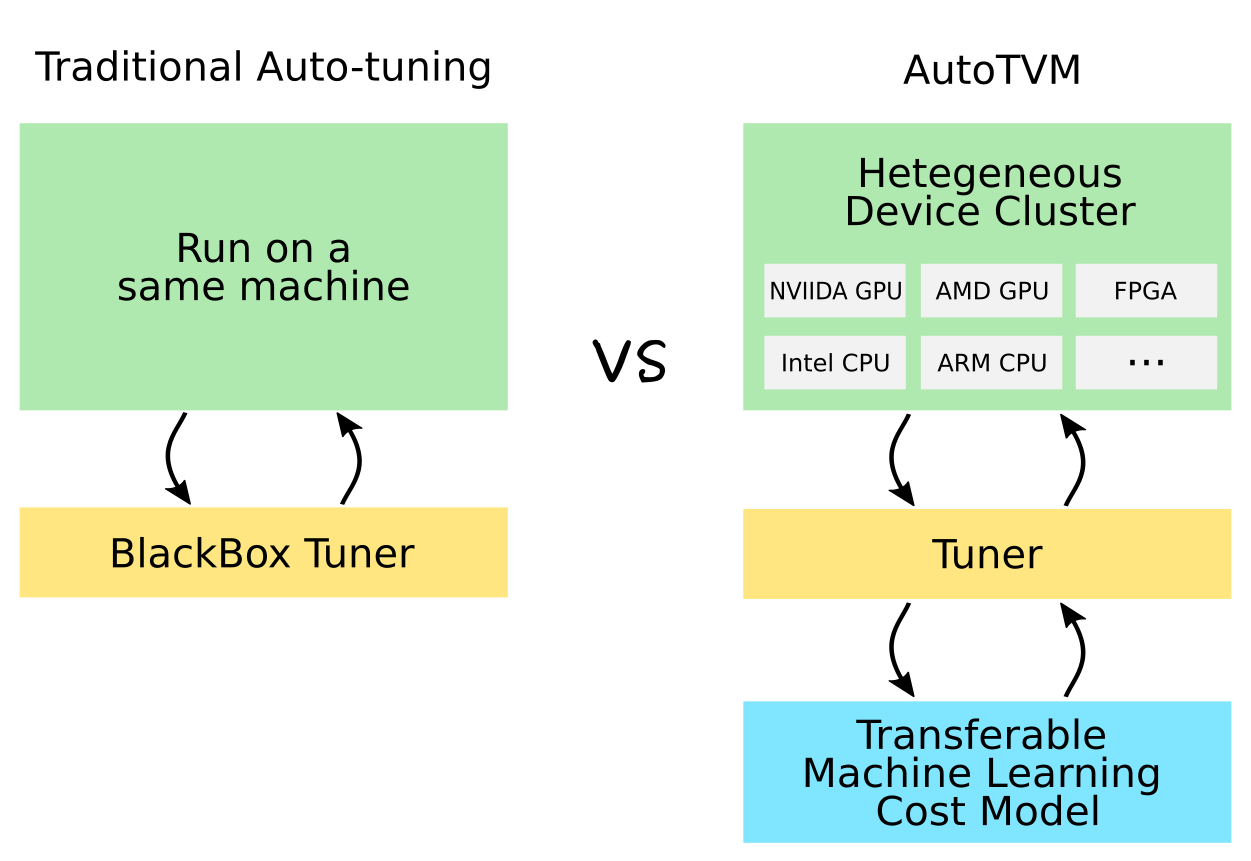

Typical optimization problems include phase ordering, vectorization, scheduling, and cache allocation. Tuning compiler optimizations for rapidly evolving hardware makes porting and extending an optimizing compiler for each new platform extremely challenging. Our main research areas include statistical and online learning, convex and non-convex optimization, and combinatorial optimization and its applications in AI.

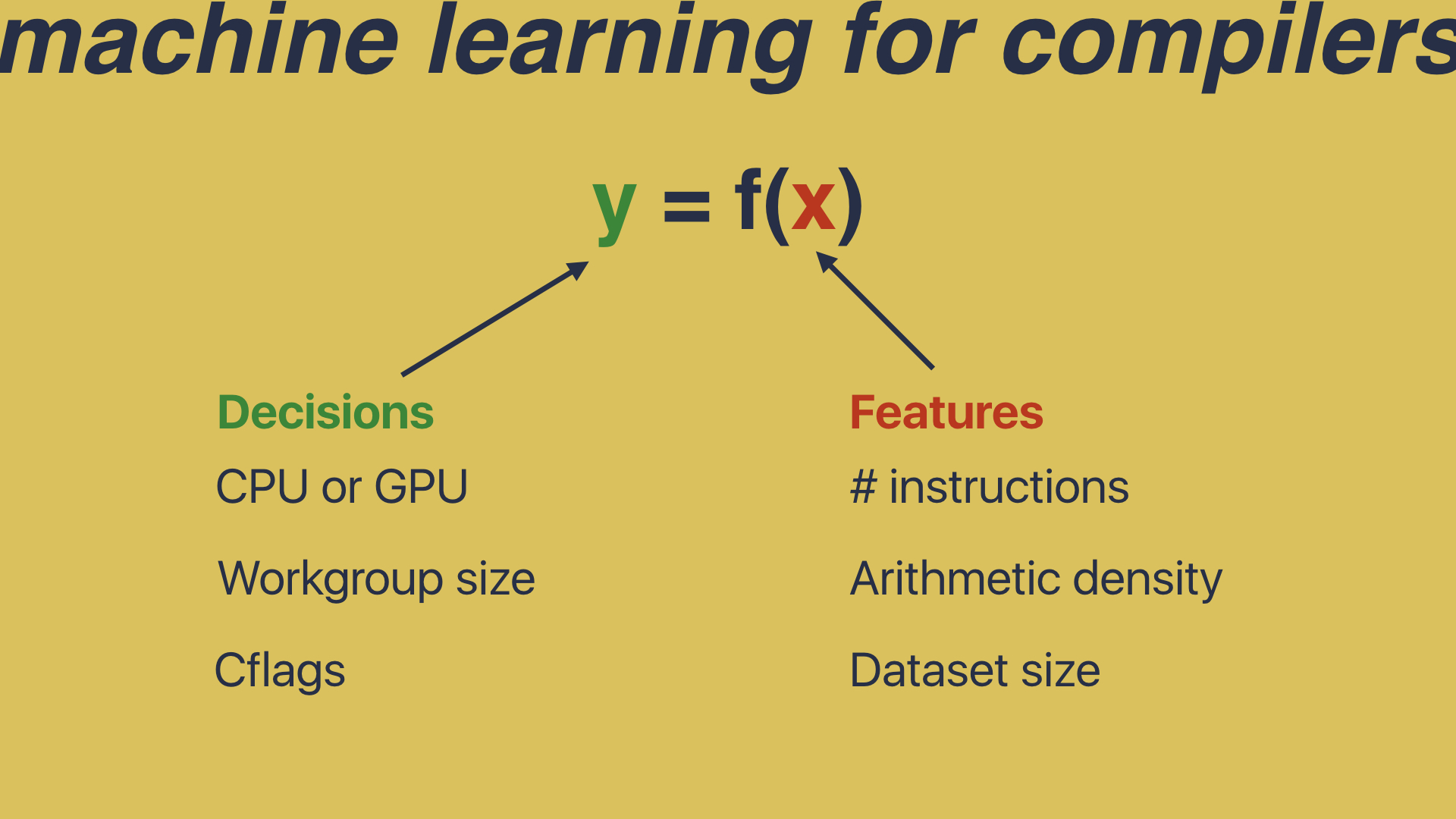

Iterative optimization is a popular approach to adapting programs to a new architecture automatically using feedback-directed compilation. In this paper, we describe the relationship between machine learning and compiler optimization and introduce the main concepts of features, models, training, and deployment. Machine learning is part of a tradition in computer science and compilation of increasing automation. The 1950s to 1970s were spent trying to automate compiler translation, e.g. lex for lexical analysis [14] and yacc for parsing [15].

The last decade, by contrast, has focused on trying to automate compiler optimization. Most compilers, however, still rely on hand-written heuristics. In a reinforcement learning formulation, the action is the optimization pass to apply next and the reward is the performance improvement.
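Below is a minimal tabular Q-learning sketch of that formulation. This is plain Q-learning, not deep RL. The three pass names and their effects form a hypothetical toy model, not the behaviour of real LLVM or GCC passes. The state counts call, redundant, and dead instructions. The action is the next pass to run. The reward is the drop in a made-up cost. In this toy, inlining gives zero immediate reward but exposes redundancy for `cse` to remove, so a good ordering requires looking ahead.

```python
import random

# Hypothetical pass names; their effects below are a toy model, not LLVM's.
PASSES = ("inline", "cse", "dce")
HORIZON = 3  # passes applied per episode


def apply_pass(state, name):
    """State is (calls, redundant, dead) instruction counts."""
    calls, redundant, dead = state
    if name == "inline":
        # Removes calls but exposes redundant code in the caller.
        return (0, redundant + 3 * calls, dead)
    if name == "cse":
        return (calls, 0, dead)
    return (calls, redundant, 0)  # "dce"


def cost(state):
    calls, redundant, dead = state
    return 3 * calls + redundant + dead


def best_action(q, step, state):
    return max(PASSES, key=lambda a: q.get((step, state, a), 0.0))


def q_learning(start, episodes=5000, alpha=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}  # (step, state, action) -> estimated total future reward
    for _ in range(episodes):
        state = start
        for step in range(HORIZON):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                action = rng.choice(PASSES)
            else:
                action = best_action(q, step, state)
            nxt = apply_pass(state, action)
            reward = cost(state) - cost(nxt)  # reward = improvement
            future = 0.0
            if step + 1 < HORIZON:
                future = max(q.get((step + 1, nxt, a), 0.0) for a in PASSES)
            key = (step, state, action)
            old = q.get(key, 0.0)
            q[key] = old + alpha * (reward + future - old)
            state = nxt
    return q


def greedy_sequence(q, start):
    """Roll out the learned policy and return (pass sequence, final cost)."""
    state, sequence = start, []
    for step in range(HORIZON):
        action = best_action(q, step, state)
        sequence.append(action)
        state = apply_pass(state, action)
    return sequence, cost(state)
```

Starting from `(2, 1, 1)` (cost 8), the learned policy runs `inline` before `cse` and reaches cost 0. A policy that maximized only the immediate reward would run `cse` and `dce` first and stop at cost 6.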

The objective is typically to minimize run time, compile time, code size, or power consumption. Rather than relying on expert compiler writers to develop clever heuristics to optimize the code, we can let the machine learn how to optimize a compiler to make the machine run faster. Machine learning relies on a set of quantifiable properties, or features, to characterize programs, and many different features can be used. Inlining doesn't provide much benefit by itself, but it enables other optimizations.
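To make the idea of program features concrete, here is a minimal sketch that turns a toy instruction stream into a fixed-length vector of opcode frequencies plus the program size. The IR and the chosen opcodes are hypothetical. Real systems use much richer features, such as loop nests, data dependences, and control-flow structure.

```python
from collections import Counter

# A hypothetical straight-line IR: one opcode per instruction.
PROGRAM = ["load", "add", "mul", "store", "load", "br", "call", "add"]

# The feature set is an illustrative choice, not a standard one.
FEATURE_OPS = ("load", "store", "add", "mul", "br", "call")


def extract_features(instructions, ops=FEATURE_OPS):
    """Return per-opcode frequencies followed by the instruction count.

    Normalizing by length makes programs of different sizes comparable.
    """
    counts = Counter(instructions)
    total = len(instructions)
    vector = [counts[op] / total if total else 0.0 for op in ops]
    vector.append(float(total))
    return vector
```

`extract_features(PROGRAM)` yields `[0.25, 0.125, 0.25, 0.125, 0.125, 0.125, 8.0]`. A vector like this is what a model sees in place of the program itself.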

End-to-end solutions using deep reinforcement learning and other machine learning algorithms are proposed. Inlining is a compiler optimization that replaces a call to a function with the body of that function. The Machine Learning and Optimization group focuses on designing new algorithms to enable the next generation of AI systems and applications, and on answering foundational questions in learning, optimization, algorithms, and mathematics.
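Here is a minimal sketch of inlining on a hypothetical tuple-based IR, where `("call", name)` is a call. A call to a known function whose body is small enough is replaced by a copy of that body. The `max_callee_size` threshold stands in for the kind of hand-written heuristic an ML model might replace. The sketch skips argument binding, return values, and variable renaming, which a real inliner must handle.

```python
def inline_calls(body, functions, max_callee_size=4):
    """Replace each call to a small, known function with a copy of its body.

    Instructions are tuples; ("call", name) is a call. Only one level is
    inlined, so a recursive callee cannot cause infinite expansion.
    """
    result = []
    for instr in body:
        if instr[0] == "call" and instr[1] in functions:
            callee = functions[instr[1]]
            if len(callee) <= max_callee_size:
                result.extend(callee)  # splice the callee's body in place of the call
                continue
        result.append(instr)  # keep everything else, including calls to large callees
    return result
```

With `functions = {"square": [("mul", "x", "x", "x")], "big": [("nop",)] * 10}`, the call to `square` is inlined. The call to `big` stays, because its body exceeds the threshold.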

Our techniques reduce compiler design complexity by relieving compiler writers of the tedium of heuristic tuning. In this case, the machine-learning algorithm isn't identifying candidates for auto-vectorization; it's simply choosing a candidate identified by a hand-written algorithm. Meta Optimization uses machine-learning techniques to automatically search the space of compiler heuristics.
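The division of labour described above can be sketched as follows. A hand-written legality check decides which loops may be vectorized, and a model only ranks the legal candidates. The `Loop` fields and `toy_score` are hypothetical. In practice the score would come from a trained profitability model, not hand-set weights.

```python
from dataclasses import dataclass


@dataclass
class Loop:
    name: str
    trip_count: int
    has_loop_carried_dependence: bool
    memory_ops: int


def is_vectorizable(loop):
    """Hand-written legality check; stands in for the compiler's own analysis."""
    return not loop.has_loop_carried_dependence


def choose_candidate(loops, score):
    """Let a model pick among the candidates the hand-written check allows."""
    candidates = [loop for loop in loops if is_vectorizable(loop)]
    if not candidates:
        return None
    return max(candidates, key=score)


def toy_score(loop):
    """Hypothetical stand-in for a learned profitability model."""
    return 0.01 * loop.trip_count - 0.5 * loop.memory_ops
```

With this split, a bad model can only produce a worse choice among legal loops, never an incorrect transformation. Correctness stays with the hand-written analysis.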

This research is an exciting path forward for further exploring ML-driven techniques for architecture design and co-optimization (e.g. compiler mapping and scheduling) across the computing stack to invent efficient accelerators. In addition, this approach opens the opportunity to extend that compiler infrastructure with more advanced and domain-specific optimizations, such as kernel fusion and compilation to accelerators. We can then put the generated SSA-form adjoint code through a compiler such as LLVM and get all the benefits of traditional compiler optimization applied to both our forward and backward passes.
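This is a minimal sketch of what "adjoint code" means for a straight-line SSA program. The forward pass evaluates each value once. The backward pass walks the instructions in reverse and accumulates adjoints, meaning the derivative of the output with respect to each value. The three-op IR is hypothetical. The sketch interprets the adjoints directly; it does not emit SSA code for LLVM.

```python
import math

# A straight-line SSA program: each value is defined exactly once.
# Entries are (dest, op, operands); "input" marks a function argument.
PROGRAM = [
    ("x", "input", ()),
    ("y", "input", ()),
    ("t1", "mul", ("x", "y")),
    ("t2", "sin", ("x",)),
    ("out", "add", ("t1", "t2")),
]


def forward(program, inputs):
    """Evaluate the program, recording every SSA value."""
    env = dict(inputs)
    for dest, op, args in program:
        if op == "input":
            continue
        a = [env[name] for name in args]
        if op == "add":
            env[dest] = a[0] + a[1]
        elif op == "mul":
            env[dest] = a[0] * a[1]
        elif op == "sin":
            env[dest] = math.sin(a[0])
        else:
            raise ValueError(f"unknown op {op}")
    return env


def backward(program, env, output):
    """Walk the program in reverse, accumulating d(output)/d(value)."""
    adj = {output: 1.0}
    for dest, op, args in reversed(program):
        g = adj.get(dest, 0.0)
        if op == "input" or g == 0.0:
            continue
        if op == "add":
            for name in args:
                adj[name] = adj.get(name, 0.0) + g
        elif op == "mul":
            lhs, rhs = args
            adj[lhs] = adj.get(lhs, 0.0) + g * env[rhs]
            adj[rhs] = adj.get(rhs, 0.0) + g * env[lhs]
        elif op == "sin":
            (arg,) = args
            adj[arg] = adj.get(arg, 0.0) + g * math.cos(env[arg])
    return adj
```

For `out = x*y + sin(x)` at `x=2, y=3`, the adjoints are `y + cos(x)` for `x` and `x` for `y`. Because every value has a single definition, the reverse walk is a simple loop. That is part of why SSA form suits this transformation.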

These solutions dramatically reduce search time while capturing the code structure, including instructions, dependencies, and data structures. This makes it possible to learn a sophisticated model that better predicts the actual performance cost and selects superior compiler optimizations.
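Here is a deliberately simple stand-in for a learned cost model: an ordinary least-squares linear fit from feature vectors to measured runtimes, solved through the normal equations. Once fitted, it ranks new candidates without running them. The two features and all the numbers are hypothetical. The systems described above learn from much richer program representations.

```python
def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_cost_model(rows, runtimes):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    X = [list(row) + [1.0] for row in rows]  # trailing 1.0 is the bias term
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(X, runtimes)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, row):
    """Predicted runtime for one feature row."""
    return sum(w * v for w, v in zip(weights, list(row) + [1.0]))


# Hypothetical measurements: [instruction count, cache misses per iteration] -> runtime.
TRAIN_ROWS = [[10.0, 1.0], [20.0, 0.0], [15.0, 3.0], [30.0, 2.0]]
TRAIN_TIMES = [24.0, 41.0, 40.0, 67.0]
```

These toy measurements follow `2*f1 + 3*f2 + 1` exactly, so the fit recovers weights close to `[2, 3, 1]`. Picking `min(candidates, key=lambda r: predict(w, r))` then selects the candidate with the lowest predicted runtime, without measuring any of them.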
