Machine Learning Methods Affected By Feature Scaling

Feature scaling is normally applied when the features in your data have ranges that vary wildly. One objective of feature scaling is to ensure that optimization algorithms such as gradient descent can converge to a solution, or to make that convergence quicker.


Putting features on a common scale makes it easier to compare them linearly.

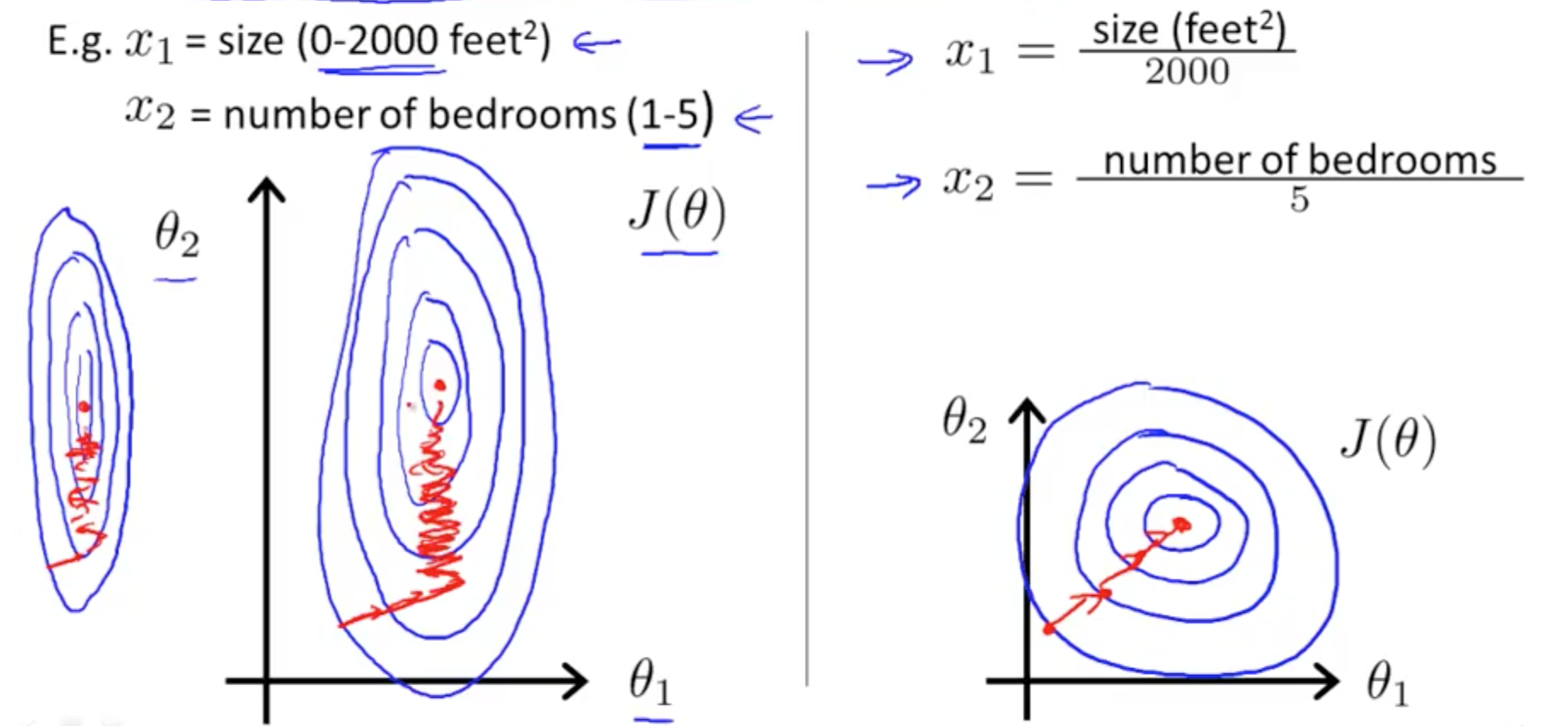

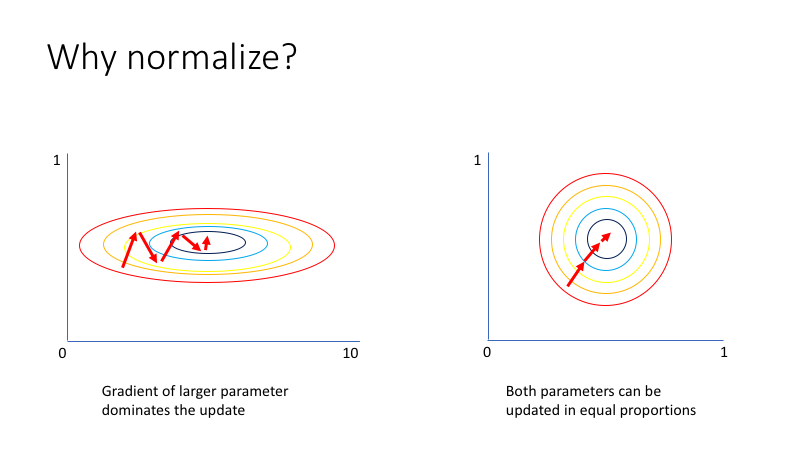

Whether scaling matters is not just algorithm dependent; it also depends on your data. Take a look at the formula for gradient descent below. If you scale your features, the contours of the cost function might look like circles instead of elongated ellipses.

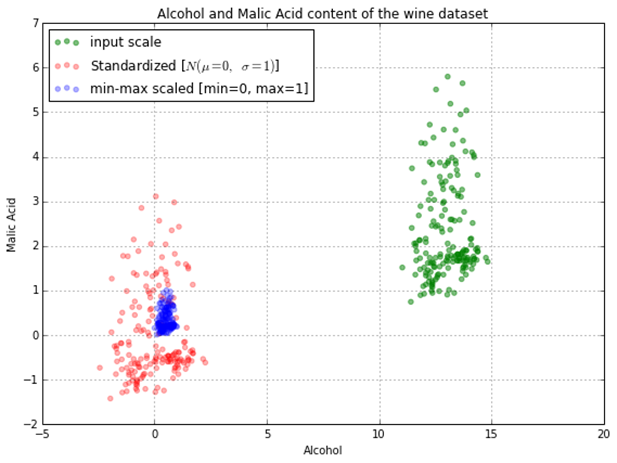

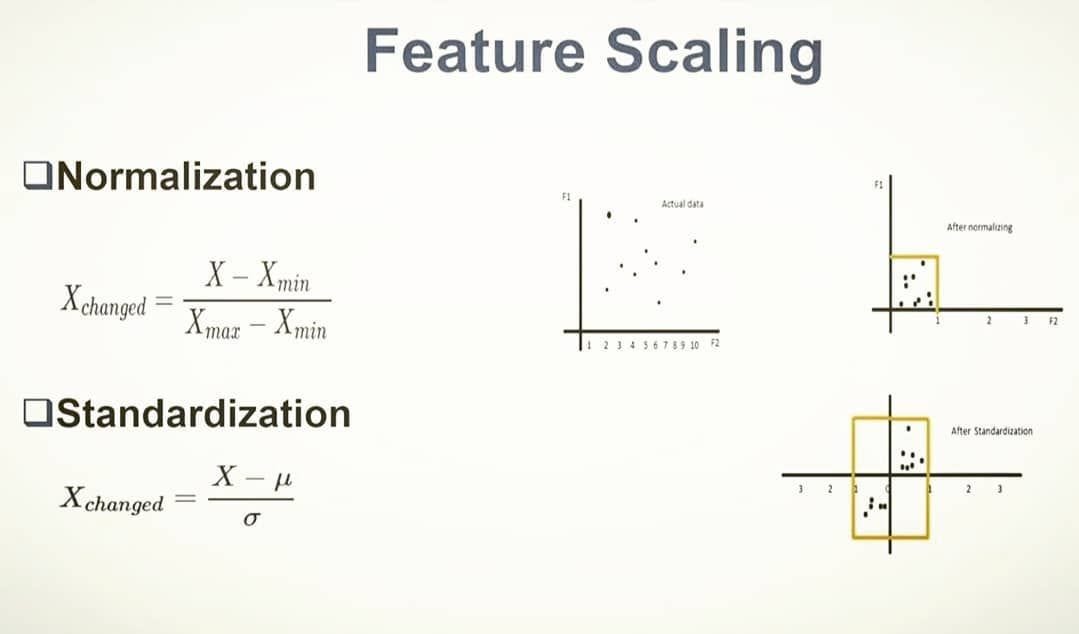

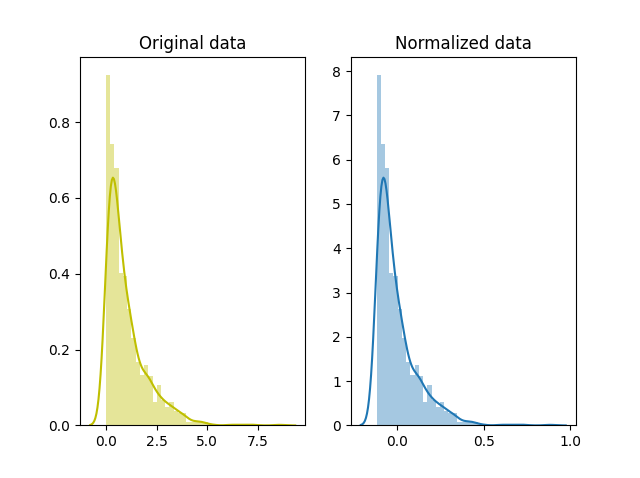

The most common feature scaling techniques are normalization and standardization. In general, algorithms that exploit distances or similarities between data samples (for example, in the form of a scalar product), such as k-NN and SVM, are sensitive to feature transformations.

Which machine learning algorithms are affected by feature scaling? The definition is as follows: feature scaling is a method used to normalize the range of independent variables, or features, of data. There are two types of scaling that you may want to consider: normalization and standardization.

Feature scaling can have a significant effect on a machine learning model. In gradient descent, the feature value X appears in the update formula, so its magnitude affects the step size.
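For reference, the dependence on the feature value can be written out. This assumes the standard batch gradient descent update for linear regression, with hypothesis $h_\theta$, learning rate $\alpha$, and $m$ training examples; these symbols are assumptions, since the text does not spell the formula out:

$$
\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\left(x^{(i)}\right) - y^{(i)} \right) x_j^{(i)}
$$

Because every term in the sum is multiplied by $x_j^{(i)}$, parameters attached to large-valued features receive larger updates than parameters attached to small-valued features.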

Machine learning algorithms such as linear regression, logistic regression, and neural networks, which use gradient descent as an optimization technique, generally need the data to be scaled. Algorithms commonly discussed in this context include Naïve Bayes, k-Nearest Neighbor (KNN), Support Vector Machine (SVM), Decision Trees, and Neural Network (NN).

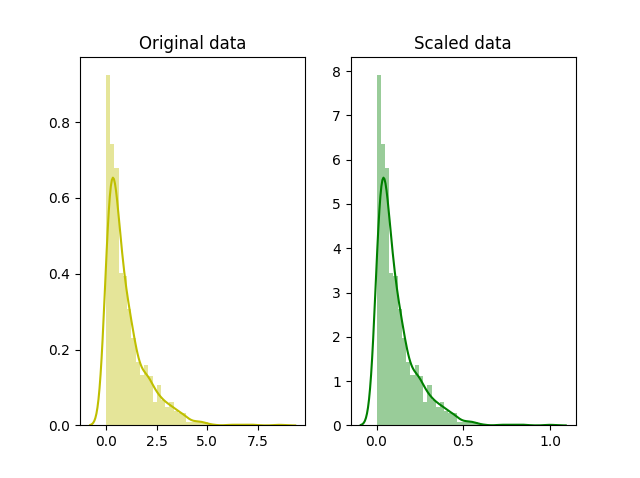

In this project we will investigate how scaling the data impacts the performance of a variety of machine learning algorithms for prediction. Why scaling? Most of the time, your dataset will contain features that vary widely in magnitude, units, and range. There are several normalization methods, among which the common ones are the Z-score and Min-Max.
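The two methods named above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function names and the salary values are hypothetical, and both functions assume the input values are not all identical.

```python
from statistics import fmean, pstdev


def min_max_scale(values, low=0.0, high=1.0):
    """Rescale values linearly so the smallest maps to `low` and the largest to `high`."""
    v_min, v_max = min(values), max(values)
    span = v_max - v_min  # assumes the values are not all identical
    return [low + (v - v_min) * (high - low) / span for v in values]


def z_score_scale(values):
    """Shift and rescale values to zero mean and unit (population) standard deviation."""
    mean = fmean(values)
    std = pstdev(values)  # assumes the values are not all identical
    return [(v - mean) / std for v in values]


# Hypothetical salary column, used only for illustration.
salaries = [30_000.0, 45_000.0, 60_000.0, 90_000.0]

normalized = min_max_scale(salaries)            # bounded to [0, 1]
symmetric = min_max_scale(salaries, -1.0, 1.0)  # bounded to [-1, 1]
standardized = z_score_scale(salaries)          # mean 0, standard deviation 1
```

Min-Max preserves the shape of the distribution inside a fixed interval, while the Z-score leaves the values unbounded but centered.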

Both normalization and standardization can be achieved using the scikit-learn library.

Principal Component Analysis (PCA) is another method where scaling matters. Standardization is also a common requirement for many models in scikit-learn.

Without scaling, gradient descent (its path is drawn in red) could take a long time and go back and forth before finding the optimal solution. With scaling, no feature can dominate the others, and gradient descent can take a much more direct path to the minimum.
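The effect on convergence can be reproduced with a small experiment. The sketch below is an illustration under stated assumptions, not a benchmark: the four-point dataset, the intercept-free linear model, the tolerance, and both learning rates are hypothetical choices. Each run uses a learning rate that is stable for its own data; a step of 0.5 would diverge on the raw features.

```python
from statistics import fmean, pstdev


def standardize_columns(rows):
    """Z-score each column: subtract its mean and divide by its standard deviation."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def steps_to_converge(rows, targets, lr, tol=1e-6, max_steps=1_000_000):
    """Run batch gradient descent on a linear model without intercept.

    Returns the number of updates performed before every gradient
    component falls below `tol` in absolute value.
    """
    weights = [0.0] * len(rows[0])
    m = len(rows)
    for step in range(max_steps):
        residuals = [
            sum(w * x for w, x in zip(weights, row)) - y
            for row, y in zip(rows, targets)
        ]
        gradient = [
            sum(r * row[j] for r, row in zip(residuals, rows)) / m
            for j in range(len(weights))
        ]
        if max(abs(g) for g in gradient) < tol:
            return step
        weights = [w - lr * g for w, g in zip(weights, gradient)]
    return max_steps


# Hypothetical data: the second feature is 100 times larger than the first.
raw_rows = [[1.0, 100.0], [-1.0, 100.0], [1.0, -100.0], [-1.0, -100.0]]
targets = [2.0 * x1 + 0.005 * x2 for x1, x2 in raw_rows]

# Hand-picked learning rates (assumptions): 1e-4 is stable for the raw data,
# while the standardized data tolerates a much larger step of 0.5.
steps_raw = steps_to_converge(raw_rows, targets, lr=1e-4)
steps_scaled = steps_to_converge(standardize_columns(raw_rows), targets, lr=0.5)

print(f"raw features:    {steps_raw} steps")
print(f"scaled features: {steps_scaled} steps")
```

The large feature caps the usable learning rate, so the weight on the small feature crawls toward its optimum; after standardization a single larger rate suits both weights.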

Here are examples of algorithms where feature scaling matters. Feature scaling algorithms will scale features such as Age, Salary, and BHK into a fixed range, say [-1, 1] or [0, 1]. This can make the difference between a weak machine learning model and a strong one.

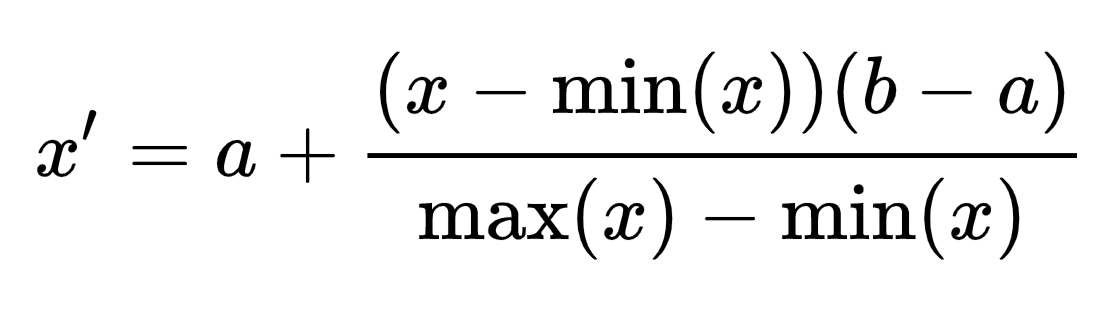

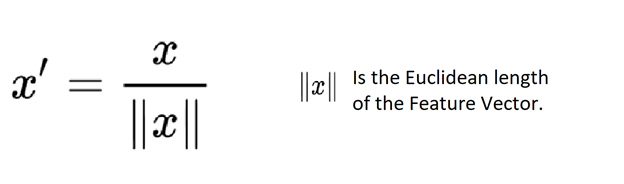

K-Means uses the Euclidean distance measure, so feature scaling matters here. Normalization is used when we want to bound our values between two numbers, typically [0, 1] or [-1, 1].
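To see why a Euclidean distance is sensitive to scale, consider a nearest-neighbour lookup on two features with very different ranges. The following is a minimal sketch; the (age, salary) records and the helper names are hypothetical and chosen only for illustration.

```python
from math import dist


def min_max_columns(rows):
    """Rescale every column of `rows` to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    spans = [max(col) - min(col) for col in columns]  # assumes no constant column
    return [
        tuple((v - lo) / span for v, lo, span in zip(row, lows, spans))
        for row in rows
    ]


def nearest_index(rows, query_index):
    """Index of the row closest (Euclidean) to rows[query_index], excluding itself."""
    query = rows[query_index]
    others = [i for i in range(len(rows)) if i != query_index]
    return min(others, key=lambda i: dist(query, rows[i]))


# Hypothetical (age, salary) records.
people = [(25, 50_000), (60, 51_000), (26, 53_000), (40, 90_000)]

raw_neighbor = nearest_index(people, 0)
scaled_neighbor = nearest_index(min_max_columns(people), 0)

print(raw_neighbor, scaled_neighbor)
```

On the raw data the salary column dominates the distance, so the 25-year-old is matched with the 60-year-old who earns almost the same. After scaling, both features contribute and the 26-year-old becomes the nearest neighbour.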

PCA tries to find the directions of maximum variance, so feature scaling is required here too. Normalization rescales the data from its original range so that all values fall within the range of 0 to 1. Linear regression solved by ordinary least squares, where scaling the attributes has no effect on the fitted predictions, may benefit from another preprocessing technique such as encoding nominal-valued attributes as fixed numerical values.
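The remark about maximum variance can be illustrated for the two-feature case, where the first principal component has a closed form. This is a sketch under assumptions: the ages and salaries are hypothetical, and the principal-axis angle formula used here applies only to a 2x2 covariance matrix.

```python
from math import atan2, cos, sin
from statistics import fmean, pstdev


def leading_direction(xs, ys):
    """Unit vector along the first principal component of two features."""
    mx, my = fmean(xs), fmean(ys)
    n = len(xs)
    var_x = sum((x - mx) ** 2 for x in xs) / n
    var_y = sum((y - my) ** 2 for y in ys) / n
    cov_xy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    # Principal-axis angle of the covariance matrix [[var_x, cov_xy], [cov_xy, var_y]].
    theta = 0.5 * atan2(2.0 * cov_xy, var_x - var_y)
    return cos(theta), sin(theta)


def standardize(values):
    """Z-score a single feature."""
    mean, std = fmean(values), pstdev(values)
    return [(v - mean) / std for v in values]


# Hypothetical ages and salaries, used only for illustration.
ages = [25.0, 32.0, 41.0, 50.0, 60.0]
salaries = [30_000.0, 42_000.0, 55_000.0, 61_000.0, 90_000.0]

raw_direction = leading_direction(ages, salaries)
scaled_direction = leading_direction(standardize(ages), standardize(salaries))

print(raw_direction)
print(scaled_direction)
```

On the raw data the first component points almost entirely along the salary axis, simply because salary has the larger numeric variance. After standardization both features carry equal weight in the component.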

Support Vector Machines, linear regression and other linear models, and K-Nearest-Neighbours also require feature scaling.

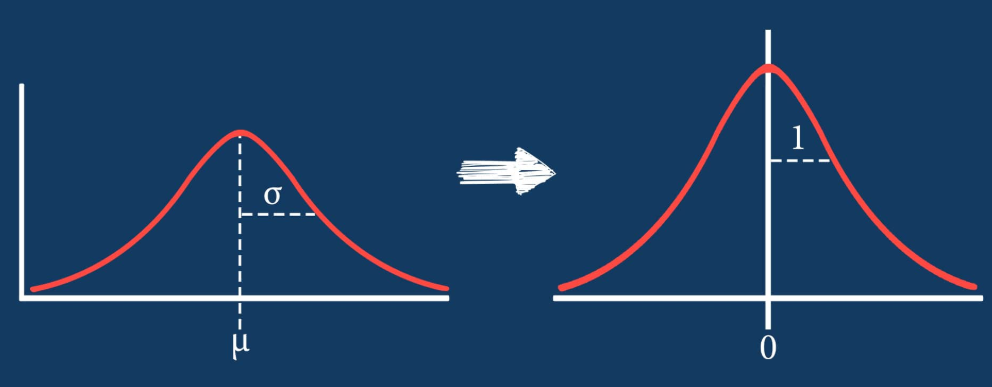

The two most important scaling techniques are standardization and normalization. Graphical-model based classifiers such as Fisher LDA or Naive Bayes, as well as decision trees and tree-based ensemble methods (RF, XGB), are invariant to feature scaling, but scaling might still be a good idea.
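The invariance of tree-based methods can be checked on a simplified case. The sketch below uses a one-feature decision stump rather than a full tree, and the ages, labels, and function name are hypothetical. A split depends only on the ordering of the values, and Min-Max scaling preserves that ordering.

```python
def best_split_left_side(values, labels):
    """Find the single-threshold split with the fewest misclassifications.

    Returns the set of sample indices that fall on the low side of the split.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])

    def errors(indices):
        # Misclassifications if this side predicts its majority label (0 or 1).
        ones = sum(labels[i] for i in indices)
        return min(ones, len(indices) - ones)

    best_errors, best_left = None, None
    for cut in range(1, len(order)):
        left, right = order[:cut], order[cut:]
        if values[left[-1]] == values[right[0]]:
            continue  # no threshold can separate equal values
        total = errors(left) + errors(right)
        if best_errors is None or total < best_errors:
            best_errors, best_left = total, frozenset(left)
    return best_left


# Hypothetical ages and binary labels, used only for illustration.
ages = [22, 25, 31, 47, 52, 60]
labels = [0, 0, 0, 1, 1, 1]

low, high = min(ages), max(ages)
scaled_ages = [(a - low) / (high - low) for a in ages]

raw_partition = best_split_left_side(ages, labels)
scaled_partition = best_split_left_side(scaled_ages, labels)

print(raw_partition == scaled_partition)
```

The threshold value changes with the scale, but the samples on each side of the split stay the same, so the predictions do not change.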

Standardization transforms the features in your dataset so they have a mean of zero and a variance of one. Feature scaling is an important technique in machine learning, and one of the most important steps in preprocessing data before creating a machine learning model.
